
...

- Replace all local storage with remote storage - Instead of using local storage on Kafka brokers, only remote storage is used for storing log segments and offset index files. While this reduces local storage requirements, it cannot leverage the OS page cache and local disk for efficient reads of the latest data, as Kafka does today.

- Implement Kafka API on another store - This is an approach taken by some vendors, where the Kafka API is implemented on a different distributed, scalable storage system (for example, HDFS). Such an option does not leverage Kafka beyond API compliance and requires the much riskier option of replacing the entire Kafka cluster with another system.

- Client directly reads remote log segments from the remote storage - The client could read log segments directly from remote storage instead of having the Kafka broker serve them. This reduces Kafka broker changes and has the benefit of removing an extra hop. However, it bypasses Kafka security completely, increases Kafka client library complexity and footprint, and causes compatibility issues with existing Kafka client libraries, so it is not considered.

- Store all remote segment metadata in remote storage. This approach works with storage systems that provide strongly consistent metadata, such as HDFS, but does not work with S3 and GCS. Frequently calling the LIST API on S3 or GCS also incurs huge costs. So we chose to store metadata in a Kafka topic in the default implementation, but allow users to use other methods with their own RemoteLogMetadataManager (RLMM) implementations.

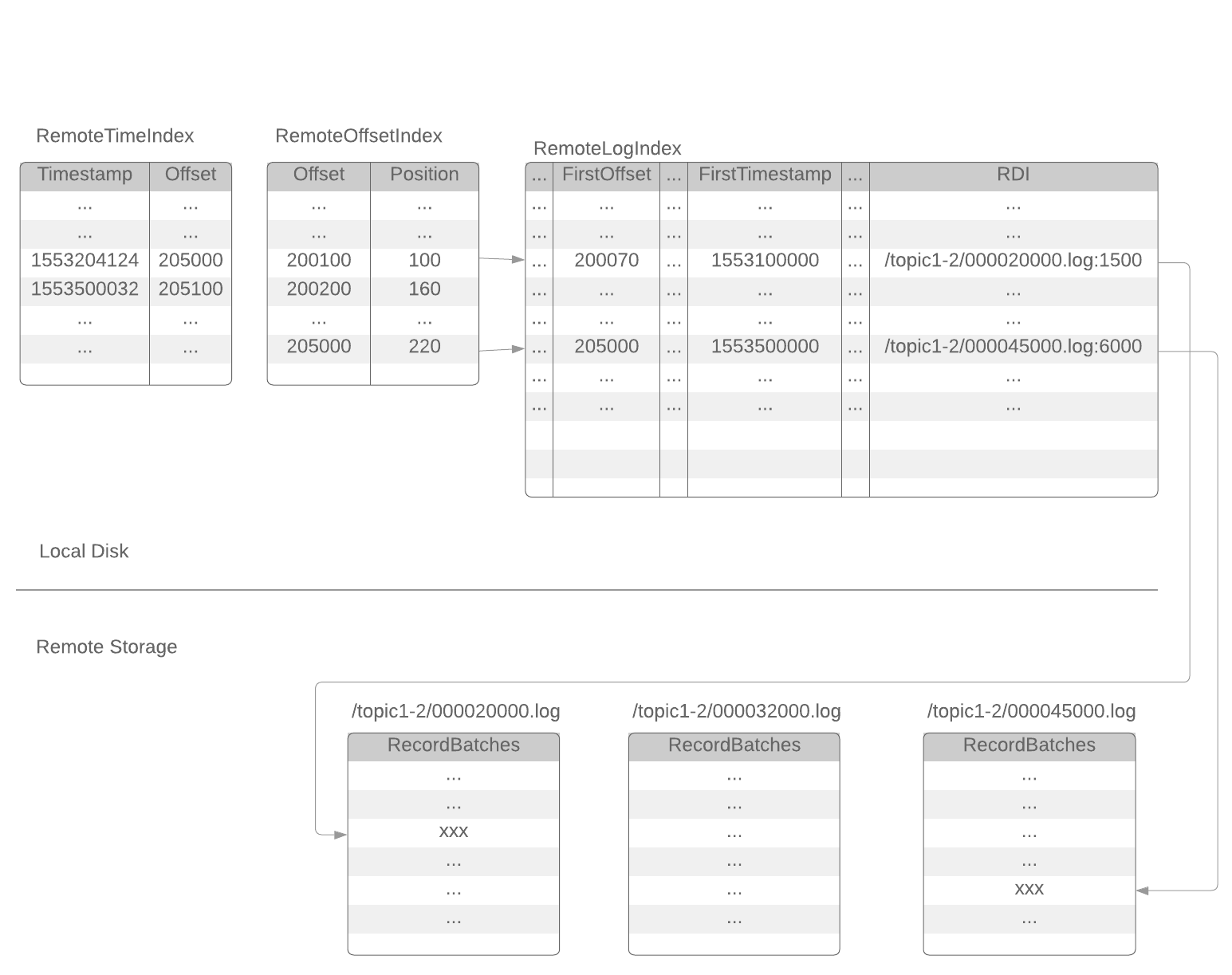

- Cache all remote log indexes in local storage. Store remote log segment information in local storage.
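To illustrate why a metadata topic avoids the LIST-call problem described above, here is a minimal Python sketch, not Kafka's implementation: a broker replays remote segment metadata events in the order they were read from the topic and materializes them into a lookup structure, so answering "which remote segment holds offset X?" never touches remote storage. The event fields, the two simplified state names, and the `InMemoryMetadataCache` class are hypothetical; the KIP defines the real event schemas.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class SegmentMetadataEvent:
    # Hypothetical, simplified event; the KIP defines the real schemas.
    segment_id: str
    start_offset: int
    end_offset: int  # inclusive
    state: str       # simplified: "COPY_FINISHED" or "DELETE_FINISHED"


class InMemoryMetadataCache:
    """Materializes metadata events read from a metadata topic into a lookup
    structure, so offset lookups need no LIST call against remote storage."""

    def __init__(self) -> None:
        self._segments: Dict[str, SegmentMetadataEvent] = {}

    def apply(self, event: SegmentMetadataEvent) -> None:
        # Events are applied in topic order; the latest event per segment wins.
        if event.state == "DELETE_FINISHED":
            self._segments.pop(event.segment_id, None)
        elif event.state == "COPY_FINISHED":
            self._segments[event.segment_id] = event

    def segment_for_offset(self, offset: int) -> Optional[str]:
        # Sorting per lookup keeps the sketch short; a real cache would
        # maintain a sorted index incrementally.
        ordered = sorted(self._segments.values(), key=lambda s: s.start_offset)
        starts = [s.start_offset for s in ordered]
        i = bisect_right(starts, offset) - 1
        if i >= 0 and offset <= ordered[i].end_offset:
            return ordered[i].segment_id
        return None


# Replay events in the order they were consumed from the metadata topic.
cache = InMemoryMetadataCache()
for event in [
    SegmentMetadataEvent("seg-a", 0, 99, "COPY_FINISHED"),
    SegmentMetadataEvent("seg-b", 100, 199, "COPY_FINISHED"),
    SegmentMetadataEvent("seg-a", 0, 99, "DELETE_FINISHED"),
]:
    cache.apply(event)

print(cache.segment_for_offset(150))  # seg-b
print(cache.segment_for_offset(50))   # None (seg-a was deleted)
```

A dictionary keeps the example short; the meeting notes mention RocksDB instances as a persistent alternative for caching this metadata, and a custom RLMM could back the same lookups with any store it chooses.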

Meeting Notes

- Discussion Recording

- Notes

- Discussed keeping a producer ID snapshot for each log segment, which will be copied to remote storage. There is already a PR for KAFKA-9393 that addresses similar requirements.

- Discussed whether, when local data is not available on the brokers, the state can be recovered from remote storage.

- We will update the KIP by early next week with the following:

- Topic deletion design proposed/discussed in the earlier meeting. This includes the schemas of remote log segment metadata events stored in the topic.

- The producer ID snapshot for each segment, as discussed.

- A ListOffsets API version bump to support the offset for the earliest local timestamp.

- Justifying the rationale behind keeping RLMM and local leader epoch as the source of truth.

- RocksDB instances as a cache for remote log segment metadata.

- Any other missing updates planned earlier.
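The ListOffsets item above reflects a distinction that tiered storage introduces: a partition's log start offset may point at data that now exists only in remote storage, while the broker's local log begins at a later offset (the earliest local offset). The Python sketch below models those three offsets and decides whether a fetch would be served locally or from remote storage. It is an illustration under simplifying assumptions (no leader epochs, isolation levels, or timestamp lookups), and `PartitionOffsets` and `read_source` are hypothetical names, not Kafka APIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PartitionOffsets:
    # Hypothetical, simplified view of a partition with tiered storage.
    log_start_offset: int        # earliest offset overall; may exist only in remote storage
    local_log_start_offset: int  # earliest offset still on the broker's local disk
    log_end_offset: int          # offset of the next record to be appended

    def __post_init__(self) -> None:
        if not (self.log_start_offset <= self.local_log_start_offset <= self.log_end_offset):
            raise ValueError("expected log_start <= local_log_start <= log_end")


def read_source(offsets: PartitionOffsets, fetch_offset: int) -> str:
    """Return where a fetch at fetch_offset would be served from (illustrative)."""
    if fetch_offset < offsets.log_start_offset or fetch_offset > offsets.log_end_offset:
        return "out_of_range"
    if fetch_offset < offsets.local_log_start_offset:
        return "remote"  # data was tiered and has been removed from local disk
    return "local"       # served from local segments and the OS page cache


p = PartitionOffsets(log_start_offset=0, local_log_start_offset=500, log_end_offset=800)
print(read_source(p, 100))  # remote
print(read_source(p, 600))  # local
print(read_source(p, 900))  # out_of_range
```

Exposing the earliest local offset through ListOffsets lets callers see where the local log begins, separately from the overall log start offset.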

- Discussion Recording

- Notes

- Discussed the proposed topic deletion lifecycle with and without KIP-516.

- We will update the KIP with the design details.

- Jun mentioned that KIP-516 will be available in 3.0, so we can proceed with the design assuming TopicId support.

- Discussed remote log metadata truncation and the loss of data in the Kafka brokers' local storage.

- We will update the KIP with possible approaches and add any APIs needed for RemoteStorageManager (low priority for now).

...