...

- find the corresponding RemoteLogSegmentId from RLMM, and the startPosition and endPosition from the offset index.

- try to build a Records instance from the data fetched with RSM.fetchLogSegmentData(RemoteLogSegmentMetadata remoteLogSegmentMetadata, Long startPosition, Long endPosition)

- if this succeeds, RemoteFetchPurgatory is notified to return the data to the client.

- if the remote segment file has already been deleted, RemoteFetchPurgatory is notified to return an error to the client.

- if the remote storage operation fails (remote storage is temporarily unavailable), the operation is retried with exponential back-off until the original consumer fetch request times out.
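The retry behaviour in the last step can be sketched as follows. This is a minimal illustration, not the broker's implementation: the class `RemoteFetchRetrySketch`, the `RemoteRead` interface, the `RetriableRemoteException` type, and the back-off parameters are all hypothetical names introduced here. Non-retriable failures (for example, a segment that was already deleted) are assumed to surface as other exceptions and are not retried.

```java
import java.util.Optional;
import java.util.concurrent.TimeUnit;

/** Hypothetical sketch; none of these names are part of Kafka. */
final class RemoteFetchRetrySketch {

    /** Assumed signal that remote storage is temporarily unavailable. */
    static final class RetriableRemoteException extends RuntimeException {
        RetriableRemoteException(String message) { super(message); }
    }

    /** Stand-in for a remote read such as RSM.fetchLogSegmentData(...). */
    interface RemoteRead<T> {
        T read();
    }

    /**
     * Retries the read with exponential back-off until it succeeds or the
     * fetch request timeout elapses. Returns empty on timeout or interruption.
     */
    static <T> Optional<T> fetchWithBackoff(RemoteRead<T> read,
                                            long timeoutMs,
                                            long initialBackoffMs,
                                            long maxBackoffMs) {
        long deadline = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(timeoutMs);
        long backoffMs = initialBackoffMs;
        while (true) {
            try {
                return Optional.of(read.read());
            } catch (RetriableRemoteException e) {
                long remainingMs = TimeUnit.NANOSECONDS.toMillis(deadline - System.nanoTime());
                if (remainingMs <= 0) {
                    return Optional.empty(); // original fetch request timed out
                }
                try {
                    // Never sleep past the deadline.
                    Thread.sleep(Math.min(backoffMs, remainingMs));
                } catch (InterruptedException ie) {
                    Thread.currentThread().interrupt();
                    return Optional.empty();
                }
                // Double the wait for the next attempt, up to the cap.
                backoffMs = Math.min(backoffMs * 2, maxBackoffMs);
            }
        }
    }
}
```

Capping the sleep at the remaining time keeps the last attempt close to the deadline instead of overshooting it by a full back-off interval.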

Remote Log

...

Metadata State transitions

The leader broker copies log segments to the remote storage. It first puts the remote log segment metadata with the state “COPY_SEGMENT_STARTED” and updates the state to “COPY_SEGMENT_FINISHED” once the copy is successful. Leaders also remove remote log segments based on the retention policy. Before a log segment is removed using RSM.deleteLogSegment(RemoteLogSegmentMetadata remoteLogSegmentMetadata), the leader updates the remote log segment state to DELETE_SEGMENT_STARTED, and it updates the state to DELETE_SEGMENT_FINISHED once the deletion is successful.
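The segment states above form a simple state machine. The sketch below encodes only the transitions this paragraph describes; the class `SegmentStateSketch` and its `isValidTransition` method are hypothetical, and an actual implementation may permit additional transitions (for example, cleaning up a copy that never finished).

```java
import java.util.EnumMap;
import java.util.EnumSet;
import java.util.Map;
import java.util.Set;

/** Hypothetical sketch of the segment state transitions described in the text. */
final class SegmentStateSketch {

    enum State {
        COPY_SEGMENT_STARTED,
        COPY_SEGMENT_FINISHED,
        DELETE_SEGMENT_STARTED,
        DELETE_SEGMENT_FINISHED
    }

    // Assumption: only the linear chain from the paragraph is allowed.
    private static final Map<State, Set<State>> ALLOWED = new EnumMap<>(State.class);

    static {
        ALLOWED.put(State.COPY_SEGMENT_STARTED, EnumSet.of(State.COPY_SEGMENT_FINISHED));
        ALLOWED.put(State.COPY_SEGMENT_FINISHED, EnumSet.of(State.DELETE_SEGMENT_STARTED));
        ALLOWED.put(State.DELETE_SEGMENT_STARTED, EnumSet.of(State.DELETE_SEGMENT_FINISHED));
        ALLOWED.put(State.DELETE_SEGMENT_FINISHED, EnumSet.noneOf(State.class));
    }

    /** Returns true if a segment may move directly from one state to the other. */
    static boolean isValidTransition(State from, State to) {
        return ALLOWED.get(from).contains(to);
    }
}
```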

When a partition is deleted, the controller updates its state in RLMM with DELETE_MARKED. A task on each leader of the “__remote_log_segment_metadata_topic” partitions consumes the messages, checks for messages which have the state “DELETE_MARKED”, and schedules them to be deleted. It commits the consumer offsets up to which these markers were handled, so that when leadership moves to another broker the new leader can continue from where the previous one left off. The controller considers the topic partition deleted only when it determines, by using RLMM, that there are no log segments for that topic partition, and it expects RLMM to have a mechanism to clean up the remote log segments. This process for the default RLMM is described in detail in the topic deletion lifecycle section below.

RemoteLogMetadataManager implemented with an internal topic

...

| remote.log.metadata.topic.replication.factor | Replication factor of the topic. Default: 3 |

| remote.log.metadata.topic.partitions | Number of partitions of the topic. Default: 50 |

| remote.log.metadata.topic.retention.ms | Retention of the topic in milliseconds. Default: 365 * 24 * 60 * 60 * 1000 (1 yr) |

| remote.log.metadata.manager.listener.name | Listener name to be used to connect to the local broker by the RemoteLogMetadataManager implementation on the broker. The respective endpoint address is passed with the "bootstrap.servers" property while invoking RemoteLogMetadataManager#configure(Map<String, ?> props). This is used by the Kafka clients created in the RemoteLogMetadataManager implementation. |

| remote.log.metadata.* | Any other properties should be prefixed with "remote.log.metadata." and these will be passed to RemoteLogMetadataManager#configure(Map<String, ?> props). For example, the security configuration needed to connect to the local broker on the configured listener is passed with props. |
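As a rough illustration of the prefix rule in the table, the sketch below selects the properties that would be handed to RemoteLogMetadataManager#configure. The class `RlmmConfigSketch` and the method `rlmmProps` are hypothetical, and whether the prefix is kept or stripped before the properties are passed on is not stated here; this sketch keeps the keys unchanged. It also spells out the retention default, which has to be computed in `long` arithmetic because the product exceeds the range of a Java `int`.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical sketch of selecting RLMM properties by prefix. */
final class RlmmConfigSketch {

    static final String PREFIX = "remote.log.metadata.";

    // 365 * 24 * 60 * 60 * 1000 overflows int, so the first factor is a long.
    static final long DEFAULT_RETENTION_MS = 365L * 24 * 60 * 60 * 1000;

    /** Returns only the entries whose key starts with the RLMM prefix (keys kept as-is). */
    static Map<String, Object> rlmmProps(Map<String, ?> brokerProps) {
        Map<String, Object> selected = new HashMap<>();
        for (Map.Entry<String, ?> entry : brokerProps.entrySet()) {
            if (entry.getKey().startsWith(PREFIX)) {
                selected.put(entry.getKey(), entry.getValue());
            }
        }
        return selected;
    }
}
```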

Topic deletion lifecycle

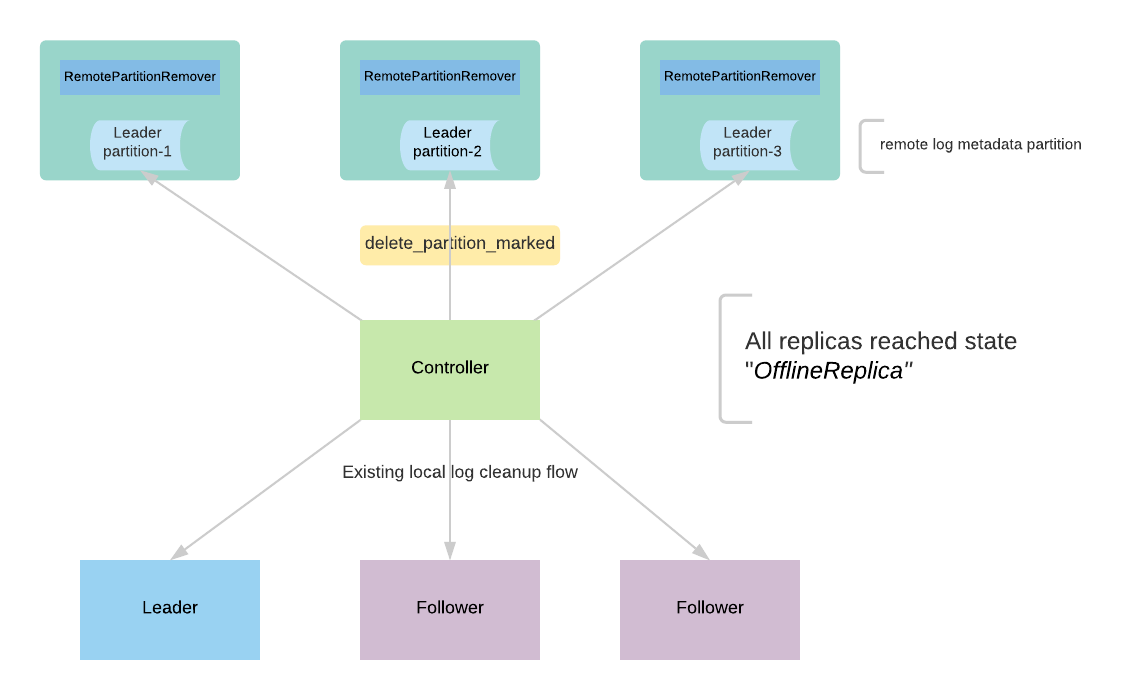

The controller receives a delete request for a topic. It follows the existing deletion protocol and takes all the replicas offline so that they stop serving fetch requests. After all replicas reach this state, the controller publishes an event to the remote log metadata topic marking the topic as deleted.

A RemoteLogCleaner instance is created on the leader of each remote log segment metadata topic partition. It consumes messages from that partition and filters the delete partition events which need to be processed. It also maintains a committed offset for this instance to handle leader failover to a different replica, so that the new leader can resume processing messages from where the previous one left off.

RemoteLogCleaner (RLC) processes the request with the following flow, as shown in the diagram.

- The controller publishes a delete_partition_marked event to say that the partition is marked for deletion. Multiple events may be published when the controller restarts or fails over; these events are deduplicated by RLC.

- RLC receives the delete_partition_marked event and processes it if it has not already been processed.

- RLC publishes a delete_partition_started event, which indicates that the partition deletion has started.

- RLC gets all the remote log segments for the partition and deletes each of them with the following steps.

- Publish delete_segment_started event with the segment id.

- RLC deletes the segment using RSM.

- Publish delete_segment_finished event with segment id once it is successful.

- Publish delete_partition_finished once all the segments have been deleted successfully.
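The flow above can be sketched as a small in-memory model. Everything here is hypothetical and simplified: the class `RemoteLogCleanerSketch`, the string-encoded events, and the `Consumer<String>` standing in for the RSM delete call are illustrative only. Deduplication state is held in memory, whereas the text describes committed offsets for surviving failover, and a failed segment delete simply propagates the exception without any retry handling.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.function.Consumer;

/** Hypothetical in-memory model of the RemoteLogCleaner (RLC) flow. */
final class RemoteLogCleanerSketch {

    private final Set<String> handledPartitions = new HashSet<>();
    private final List<String> published = new ArrayList<>();
    private final Consumer<String> segmentDeleter; // stand-in for the RSM delete call

    RemoteLogCleanerSketch(Consumer<String> segmentDeleter) {
        this.segmentDeleter = segmentDeleter;
    }

    /**
     * Handles a delete_partition_marked event. Returns false if the event
     * is a duplicate for a partition that was already handled.
     */
    boolean onDeletePartitionMarked(String partition, List<String> segmentIds) {
        if (!handledPartitions.add(partition)) {
            return false; // duplicate marker, e.g. after a controller restart
        }
        published.add("delete_partition_started:" + partition);
        for (String segmentId : segmentIds) {
            published.add("delete_segment_started:" + segmentId);
            segmentDeleter.accept(segmentId);
            // Only reached when the delete did not throw.
            published.add("delete_segment_finished:" + segmentId);
        }
        published.add("delete_partition_finished:" + partition);
        return true;
    }

    /** Returns a copy of the events published so far, in order. */
    List<String> publishedEvents() {
        return new ArrayList<>(published);
    }
}
```

Publishing the started event before calling the deleter and the finished event only afterwards means that a crash between the two leaves a started marker behind, which a later pass could use to resume the deletion.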

[We will add more details later about how the resultant state for each topic partition is computed.]

Public Interfaces

Compacted topics will not have remote storage support.

...