node may still believe it is leader and send fetch requests to the rest of the quorum

| Table of Contents |

|---|

Status

Current state: Under Discussion

...

Disruptive server scenarios

Network Partition

Let's consider two scenarios -

When a follower becomes partitioned from the rest of the quorum, it will continuously increase its epoch to start elections until it is able to regain connection to the leader/rest of the quorum. When the follower regains connectivity, it will disturb the rest of the quorum as they will be forced to participate in an unnecessary election. While this situation only results in one leader stepping down, as we start supporting larger quorums these events may occur more frequently per quorum.

...

- It becomes aware that it should step down as a leader/initiates a new election.

This is now the follower case covered above. - It continues to believe it is the leader until rejoining the rest of the quorum.

On rejoining the rest of the quorum, it will start receiving messages w/ a different epoch. If higher than its own, the node will know to resign as leader. If lower than its own, the node may still believe it is leader and send fetch requests to the rest of the quorum. Nodes will transition to Unattached state and election(s) will ensue (todo)

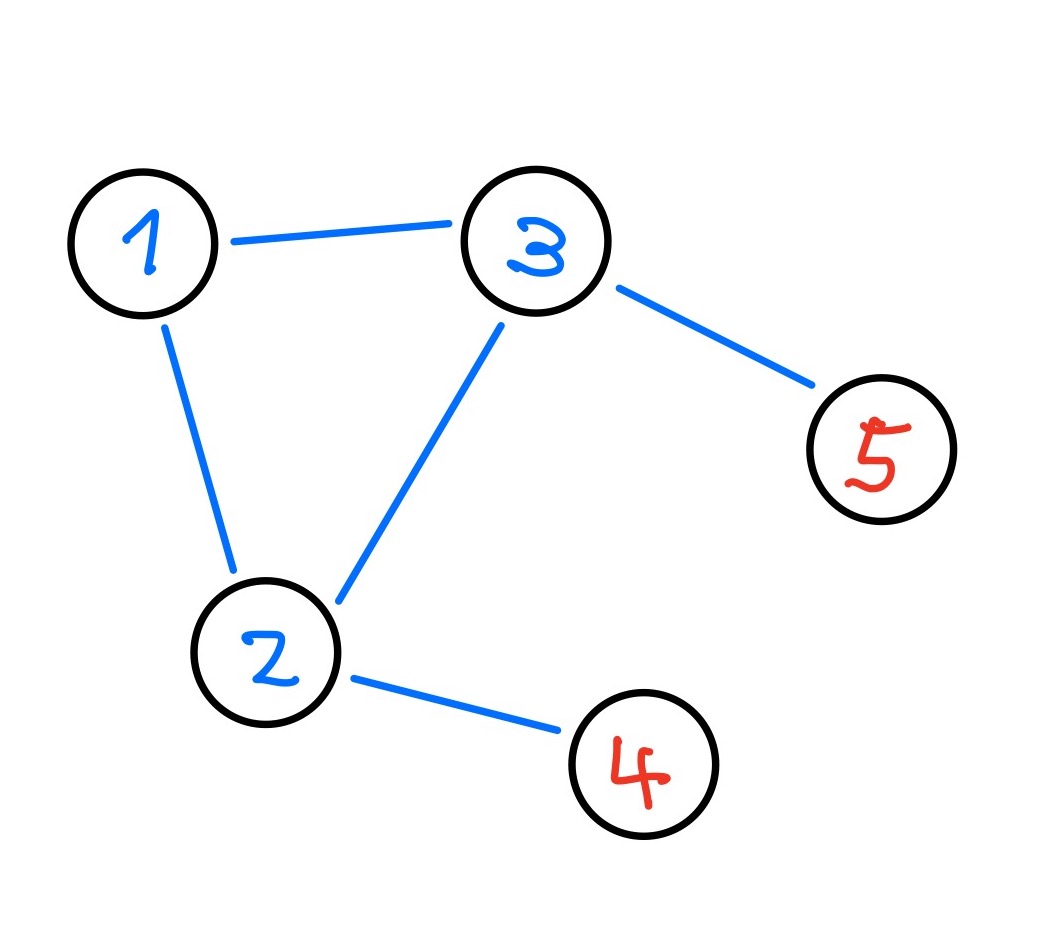

For instance, here's a great example from https://decentralizedthoughts.github.io/2020-12-12-raft-liveness-full-omission/

Let's say server 3 is the current leader. Server 4 will eventually start an election because it is unable to find the leader, causing Server 2 to transition to Unattached. Server 4 will not be able to receive enough votes to become leader, but Server 2 will be able to once its election timer expires. As Server 5 is also unable to communicate to Server 2, this kicks off a back and forth of leadership transition between Servers 2 and 3.

A - epoch 1 (disconnected) where leader is A

B, C, E - epoch 2 where leader is BD - epoch 0 (disconnected)

While network partitions may be the main issue we expect to encounter/mitigate impact for, it’s possible that bugs and user error create similar effects to network partitions that we need to guard against. For instance, a bug which causes fetch requests to periodically timeout or setting controller.quorum.fetch.timeout.ms and other related configs too low.

...

Raft equiv for metadata version?

If X nodes are upgraded and start ignoring vote requests and Y nodes continue to reply and transition to “Unattached” could we run into a scenario where we violate raft invariants (more than one leader) or cause extended quorum unavailability?

We can never have more than one leader - if Y nodes are enough to vote in a new leader, the resulting state change they undergo (e.g. to

Voted, toFollower) will causemaybeFireLeaderChangeto update the current leader’s state (e.g. toResigned) as needed.Proving that unavailability would remain the same or better w/ a mish-mash of upgraded nodes is a bit harder.

What happens if the leader is part of the Y nodes that will continue to respond to unnecessary vote requests?

If Y is a minority of the cluster, the current leader and minority of cluster may vote for this disruptive node to be the new leader but the node will never win the election. The disruptive node will keep trying with exponential backoff. At some point a majority of the cluster will not have heard from a leader for fetch.timeout.ms and will start participating in voting. A leader can be elected at this point. In the worst case this would extend the time we are leader-less by the length of the fetch timeout.

If Y is a majority of the cluster, we have enough nodes to potentially elect the disruptive node. (This is the same as the old behavior.) If a majority of votes were not received by the node, it will keep trying with exponential backoff. At some point the rest of the cluster will not have heard from a leader for fetch.timeout.ms and will start participating in voting. In the worst case this would extend the time we are leader-less by the length of the fetch timeout.

What happens if leader is part of the X nodes which will reject unnecessary vote requests?

If Y is a minority of the cluster, the minority of cluster may vote for this disruptive node to be the new leader but the node will never win the election. The disruptive node will keep trying with exponential backoff, increasing its epoch. If the current leader ever resigns or a majority of the cluster hasn’t heard from a leader, there’s a potential we will now vote for the node - but an election would have been necessary in this case anyways

If Y is a majority of the cluster, we have enough nodes to potentially elect the disruptive node. (This is the same as the old behavior.) If a majority of votes were not received by the node, it will keep trying with exponential backoff. The nodes in Y will transition to unattached after receiving the first vote request and potentially start their own elections. The nodes in X will still be receiving fetch responses from the current leader and will be rejecting all vote requests from nodes in Y. Although there must be at least one node in Y that is eligible to become leader (otherwise, Y couldn’t have been a majority of the cluster), if we suffer enough node failures in Y where Y is no longer a majority of the cluster, we get stuck. X (a minority of the cluster, but now needed to form a majority) stays connected to the current leader and never votes for an eligible leader in Y. No new commits can be made to the log. Check quorum would solve this case (leader resigns since it senses it no longer has a majority).

Test Plan

This will be tested with unit tests, integration tests, system tests, and TLA+. (todo)

...