...

When servers receive VoteRequests with the PreVote field set to true, they will respond with VoteGranted set to

trueif they are not a Follower and the epoch and offsets in the Pre-Vote request satisfy the same requirements as a standard votefalseif otherwise

...

Yes. If a leader is unable to receive fetch responses from a majority of servers, it can impede followers that are able to communicate with it from voting in an eligible leader that can communicate with a majority of the cluster. This is the reason why an additional "Check Quorum" safeguard is needed which is what KAFKA-15489 implements. Check Quorum ensures a leader steps down if it is unable to receive fetch responses from a majority of servers.

Are Why do we need Followers to reject Pre-Vote requests? Shouldn't the Pre-Vote and Check Quorum mechanism be enough to prevent disruptive servers?

Not quite. We also want servers to reject Pre-Vote requests received If servers are still within their fetch timeout. This basically just means any server currently in Follower state should reject Pre-Votes because , this means they have recently heard from a leader recently so there is no need to consider electing . It can be less disruptive if they refuse to vote for a new leader while still following an existing one.

The following scenarios show why just Pre-Vote and Check Quorum (without Followers rejecting Pre-Votes) are not enough. (The second scenario will be covered by KIP-853: KRaft Controller Membership Changes or future work if not covered here.)

- Scenario A: We can image a scenario where two servers (S1 & S2) are both up-to-date on the log but unable to maintain a stable connection with each other. Let's say S1 is the leader. When S2 loses connectivity with S1 and is unable to find the leader, it will start a Pre-Vote. Since its log may be up-to-date, a majority of the quorum may grant the Pre-Vote and S2 may then start and win a standard vote to become the new leader. This is already a disruption since S1 was serving the majority of the quorum well as a leader. When S1 loses connectivity with S2 again it will start an election, and this bouncing of leadership could continue.

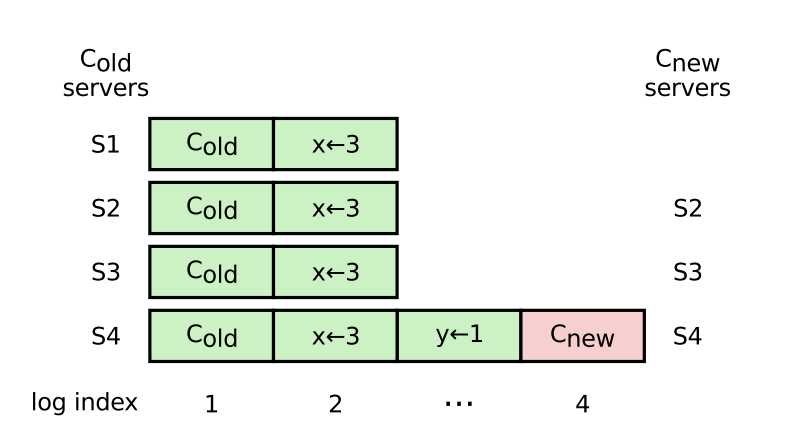

- Scenario B: A server in an old configuration (e.g. S1 in the below diagram pg. 41) starts a “pre-vote” when the leader is temporarily unavailable, and is elected because it is as up-to-date as the majority of the quorum. The Raft paper argues we can not rely on the original leader replicating fast enough to remove S1 from the quorum - we can imagine some bug/limitation with quorum reconfiguration causes S1 to continuously try to start elections when the leader is trying to remove it from the quorum. This scenario will be covered by KIP-853: KRaft Controller Membership Changes or future work if not covered here.

The logic now looks like the following for servers receiving VoteRequests with PreVote set to true:

...

trueif they are not a Follower and the epoch and offsets in the Pre-Vote request satisfy the same requirements as a standard votefalseif they are a Follower or the epoch and end offsets in the Pre-Vote request do not satisfy the requirements(Not in scope) To address the disk loss and 'Servers in new configuration' scenario, one option would be to have servers respondfalseto vote requests from servers that have a new disk and haven't caught up on replication

Compatibility

We can gate Pre-Vote with a new VoteRequest and VoteResponse version. Instead of sending a Pre-Vote, a server will transition from Prospective immediately to Candidate if it knows of other servers which do not support Pre-Vote yet. This will result in the server sending standard votes which are understood by servers on older software versions.

...