...

Current state: Under Discussion

Discussion thread: here

JIRA: KAFKA-13361

Please keep the discussion on the mailing list rather than commenting on the wiki (wiki discussions get unwieldy fast).

...

It adds the following options into the Producer, Broker, and Topic configurations:

- compression.gzip.buffer: the size of the buffer that feeds raw input into the Deflater or receives the uncompressed output from the Deflater. (available: [512, ), default: 8192 (= 8 KB).)
- compression.snappy.block: the block size that snappy uses. (available: [1024, ), default: 32768 (= 32 KB).)
- compression.lz4.block: the block size that lz4 uses. (available: [4, 7], meaning 64 KB, 256 KB, 1 MB, and 4 MB respectively, default: 4.)
- compression.zstd.window: enables long mode; the log of the window size that zstd uses to memorize the data being compressed. (available: 0 or [10, 22], default: 0 (disables long mode).)
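As a rough illustration of how these options might be set and validated on the producer side, here is a minimal sketch. It uses only `java.util.Properties` so that it runs without the Kafka client on the classpath. The option names and ranges are the ones proposed above; the class and method names are hypothetical and are not part of Kafka.

```java
import java.util.Properties;

// Sketch only: the option keys come from this proposal; the class and
// method names are hypothetical helpers, not Kafka APIs.
final class CompressionOptionsSketch {

    // Build producer properties that select gzip with the given buffer size.
    static Properties gzipProducerProps(int gzipBuffer) {
        if (gzipBuffer < 512) {
            throw new IllegalArgumentException("compression.gzip.buffer must be >= 512");
        }
        Properties props = new Properties();
        props.setProperty("compression.type", "gzip");
        props.setProperty("compression.gzip.buffer", Integer.toString(gzipBuffer));
        return props;
    }

    // Range checks mirroring the "available" ranges listed above.
    static boolean isValidSnappyBlock(int value) {
        return value >= 1024;
    }

    static boolean isValidLz4Block(int value) {
        return value >= 4 && value <= 7;
    }

    static boolean isValidZstdWindow(int value) {
        return value == 0 || (value >= 10 && value <= 22);
    }

    public static void main(String[] args) {
        Properties props = gzipProducerProps(16384);
        System.out.println(props.getProperty("compression.gzip.buffer"));
    }
}
```

The resulting `Properties` object could then be passed to a producer constructor together with the usual connection and serializer settings.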

...

If the user uses gzip, the buffer size of the `BufferedInputStream` that reads the decompressed data from `GZIPInputStream` is affected by `compression.gzip.buffer`. In other cases, there are no explicit changes.
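The read path described above can be sketched with the JDK classes alone. This is an illustration under assumptions, not Kafka's actual implementation: the class name, method names, and the way `bufferSize` is passed in are hypothetical, and the buffer size simply stands in for the value of `compression.gzip.buffer`.

```java
import java.io.BufferedInputStream;
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

// Sketch only: hypothetical helper showing where a configurable buffer
// size would plug into the gzip write and read paths.
final class GzipBufferSketch {

    // Compress with a GZIPOutputStream whose internal buffer is bufferSize bytes.
    static byte[] compress(byte[] raw, int bufferSize) {
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(sink, bufferSize)) {
            gz.write(raw);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return sink.toByteArray();
    }

    // Read decompressed bytes through a BufferedInputStream of bufferSize bytes.
    static byte[] decompress(byte[] compressed, int bufferSize) {
        try (InputStream in = new BufferedInputStream(
                new GZIPInputStream(new ByteArrayInputStream(compressed)), bufferSize)) {
            return in.readAllBytes();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        byte[] raw = "example record payload".getBytes(StandardCharsets.UTF_8);
        byte[] restored = decompress(compress(raw, 8192), 8192);
        System.out.println(new String(restored, StandardCharsets.UTF_8));
    }
}
```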

...

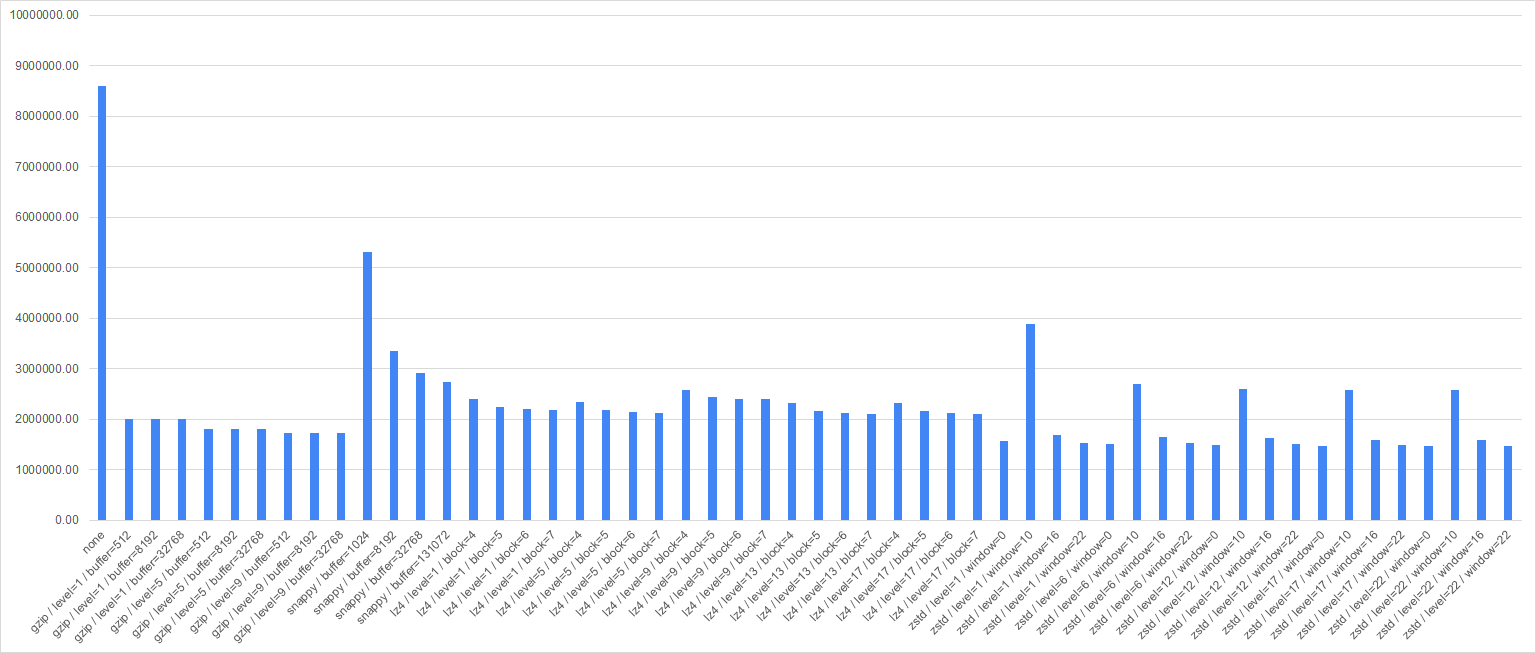

Two microbenchmarks were run to see how each configuration option interacts with the other one; each benchmark measured the read or write ops/sec for various compression options.

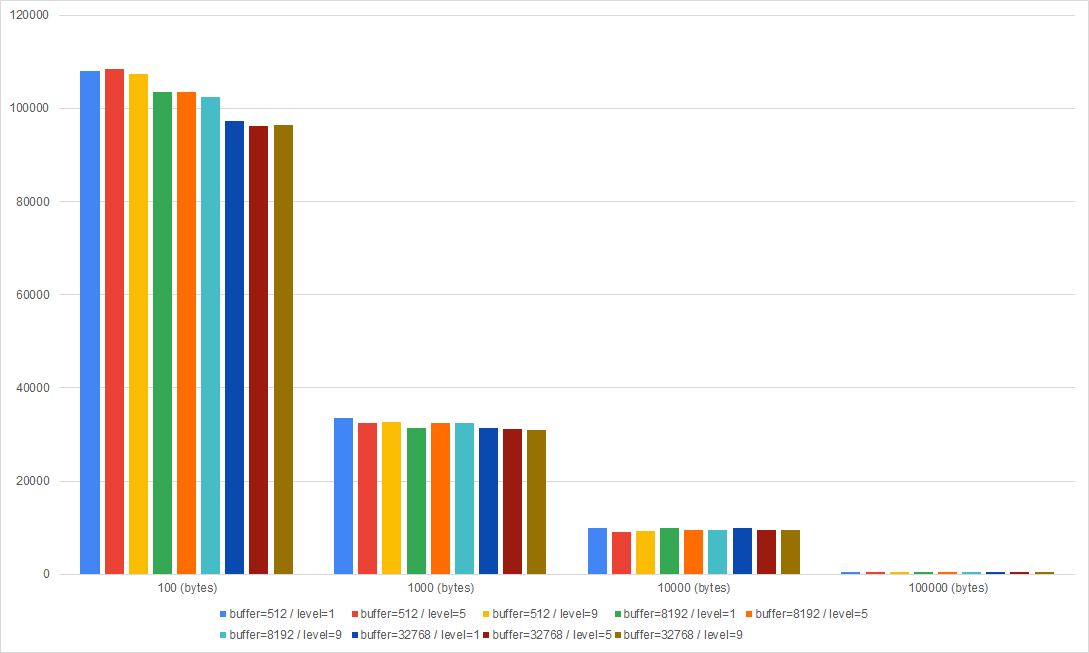

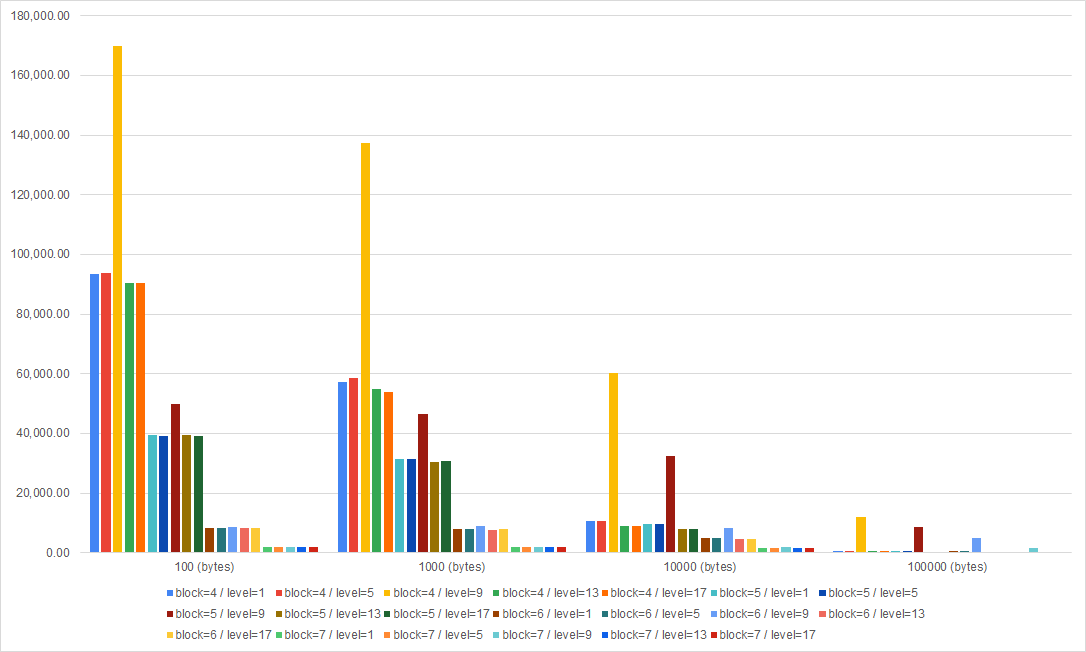

Gzip

(Left: Gzip (Write ops/sec), Right: Gzip (Read ops/sec))

Compression level and buffer size did not significantly affect either writing or reading speed; the most significant factor was data size. The compression parameters mattered only when the data size was very small, and even then the difference was only about 11%.

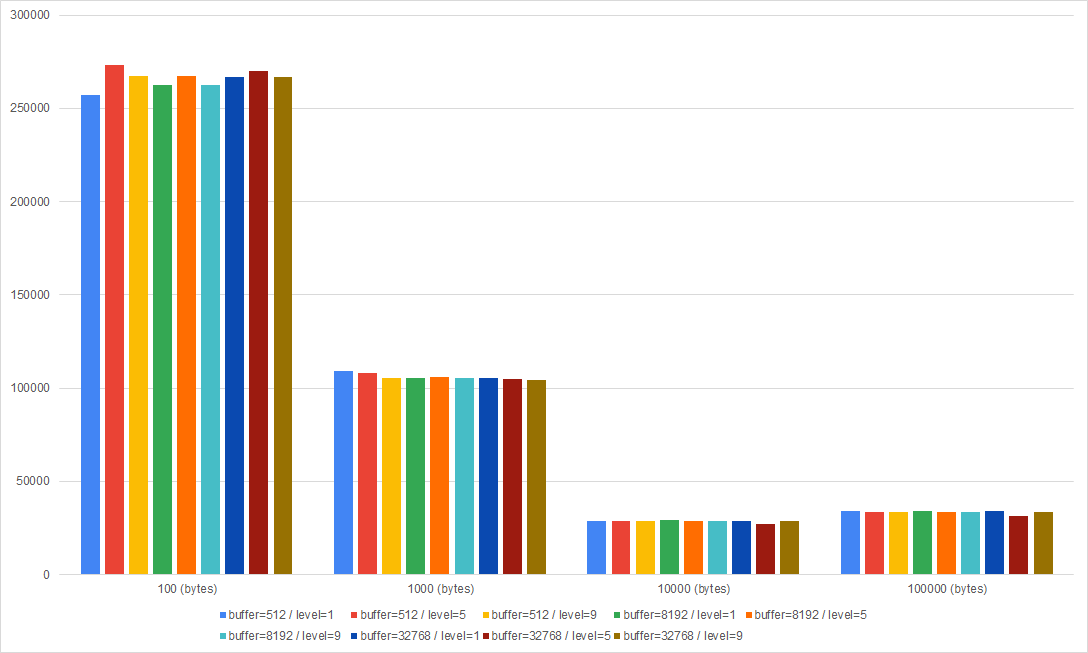

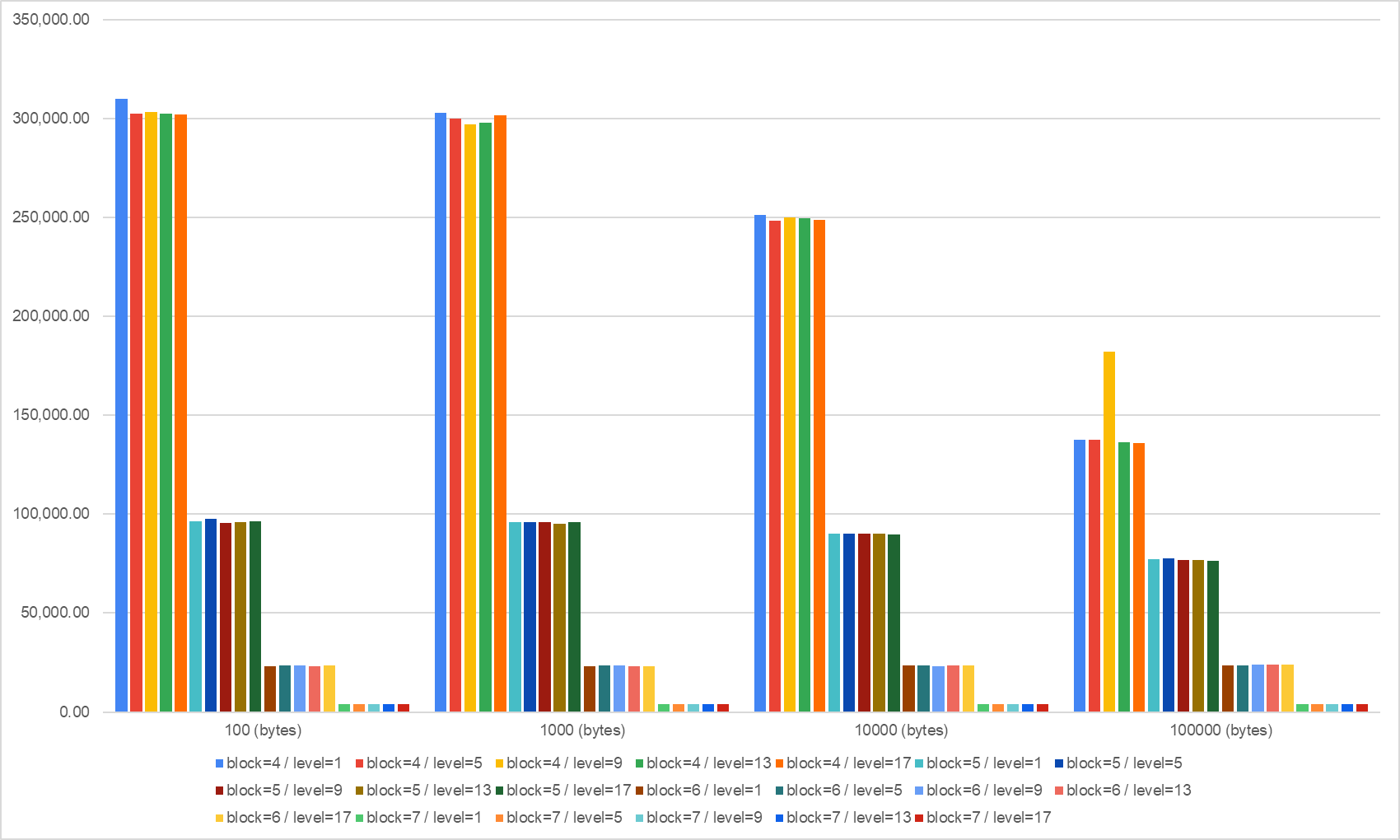

Snappy

(Left: Snappy (Write ops/sec), Right: Snappy (Read ops/sec))

Changing the block size did not drastically affect the writing speed. However, keeping a too-small block size for large records degrades it. For example, using a block size of 1024 with 100-byte data degrades the compression speed by only 0.01%. In contrast, if the data size is 10000 or 100000 bytes, the gap in writing speed is 30% or 38%, respectively.

If the record size is very small (for example, less than 1 KB), reducing the block size below the 32 KB default would be reasonable for both compression speed and memory footprint. For larger records, however, reducing the block size is not recommended.

Block size also had some impact on reading speed: as the data size increased, a small block size degraded read performance.

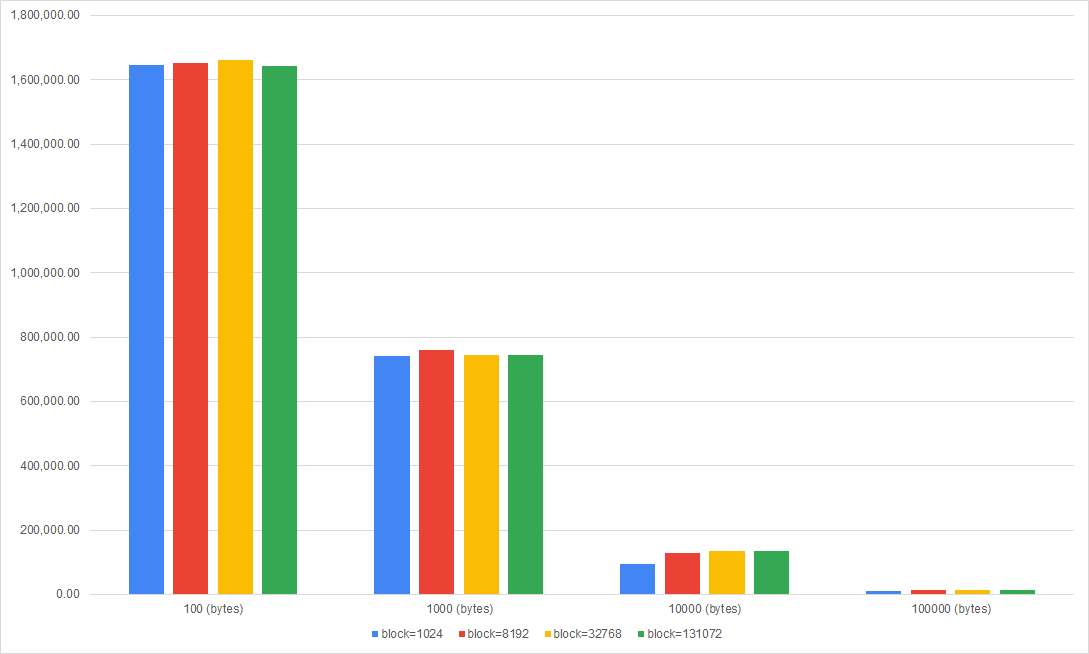

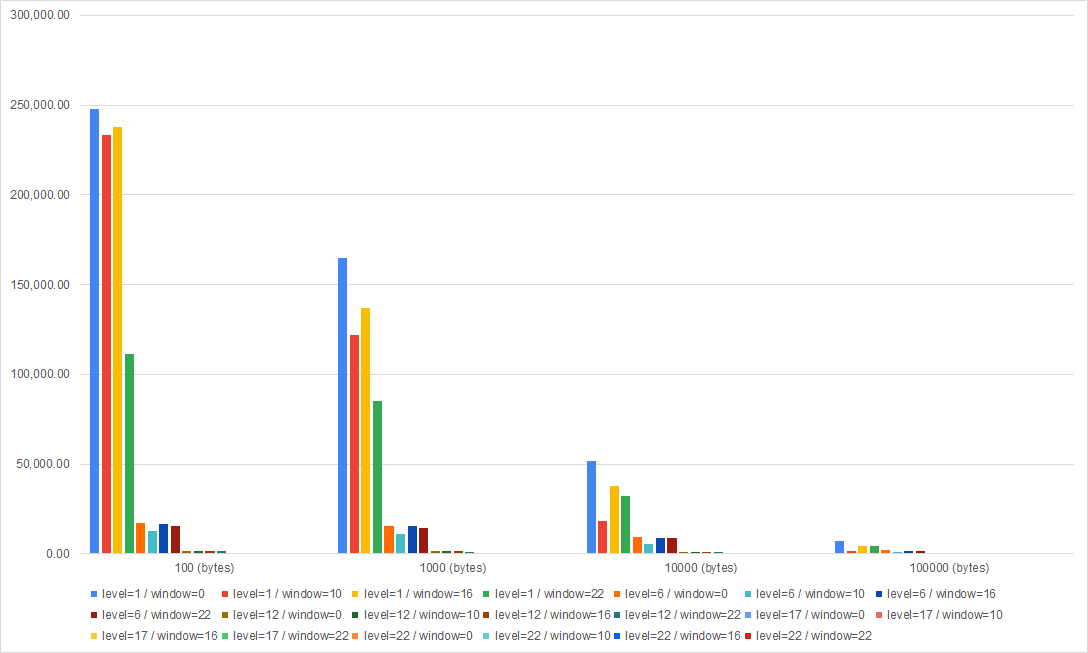

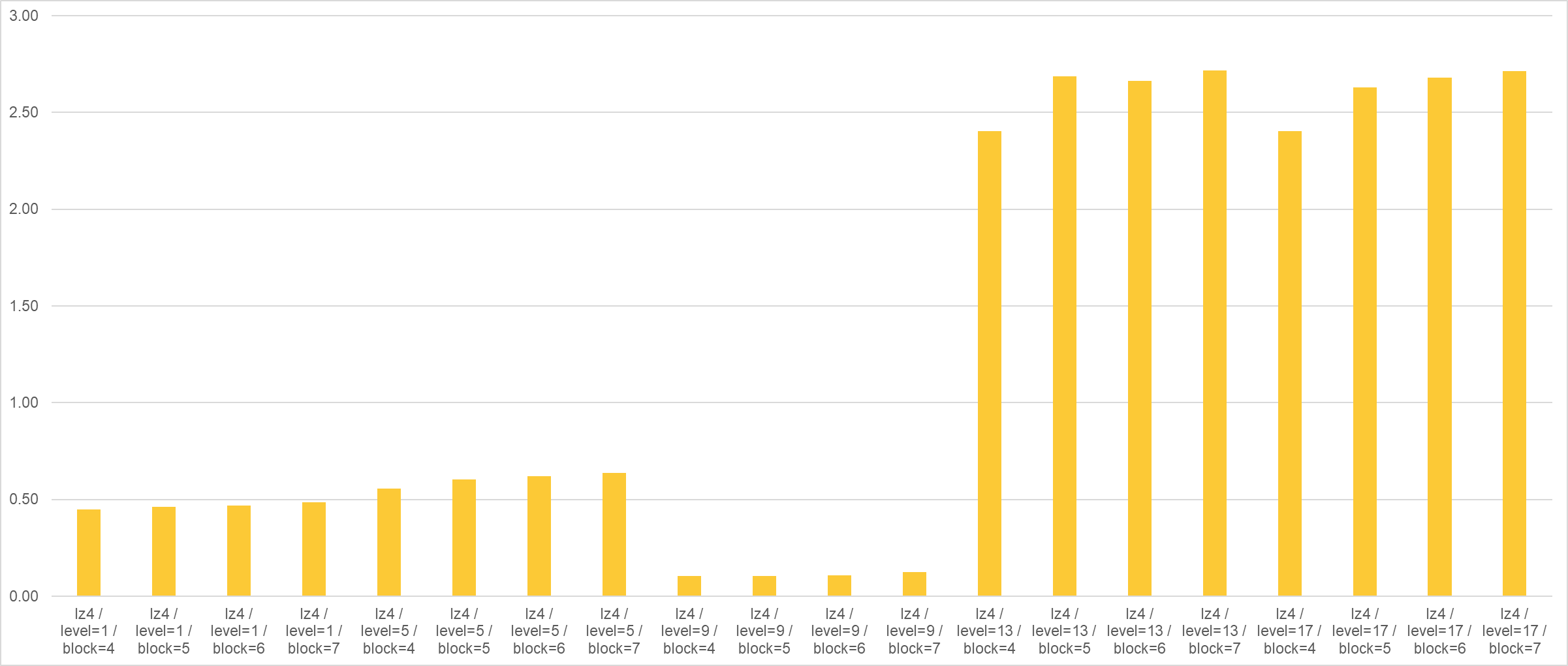

LZ4

(Left: LZ4 (Write ops/sec), Right: LZ4 (Read ops/sec))

Level 9 (the default) always performed best. The differences between compression levels were negligible, particularly as the block size increased; in those cases, level 9 showed a speed similar to the other levels.

Overall, as the block size increased, the writing speed decreased for all data sizes. In contrast, the reading speed was almost the same for data sizes of 1,000 bytes or less. If the block size is too big, the read ops/sec also degrades.
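For reference, the mapping from a `compression.lz4.block` value to a block size in bytes (4 to 7 meaning 64 KB, 256 KB, 1 MB, and 4 MB) can be written as a single shift. The sketch below is only a worked version of that arithmetic; the class and method names are hypothetical.

```java
// Sketch only: hypothetical helper converting the proposed
// compression.lz4.block value into a block size in bytes.
final class Lz4BlockSizeSketch {

    // Values 4..7 map to 64 KB, 256 KB, 1 MB, 4 MB, i.e. 2^(2 * value + 8) bytes.
    static int blockSizeBytes(int blockValue) {
        if (blockValue < 4 || blockValue > 7) {
            throw new IllegalArgumentException("compression.lz4.block must be in [4, 7]");
        }
        return 1 << (2 * blockValue + 8);
    }

    public static void main(String[] args) {
        for (int value = 4; value <= 7; value++) {
            System.out.println(value + " -> " + blockSizeBytes(value) + " bytes");
        }
    }
}
```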

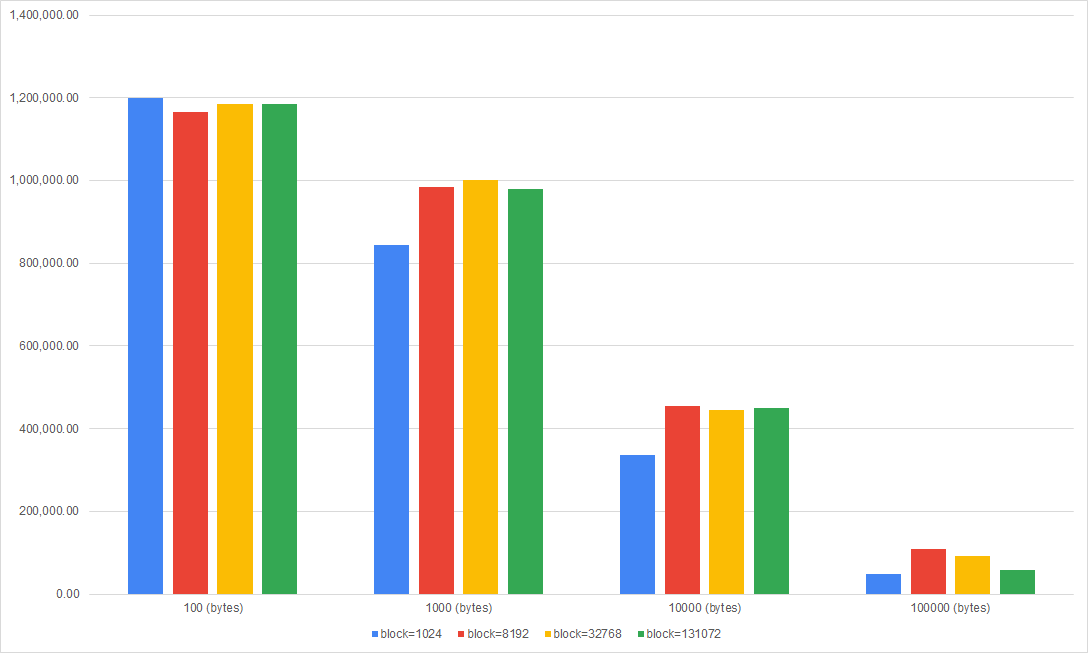

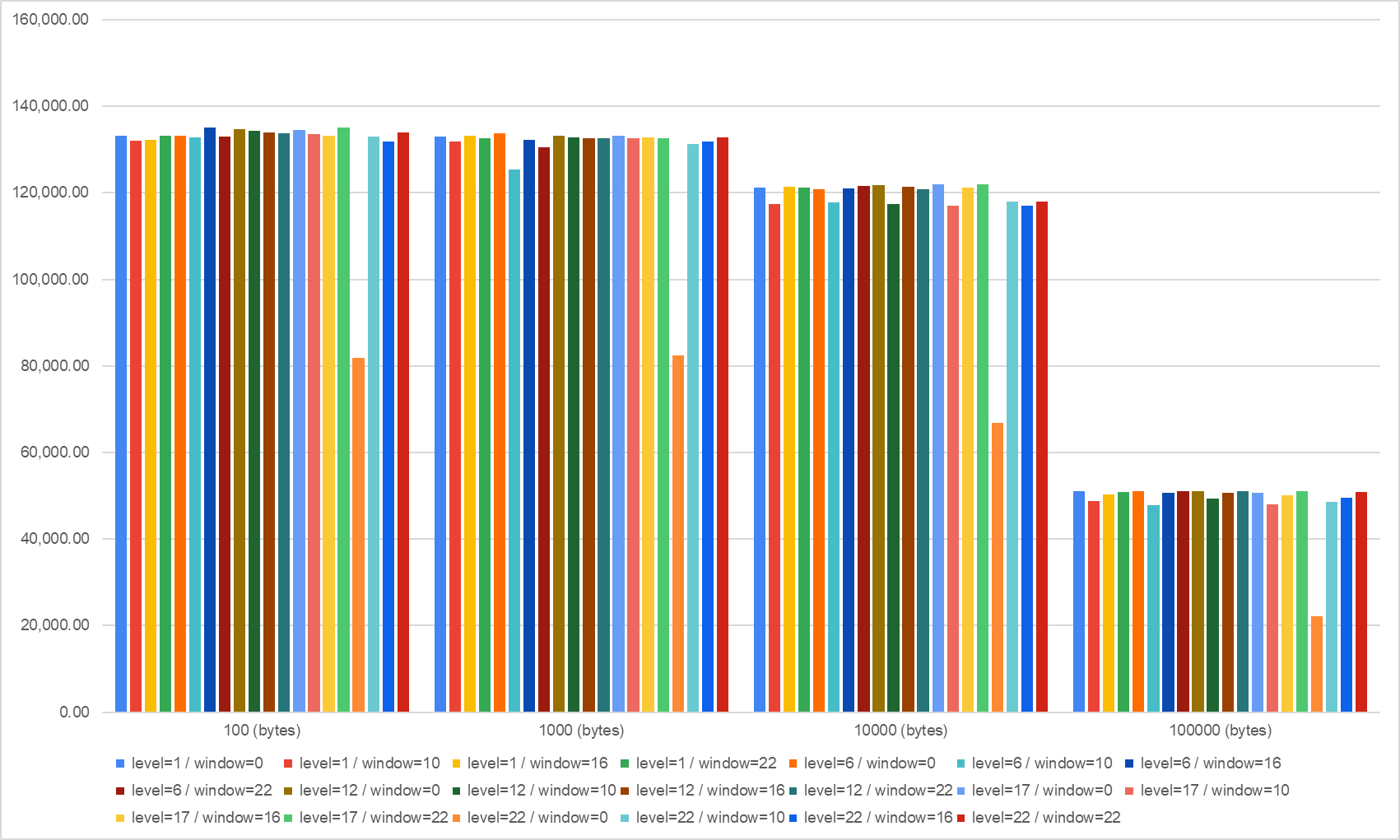

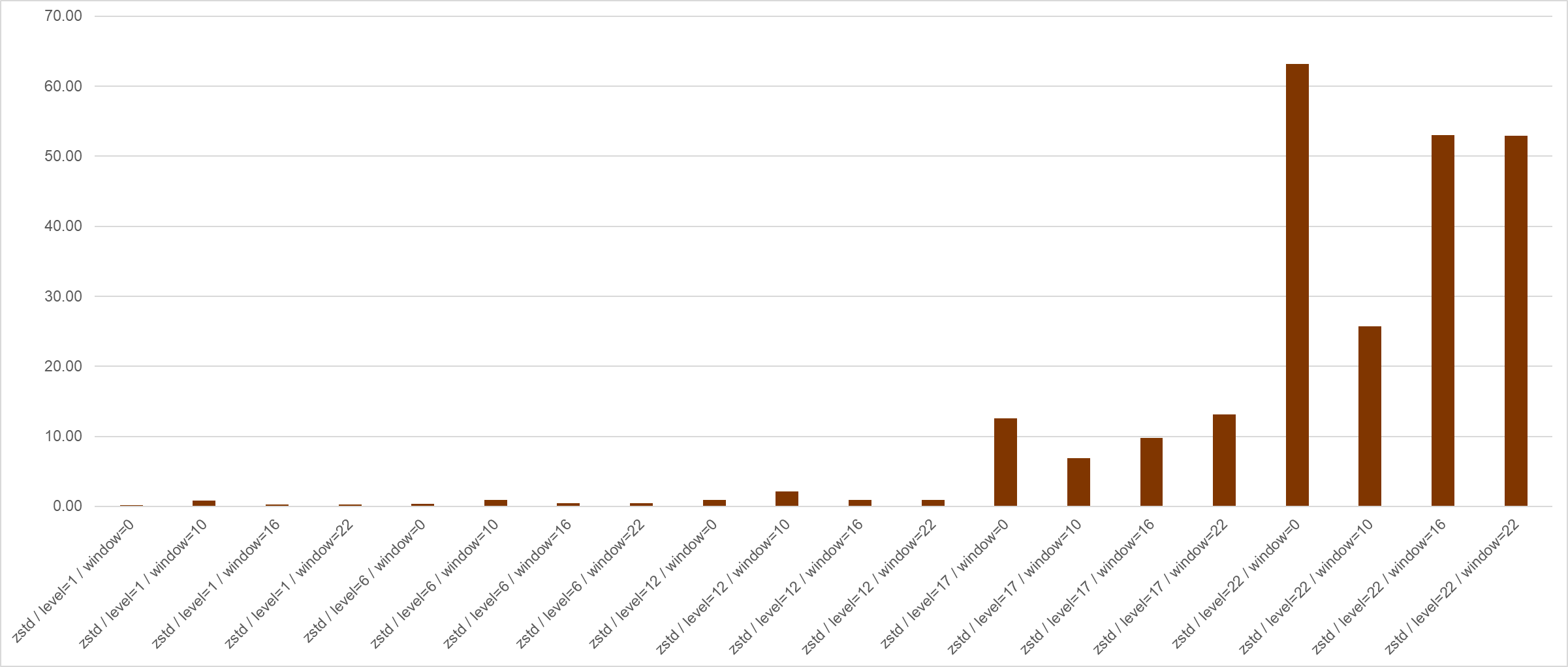

Zstd

(Left: Zstd (Write ops/sec), Right: Zstd (Read ops/sec))

For the writing speed, the ops/sec differences between the levels disappear as the data size increases. Disabling long mode performed better than enabling it; with long mode turned on, a windowLog of 16 gave the best result.
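To make the window values concrete: zstd's windowLog is the base-2 logarithm of the window size, so a `compression.zstd.window` of 16 corresponds to a 64 KB window and 22 to a 4 MB window, while 0 leaves long mode off. The following sketch only performs that conversion; the class and method names are hypothetical.

```java
import java.util.OptionalLong;

// Sketch only: hypothetical helper converting the proposed
// compression.zstd.window value into a window size in bytes.
final class ZstdWindowSketch {

    // Returns empty when long mode is disabled (value 0),
    // otherwise 2^windowLog bytes for windowLog in [10, 22].
    static OptionalLong windowBytes(int windowLog) {
        if (windowLog == 0) {
            return OptionalLong.empty();
        }
        if (windowLog < 10 || windowLog > 22) {
            throw new IllegalArgumentException("compression.zstd.window must be 0 or in [10, 22]");
        }
        return OptionalLong.of(1L << windowLog);
    }

    public static void main(String[] args) {
        System.out.println(windowBytes(0).isPresent());
        System.out.println(windowBytes(16).getAsLong());
    }
}
```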

...

Real World Dataset

A benchmark with a real-world dataset was run to see the actual compression ratio and speed.

...

The dataset consists of JSON data files collected from IoT sensors. The benchmark measured the average compressed size and elapsed time over 100 batches, each consisting of 10 JSON files from the dataset.
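A measurement loop of this kind could look roughly like the sketch below, shown for gzip only because the JDK ships it. This is not the harness used for the results in this section: the class and method names are hypothetical, and the payload in `main` is a made-up placeholder rather than the IoT dataset. Since `GZIPOutputStream` has no level parameter, the sketch sets the level on the protected `def` field from an anonymous subclass.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.Collections;
import java.util.List;
import java.util.zip.GZIPOutputStream;

// Sketch only: hypothetical harness measuring average compressed size
// and elapsed time per batch for one gzip level / buffer combination.
final class GzipBatchBenchSketch {

    static byte[] gzip(byte[] raw, int level, int bufferSize) {
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        // The initializer block sets the level on the inherited Deflater.
        try (GZIPOutputStream gz = new GZIPOutputStream(sink, bufferSize) {
            { def.setLevel(level); }
        }) {
            gz.write(raw);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return sink.toByteArray();
    }

    // Returns {average compressed bytes, average elapsed nanoseconds} per batch.
    static double[] measure(List<byte[]> batches, int level, int bufferSize) {
        if (batches.isEmpty()) {
            throw new IllegalArgumentException("at least one batch is required");
        }
        long totalBytes = 0;
        long totalNanos = 0;
        for (byte[] batch : batches) {
            long start = System.nanoTime();
            byte[] compressed = gzip(batch, level, bufferSize);
            totalNanos += System.nanoTime() - start;
            totalBytes += compressed.length;
        }
        int n = batches.size();
        return new double[] {(double) totalBytes / n, (double) totalNanos / n};
    }

    public static void main(String[] args) {
        // Placeholder payload, NOT the IoT dataset used in the benchmark.
        byte[] batch = "{\"sensor\":\"s1\",\"value\":42}".repeat(1000)
                .getBytes(StandardCharsets.UTF_8);
        List<byte[]> batches = Collections.nCopies(100, batch);
        for (int level : new int[] {1, 9}) {
            double[] result = measure(batches, level, 8192);
            System.out.printf("level=%d avgBytes=%.0f avgNanos=%.0f%n",
                    level, result[0], result[1]);
        }
    }
}
```

A real comparison would also need warm-up iterations and repeated runs, which is what a microbenchmark framework provides.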

(Compressed Size in bytes, overall)

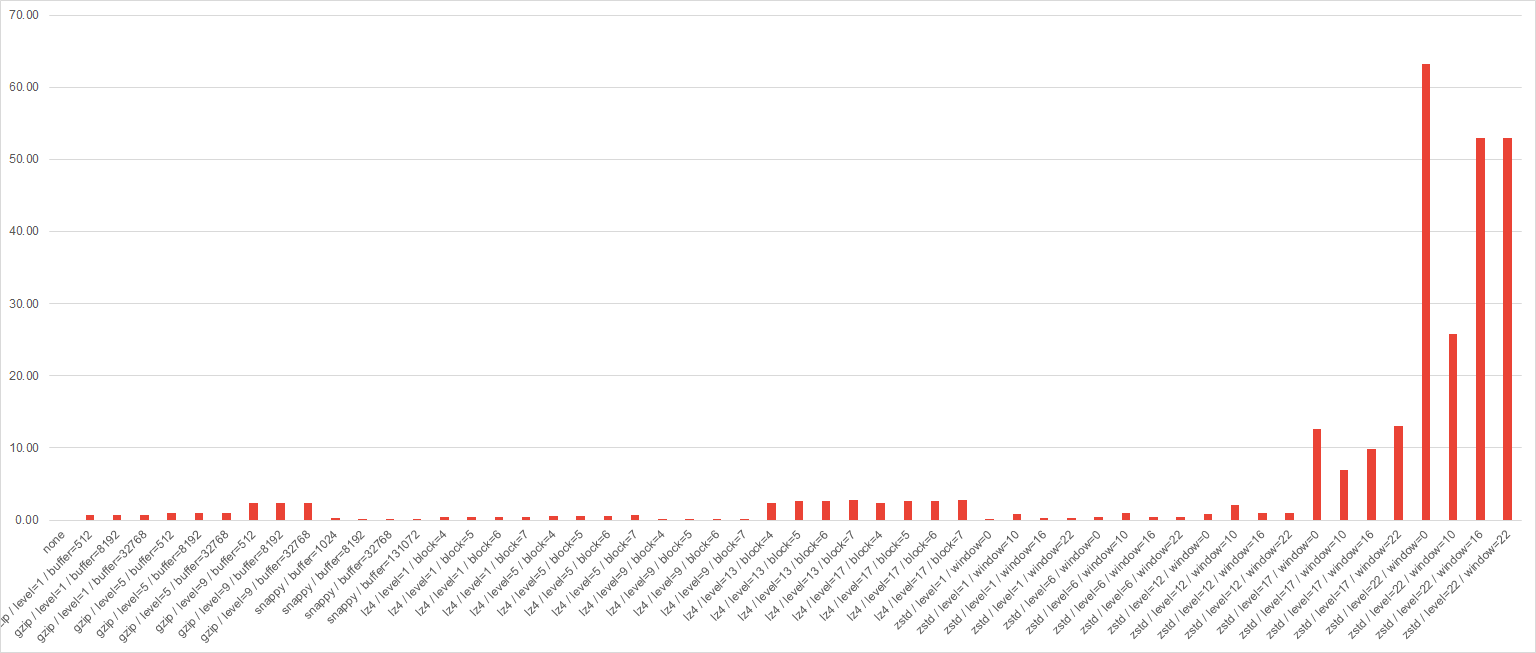

(Elapsed in sec, overall)

Since the variance in elapsed time is so large, the following sections show a detailed graph per codec.

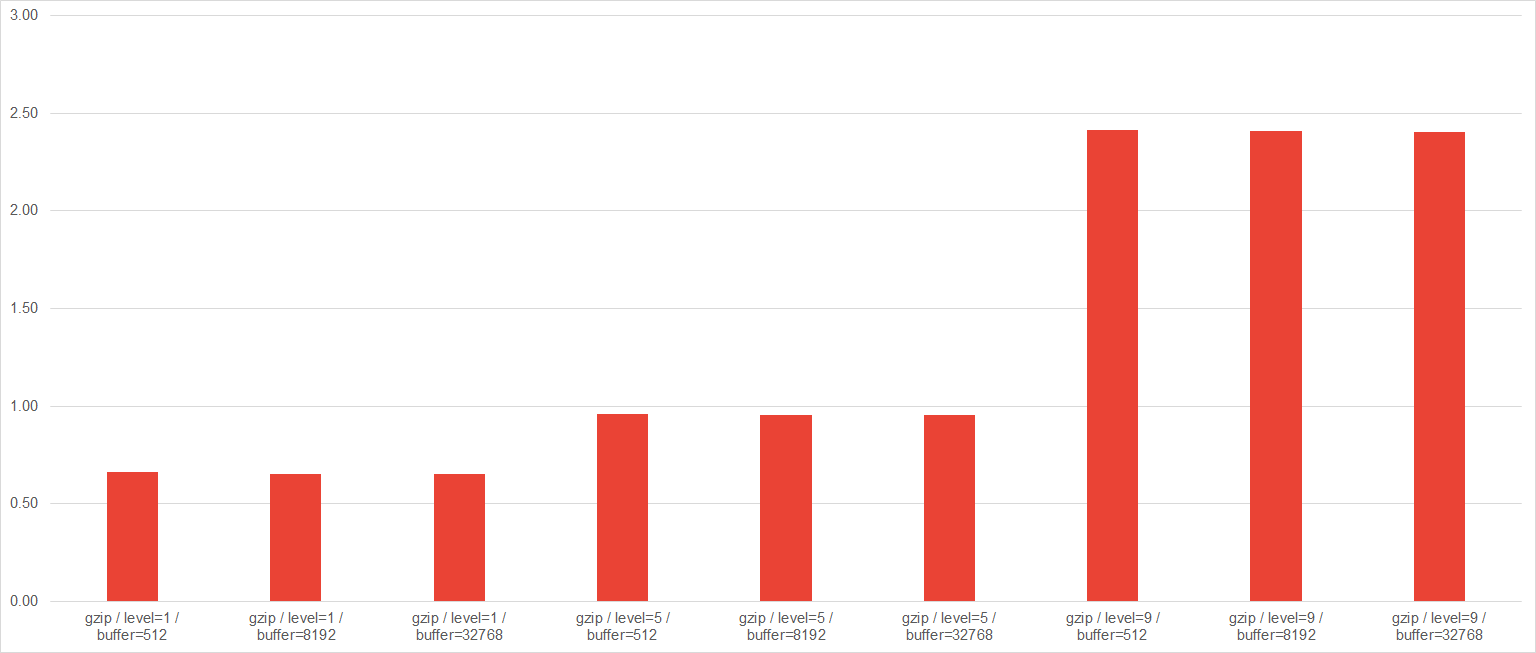

Gzip

(Gzip Elapsed time, detail)

Gzip took second place in terms of compression ratio. However, although the compressed size varies by less than 15%, the elapsed time increases with the compression level, regardless of the buffer size. So, setting the level to 1 and assigning a large enough buffer to the consumer would be a preferable strategy for both producing and consuming.

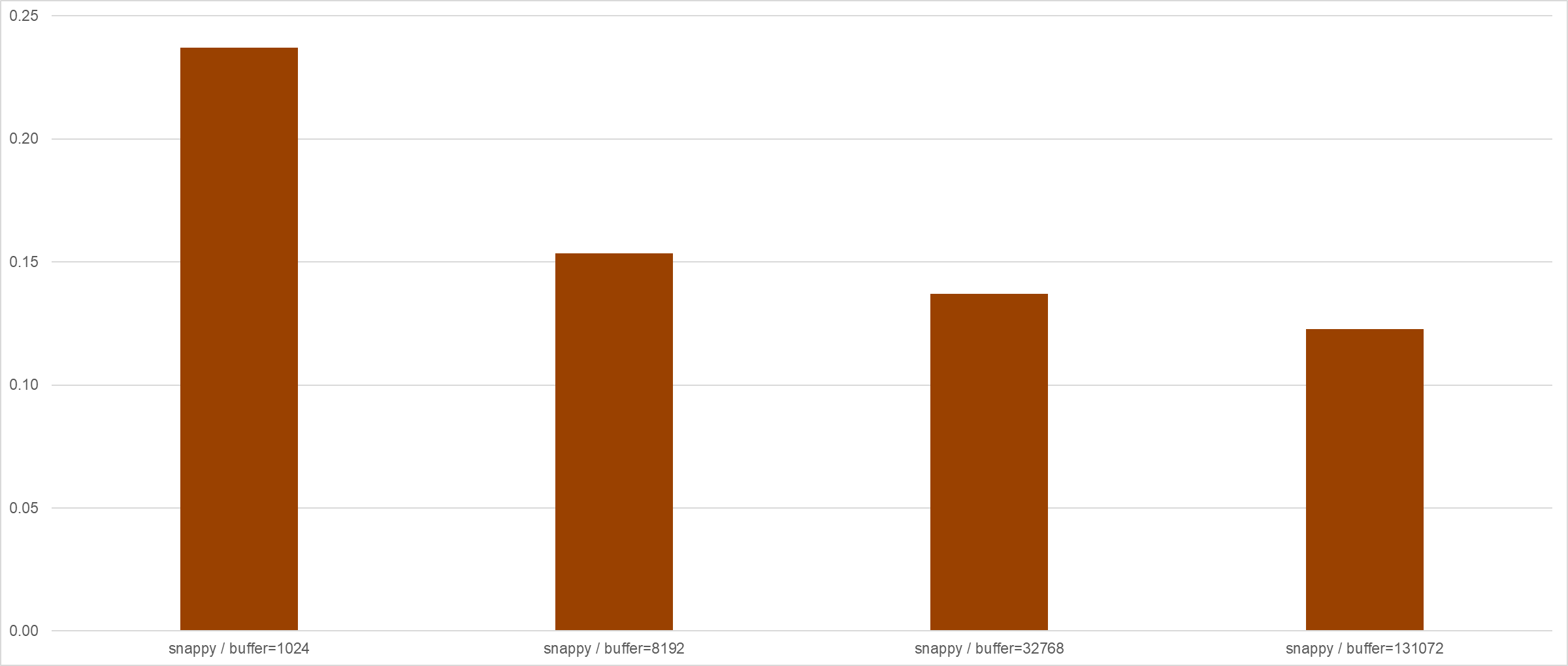

Snappy

(Snappy Elapsed time, detail)

Snappy came last in terms of compression ratio. The bigger the block size snappy uses, the better both the compression ratio and the speed. So, finding the optimal block size seems to be the essential part of tuning snappy.

LZ4

(LZ4 Elapsed time, detail)

LZ4 took third place in compression ratio. The default configuration (level = 9, block = 4) outperformed all the other configurations in both speed and size.

...

In general, when the user has to use LZ4, the default configuration would be the most reasonable choice. However, if the user needs a smaller compressed size, enlarging the block size and lowering the level would be an option.

Zstd

(Zstd Elapsed time, detail)

Zstd outperformed all the other codecs in both compression ratio and speed. Comparing gzip / level=9 / buffer=32768 (the best configuration among the other codecs) with zstd / level=1 / window=0 (the most speed-first zstd configuration), zstd beat gzip with only 90% of the size and 7% of the compression time.

Turning on long mode with a small window size (=10) helped neither size nor speed: as the compression level increased, the compression ratio improved, but the size gain over zstd / level=1 / window=0 was small (less than 10%) while compression was overwhelmingly slower. If a smaller compressed size is necessary at the cost of speed, with zstd / level=1 fixed, enlarging the window size to 22 would be a good approach: it doubles the compression time but is still much faster than the other configurations.

Conclusion

In general, lowering the level and making the buffer/block/window size large enough for the given data size gave the most satisfactory result. This agrees with the results from the randomly generated dataset and with the producer/consumer benchmark conducted in KIP-390, which recommended lowering the compression level to boost producing speed.

...

Since the default values of newly introduced options are all identical to the currently used values, there are no compatibility or migration issues.

Rejected Alternatives

Recompression is enabled even when the detailed codec configuration is different

To enable this feature, the detailed compression configuration would have to be stored in the record batch (currently, only the codec is stored). This requires modifying the record batch's binary format.

...