Authors: Sriharsha Chintalapani, Satish Duggana, Suresh Srinivas, Ying Zheng (alphabetical order by the last names)

Status

Current State: "Accepted"

Discussion Thread: Discuss Thread here

JIRA: KAFKA-7739

Motivation

Kafka is an important part of data infrastructure and is seeing significant adoption and growth. As the Kafka cluster size grows and more data is stored in Kafka for a longer duration, several issues related to scalability, efficiency, and operations become important to address.

...

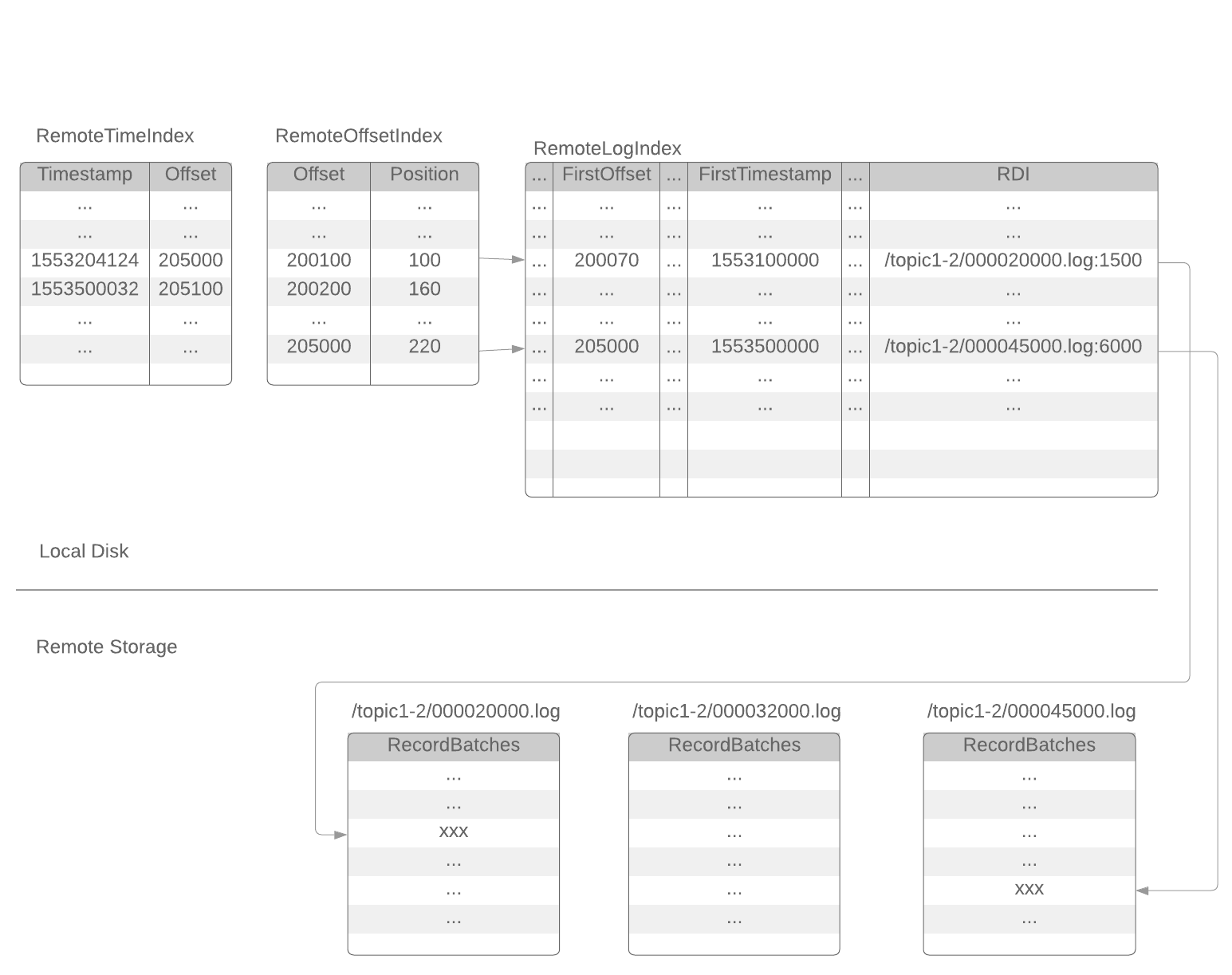

In the tiered storage approach, a Kafka cluster is configured with two tiers of storage: local and remote. The local tier is the same as the current Kafka, which uses the local disks on the Kafka brokers to store the log segments. The new remote tier uses systems such as HDFS or S3 to store the completed log segments. Two separate retention periods are defined, one for each tier. With the remote tier enabled, the retention period for the local tier can be significantly reduced from days to a few hours. The retention period for the remote tier can be much longer: days, or even months. When a log segment is rolled on the local tier, it is copied to the remote tier along with the corresponding indexes. Latency-sensitive applications perform tail reads and are served from the local tier, leveraging the existing Kafka mechanism of efficiently using the page cache to serve the data. Backfill and other applications recovering from a failure that need data older than what is in the local tier are served from the remote tier.

...

Tiered storage does not support compacted topics. A topic created with the effective value of remote.log.storage.enable as true cannot change its retention policy from delete to compact.

...

- receives callback events for leadership changes and stop/delete events of topic partitions on a broker.

- delegates copy, read, and delete of topic partition segments to a pluggable storage manager(viz RemoteStorageManager) implementation and maintains respective remote log segment metadata through RemoteLogMetadataManager.

`RemoteLogManager` is an internal component and it is not a public API.

`RemoteStorageManager` is an interface to provide the lifecycle of remote log segments and indexes. More details about how we arrived at this interface are discussed in the document. We will provide a simple implementation of RSM to get a better understanding of the APIs. HDFS and S3 implementations are planned to be hosted in external repos and will not be part of the Apache Kafka repo. This is in line with the approach taken for Kafka connectors.

...

RLM creates tasks for each leader or follower topic partition, which are explained in detail here.

- RLM Leader Task

- It checks for rolled over LogSegments (which have the last message offset less than last stable offset of that topic partition) and copies them along with their offset/time/transaction/producer-snapshot indexes and leader epoch cache to the remote tier. It also serves the fetch requests for older data from the remote tier. Local logs are not cleaned up till those segments are copied successfully to remote even though their retention time/size is reached.
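The copy condition of the leader task can be sketched as follows. This is a minimal illustration, not the RemoteLogManager implementation: `Segment` and `eligibleForCopy` are hypothetical names, and the input is assumed to contain only rolled-over (non-active) segments.

```java
import java.util.ArrayList;
import java.util.List;

final class CopyCandidateSketch {
    /** Hypothetical stand-in for a rolled-over local log segment. */
    static final class Segment {
        final long baseOffset;
        final long lastOffset;

        Segment(long baseOffset, long lastOffset) {
            this.baseOffset = baseOffset;
            this.lastOffset = lastOffset;
        }
    }

    /** Returns the segments whose last offset is below the last stable offset. */
    static List<Segment> eligibleForCopy(List<Segment> rolledSegments, long lastStableOffset) {
        List<Segment> eligible = new ArrayList<>();
        for (Segment segment : rolledSegments) {
            if (segment.lastOffset < lastStableOffset) {
                eligible.add(segment);
            }
        }
        return eligible;
    }

    public static void main(String[] args) {
        List<Segment> rolled = List.of(new Segment(0, 99), new Segment(100, 199));
        // With a last stable offset of 150, only the first segment qualifies.
        System.out.println(eligibleForCopy(rolled, 150).size());
    }
}
```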

...

1) Retrieve the Earliest Local Offset (ELO) and the corresponding leader epoch (ELO-LE) from the leader with a ListOffset request (timestamp = -4)

2) Truncate local log and local auxiliary state

...

Let us discuss a few cases that a follower can encounter while it tries to replicate from the leader and build the auxiliary state from remote storage.

OMTS : OffsetMovedToTieredStorage

ELO : Earliest-Local-Offset

...

| Broker A (Leader) | Broker B (Follower) | Remote Storage | RL metadata storage |
|---|---|---|---|
| 3: msg 3 LE-1<br>4: msg 4 LE-1<br>5: msg 5 LE-2<br>6: msg 6 LE-2<br>7: msg 7 LE-3 (HW)<br>leader_epochs<br>LE-0, 0<br>LE-1, 3<br>LE-2, 5<br>LE-3, 7 | 1. Fetch LE-1, 0<br>2. Receives OMTS<br>3. Receives ELO 3, LE-1<br>4. Fetch remote segment info and build local leader epoch sequence until ELO<br>leader_epochs<br>LE-0, 0<br>LE-1, 3 | seg-0-2, uuid-1<br>log:<br>0: msg 0 LE-0<br>1: msg 1 LE-0<br>2: msg 2 LE-0<br>epochs:<br>LE-0, 0<br>seg 3-5, uuid-2<br>log:<br>3: msg 3 LE-1<br>4: msg 4 LE-1<br>5: msg 5 LE-2<br>epochs:<br>LE-0, 0<br>LE-1, 3<br>LE-2, 5 | seg-0-2, uuid-1<br>segment epochs<br>LE-0, 0<br>seg-3-5, uuid-2<br>segment epochs<br>LE-1, 3<br>LE-2, 5 |

...

| Broker A (Leader) | Broker B (Follower) | Remote Storage | RL metadata storage |
|---|---|---|---|
| 9: msg 9 LE-3<br>10: msg 10 LE-3<br>11: msg 11 LE-3 (HW)<br>[segments till offset 8 were deleted]<br>leader_epochs<br>LE-0, 0<br>LE-1, 3<br>LE-2, 5<br>LE-3, 7 | 0: msg 0 LE-0<br>1: msg 1 LE-0<br>2: msg 2 LE-0<br>3: msg 3 LE-1<br>leader_epochs<br>LE-0, 0<br>LE-1, 3<br>&lt;Fetch State&gt;<br>1. Fetch from leader LE-1, 4<br>2. Receives OMTS, truncate local segments.<br>3. Fetch ELO, Receives ELO 9, LE-3 and moves to BuildingRemoteLogAux state | seg-0-2, uuid-1<br>log:<br>0: msg 0 LE-0<br>1: msg 1 LE-0<br>2: msg 2 LE-0<br>epochs:<br>LE-0, 0<br>seg 3-5, uuid-2<br>log:<br>3: msg 3 LE-1<br>4: msg 4 LE-1<br>5: msg 5 LE-2<br>epochs:<br>LE-0, 0<br>LE-1, 3<br>LE-2, 5<br>Seg 6-8, uuid-3, LE-3<br>log:<br>6: msg 6 LE-2<br>7: msg 7 LE-3<br>8: msg 8 LE-3<br>epochs:<br>LE-0, 0<br>LE-1, 3<br>LE-2, 5<br>LE-3, 7 | seg-0-2, uuid-1<br>segment epochs<br>LE-0, 0<br>seg-3-5, uuid-2<br>segment epochs<br>LE-1, 3<br>LE-2, 5<br>seg-6-8, uuid-3<br>segment epochs<br>LE-2, 5<br>LE-3, 7 |

...

| Broker A (Leader) | Broker B | Remote Storage | RL metadata storage |
|---|---|---|---|
| 0: msg 0 LE-0<br>1: msg 1 LE-0<br>2: msg 2 LE-0 (HW)<br>leader_epochs<br>LE-0, 0 | 0: msg 0 LE-0<br>1: msg 1 LE-0<br>2: msg 2 LE-0 (HW)<br>leader_epochs<br>LE-0, 0 | seg-0-1:<br>log:<br>0: msg 0 LE-0<br>1: msg 1 LE-0<br>epoch:<br>LE-0, 0 | seg-0-1, uuid-1<br>segment epochs<br>LE-0, 0 |

...

In this case, it is acceptable to lose data, but we have to keep the same behaviour as described in the KIP-101.

| Broker A (stopped) | Broker B (Leader) | Remote Storage | RL metadata storage |
|---|---|---|---|
| 0: msg 0 LE-0<br>1: msg 1 LE-0<br>2: msg 2 LE-0 (HW)<br>leader_epochs<br>LE-0, 0 | 0: msg 0 LE-0 (HW)<br>1: msg 3 LE-1<br>leader_epochs<br>LE-0, 0<br>LE-1, 1 | seg-0-1:<br>log:<br>0: msg 0 LE-0<br>1: msg 1 LE-0<br>epoch:<br>LE-0, 0 | seg-0-1, uuid-1<br>segment epochs<br>LE-0, 0 |

After restart, B loses messages 1 and 2. B becomes the new leader, and receives a new message 3 (LE-1, offset 1).

(Note: This may not technically be an unclean leader election, because B may not have been removed from the ISR, as both brokers crashed at the same time.)

...

| Broker A (follower) | Broker B (Leader) | Remote Storage | RL metadata storage |
|---|---|---|---|
| 0: msg 0 LE-0<br>1: msg 3 LE-1<br>2: msg 4 LE-1 (HW)<br>leader_epochs<br>LE-0, 0<br>LE-1, 1<br>LE-2, 2 | 0: msg 0 LE-0<br>1: msg 3 LE-1<br>2: msg 4 LE-1 (HW)<br>leader_epochs<br>LE-0, 0<br>LE-1, 1<br>LE-2, 2 | seg-0-1:<br>log:<br>0: msg 0 LE-0<br>1: msg 1 LE-0<br>epoch:<br>LE-0, 0<br>seg-1-1<br>log:<br>1: msg 3 LE-1<br>epoch:<br>LE-0, 0<br>LE-1, 1 | seg-0-1, uuid-1<br>segment epochs<br>LE-0, 0<br>seg-1-1, uuid-2<br>segment epochs<br>LE-1, 1 |

A new message (message 4) is received. The 2nd segment on broker B (seg-1-1) is shipped to remote storage.

Consider the case where the local segments up to offset 2 are deleted on both brokers:

A consumer fetches offset 0, LE-0. According to the local leader epoch cache, offset 0 LE-0 is valid. So, the broker returns message 0 from remote segment 0-1.

A pre-KIP-320 consumer fetches offset 1, without leader epoch info. According to the local leader epoch cache, offset 1 belongs to LE-1. So, the broker returns message 3 from remote segment 1-1, rather than the LE-0 offset 1 message (message 1) in seg-0-1.

A consumer that fetches offset 2, LE-0 is fenced (KIP-320).

A consumer that fetches offset 1, LE-1 receives message 3 from remote segment 1-1.
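The lookup "which leader epoch does this offset belong to" used in the cases above can be sketched with a sorted map keyed by the start offset of each epoch. This is an illustrative sketch only; `LeaderEpochLookupSketch` and its methods are hypothetical names and not the broker's leader epoch cache API. The sample entries (LE-0 starting at offset 0, LE-1 starting at offset 1) are taken from the scenario above.

```java
import java.util.Map;
import java.util.OptionalInt;
import java.util.TreeMap;

final class LeaderEpochLookupSketch {
    // Maps the start offset of each leader epoch to the epoch number.
    private final TreeMap<Long, Integer> epochByStartOffset = new TreeMap<>();

    void assign(int epoch, long startOffset) {
        epochByStartOffset.put(startOffset, epoch);
    }

    /** Returns the epoch whose start offset is the greatest one not above the given offset. */
    OptionalInt epochForOffset(long offset) {
        Map.Entry<Long, Integer> entry = epochByStartOffset.floorEntry(offset);
        return entry == null ? OptionalInt.empty() : OptionalInt.of(entry.getValue());
    }

    public static void main(String[] args) {
        LeaderEpochLookupSketch cache = new LeaderEpochLookupSketch();
        cache.assign(0, 0L); // LE-0 starts at offset 0
        cache.assign(1, 1L); // LE-1 starts at offset 1
        System.out.println(cache.epochForOffset(0));
        System.out.println(cache.epochForOffset(1));
    }
}
```

With these entries, offset 0 resolves to LE-0 and offset 1 resolves to LE-1, which is why a fetch for offset 1 without epoch information is served from remote segment 1-1.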

...

| Broker A (Leader) | Broker B (stopped) | Remote Storage | RL metadata storage |
|---|---|---|---|
| 0: msg 0 LE-0<br>1: msg 1 LE-0<br>2: msg 2 LE-0<br>3: msg 3 LE-0<br>4: msg 4 LE-0<br>5: msg 7 LE-2<br>6: msg 8 LE-2<br>leader_epochs<br>LE-0, 0<br>LE-2, 5<br>1. Broker A receives two new messages in LE-2<br>2. Broker A ships seg-4-5 to remote storage | 0: msg 0 LE-0<br>1: msg 1 LE-0<br>2: msg 4 LE-1<br>3: msg 5 LE-1<br>4: msg 6 LE-1<br>leader_epochs<br>LE-0, 0<br>LE-1, 2 | seg-0-3<br>log:<br>0: msg 0 LE-0<br>1: msg 1 LE-0<br>2: msg 2 LE-0<br>3: msg 3 LE-0<br>epoch:<br>LE-0, 0<br>seg-0-3<br>0: msg 0 LE-0<br>1: msg 1 LE-0<br>2: msg 4 LE-1<br>3: msg 5 LE-1<br>epoch:<br>LE-0, 0<br>LE-1, 2<br>seg-4-5<br>epoch:<br>LE-0, 0<br>LE-2, 5 | seg-0-3, uuid1<br>segment epochs<br>LE-0, 0<br>seg-0-3, uuid2<br>segment epochs<br>LE-0, 0<br>LE-1, 2<br>seg-4-5, uuid3<br>segment epochs<br>LE-0, 0<br>LE-2, 5 |

...

| Broker A (Leader) | Broker B (started, follower) | Remote Storage | RL metadata storage |
|---|---|---|---|
| 6: msg 8 LE-2<br>leader_epochs<br>LE-0, 0<br>LE-2, 5 | 1. Broker B fetches offset 0, and receives OMTS error.<br>2. Broker B receives ELO=6, LE-2<br>3. In BuildingRemoteLogAux state, broker B finds seg-4-5 has LE-2. So, it builds local LE cache from seg-4-5:<br>leader_epochs<br>LE-0, 0<br>LE-2, 5<br>4. Broker B continues fetching local messages from ELO 6, LE-2<br>5. Broker B joins ISR | seg-0-3<br>log:<br>0: msg 0 LE-0<br>1: msg 1 LE-0<br>2: msg 2 LE-0<br>3: msg 3 LE-0<br>epoch:<br>LE-0, 0<br>seg-0-3<br>0: msg 0 LE-0<br>1: msg 1 LE-0<br>2: msg 4 LE-1<br>3: msg 5 LE-1<br>epoch:<br>LE-0, 0<br>LE-1, 2<br>seg-4-5<br>epoch:<br>LE-0, 0<br>LE-2, 5 | seg-0-3, uuid1<br>segment epochs<br>LE-0, 0<br>seg-0-3, uuid2<br>segment epochs<br>LE-0, 0<br>LE-1, 2<br>seg-4-5, uuid3<br>segment epochs<br>LE-0, 0<br>LE-2, 5 |

...

For any fetch request, ReplicaManager will proceed with making a call to readFromLocalLog. If this method returns an OffsetOutOfRange exception, it will delegate the read call to RemoteLogManager. More details are explained in the RLM/RSM tasks section. If the remote storage is not available, it will throw a new error, TIERED_STORAGE_NOT_AVAILABLE.
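The local-then-remote read path described above can be sketched as follows. This is a simplified illustration under stated assumptions: the two exception classes and the functional parameters are hypothetical stand-ins, not the ReplicaManager or RemoteLogManager APIs, and an empty `Optional` models remote storage being unavailable.

```java
import java.util.Optional;
import java.util.function.LongFunction;

final class TieredReadSketch {
    /** Hypothetical stand-in for the out-of-range error of a local read. */
    static final class OffsetOutOfRange extends RuntimeException { }

    /** Hypothetical stand-in for the TIERED_STORAGE_NOT_AVAILABLE error. */
    static final class TieredStorageNotAvailable extends RuntimeException { }

    static String read(long offset,
                       LongFunction<String> localRead,
                       Optional<LongFunction<String>> remoteRead) {
        try {
            return localRead.apply(offset);
        } catch (OffsetOutOfRange e) {
            // Data is no longer local: delegate to the remote tier if it is reachable.
            return remoteRead.orElseThrow(TieredStorageNotAvailable::new).apply(offset);
        }
    }

    public static void main(String[] args) {
        LongFunction<String> local = o -> {
            if (o < 5) throw new OffsetOutOfRange();
            return "local-" + o;
        };
        LongFunction<String> remote = o -> "remote-" + o;
        System.out.println(read(7, local, Optional.of(remote)));
        System.out.println(read(2, local, Optional.of(remote)));
    }
}
```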

...

This API is enhanced to support a new target timestamp value of -4, which is called EARLIEST_LOCAL_TIMESTAMP. No new fields will be added to the request and response schemas, but there will be a version bump to indicate the version update. This request is about the offset from which the followers should start fetching to replicate the local logs. It represents the log-start-offset available in the local log storage, which is also called the local-log-start-offset. All the records earlier than this offset can be considered as copied to the remote storage. This is used by follower replicas to avoid fetching records that are already copied to remote tier storage.

...

If the processing of a topic partition fails due to a remote storage error, it follows a retry backoff algorithm with the initial retry interval as `remote.log.manager.task.retry.backoff.ms`, max backoff as `remote.log.manager.task.retry.backoff.max.ms`, and jitter as `remote.log.manager.task.retry.jitter`. You can see more details about the exponential backoff algorithm here.
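A retry delay driven by an initial interval, a maximum backoff, and a jitter factor can be sketched as below. This is a minimal sketch, not the broker's implementation: doubling the delay on each attempt and capping the jittered result at the maximum are assumptions, and `RetryBackoffSketch` is a hypothetical class. The values 500, 30,000, and 0.2 used in `main` are the defaults listed in the Configs section.

```java
import java.util.concurrent.ThreadLocalRandom;

final class RetryBackoffSketch {
    private final long initialMs;
    private final long maxMs;
    private final double jitter;

    RetryBackoffSketch(long initialMs, long maxMs, double jitter) {
        this.initialMs = initialMs;
        this.maxMs = maxMs;
        this.jitter = jitter;
    }

    /** Backoff for the given 0-based retry attempt before jitter is applied. */
    long baseBackoffMs(int attempt) {
        // Doubling per attempt is an assumption; the exponent is capped to avoid overflow.
        double exponential = initialMs * Math.pow(2.0, Math.min(attempt, 32));
        return (long) Math.min(exponential, (double) maxMs);
    }

    /** Backoff scaled by a random factor in [1 - jitter, 1 + jitter), never above the maximum. */
    long backoffMs(int attempt) {
        double delta = jitter > 0.0
                ? ThreadLocalRandom.current().nextDouble(-jitter, jitter)
                : 0.0;
        long jittered = (long) (baseBackoffMs(attempt) * (1.0 + delta));
        return Math.min(jittered, maxMs);
    }

    public static void main(String[] args) {
        RetryBackoffSketch backoff = new RetryBackoffSketch(500, 30_000, 0.2);
        for (int attempt = 0; attempt < 8; attempt++) {
            System.out.println("attempt " + attempt + ": base=" + backoff.baseBackoffMs(attempt)
                    + " ms, jittered=" + backoff.backoffMs(attempt) + " ms");
        }
    }
}
```

The jitter spreads retries from many partitions over time, so that they do not all hit the remote storage at the same instant after a shared failure.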

...

When a partition is deleted, the controller updates its state in RLMM with DELETE_PARTITION_MARKED and it expects that RLMM will have a mechanism to clean up the remote log segments. This process for the default RLMM is described in detail here.

RemoteLogMetadataManager implemented with an internal topic

Metadata of remote log segments is stored in an internal non-compacted topic called `__remote_log_metadata`. This topic can be created with a default partition count of 50. Users can configure the partition count, replication factor, etc. as mentioned in the config section.

...

RLMM maintains a metadata cache by subscribing to the respective remote log metadata topic partitions. Whenever a topic partition is reassigned to a new broker and RLMM on that broker is not subscribed to the respective remote log metadata topic partition, it will subscribe to that remote log metadata topic partition and add all the entries to the cache. So, in the worst case, RLMM on a broker may be consuming from most of the remote log metadata topic partitions. In the initial version, we will have a file-based cache for all the messages that are already consumed by this instance, and it will be loaded in-memory whenever RLMM is started. This cache is maintained in a separate file for each of the topic partitions. This will allow us to commit offsets of the partitions that are already read. Committed offsets can be stored in a local file to avoid reading the messages again when a broker is restarted.

...

RLMM instance on broker publishes the message to the topic with key as null and value with the below format.

type : Represents the value type. This value is 'apiKey' as mentioned in the schema. Its type is 'byte'.

version : The 'version' number of the type as mentioned in the schema. Its type is 'byte'.

data : record payload in kafka protocol message format, the schema is given below.
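The value layout above (type, then version, then data) can be sketched as a simple framing helper. This sketch assumes that type and version are each written as a single byte, as the field descriptions state, and treats the payload as opaque bytes; `MetadataValueFramingSketch` and its methods are hypothetical names, not part of the RLMM implementation.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

final class MetadataValueFramingSketch {
    /** Prepends the one-byte type (apiKey) and one-byte version to the serialized payload. */
    static byte[] frame(byte apiKey, byte version, byte[] payload) {
        return ByteBuffer.allocate(2 + payload.length)
                .put(apiKey)
                .put(version)
                .put(payload)
                .array();
    }

    static byte typeOf(byte[] value) {
        return value[0];
    }

    static byte versionOf(byte[] value) {
        return value[1];
    }

    static byte[] payloadOf(byte[] value) {
        return Arrays.copyOfRange(value, 2, value.length);
    }

    public static void main(String[] args) {
        // apiKey 1 and version 0 with a placeholder payload.
        byte[] value = frame((byte) 1, (byte) 0, new byte[] {10, 20, 30});
        System.out.println("type=" + typeOf(value) + ", version=" + versionOf(value)
                + ", payload=" + Arrays.toString(payloadOf(value)));
    }
}
```

A reader first inspects the type and version to pick the matching schema, and only then deserializes the payload.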

...

| Code Block | ||

|---|---|---|

| ||

{
  "apiKey": 0,
  "type": "data",
  "name": "RemoteLogSegmentMetadataRecord",
  "validVersions": "0",
  "flexibleVersions": "none",
  "fields": [
    {
      "name": "RemoteLogSegmentId",
      "type": "RemoteLogSegmentIdEntry",
      "versions": "0+",
      "about": "Unique representation of the remote log segment",
      "fields": [
        {
          "name": "TopicIdPartition",
          "type": "TopicIdPartitionEntry",
          "versions": "0+",
          "about": "Represents unique topic partition",
          "fields": [
            {
              "name": "Name",
              "type": "string",
              "versions": "0+",
              "about": "Topic name"
            },
            {
              "name": "Id",
              "type": "uuid",
              "versions": "0+",
              "about": "Unique identifier of the topic"
            },
            {
              "name": "Partition",
              "type": "int32",
              "versions": "0+",
              "about": "Partition number"
            }
          ]
        },
        {
          "name": "Id",
          "type": "uuid",
          "versions": "0+",
          "about": "Unique identifier of the remote log segment"
        }
      ]
    },
    {
      "name": "StartOffset",
      "type": "int64",
      "versions": "0+",
      "about": "Start offset of the segment."
    },
    {
      "name": "EndOffset",
      "type": "int64",
      "versions": "0+",
      "about": "End offset of the segment."
    },
    {
      "name": "LeaderEpoch",
      "type": "int32",
      "versions": "0+",
      "about": "Leader epoch from which this segment instance is created or updated"
    },
    {
      "name": "MaxTimestamp",
      "type": "int64",
      "versions": "0+",
      "about": "Maximum timestamp with in this segment."
    },
    {
      "name": "EventTimestamp",
      "type": "int64",
      "versions": "0+",
      "about": "Event timestamp of this segment."
    },
    {
      "name": "SegmentLeaderEpochs",
      "type": "[]SegmentLeaderEpochEntry",
      "versions": "0+",
      "about": "Leader epoch cache.",
      "fields": [
        {
          "name": "LeaderEpoch",
          "type": "int32",
          "versions": "0+",
          "about": "Leader epoch"
        },
        {
          "name": "Offset",
          "type": "int64",
          "versions": "0+",
          "about": "Start offset for the leader epoch"
        }
      ]
    },
    {
      "name": "SegmentSizeInBytes",
      "type": "int32",
      "versions": "0+",
      "about": "Segment size in bytes"
    },
    {
      "name": "RemoteLogSegmentState",
      "type": "int8",
      "versions": "0+",
      "about": "State of the remote log segment"
    }
  ]
}

{
  "apiKey": 1,
  "type": "data",
  "name": "RemoteLogSegmentMetadataRecordUpdate",
  "validVersions": "0",
  "flexibleVersions": "none",
  "fields": [
    {
      "name": "RemoteLogSegmentId",
      "type": "RemoteLogSegmentIdEntry",
      "versions": "0+",
      "about": "Unique representation of the remote log segment",
      "fields": [
        {
          "name": "TopicIdPartition",
          "type": "TopicIdPartitionEntry",
          "versions": "0+",
          "about": "Represents unique topic partition",
          "fields": [
            {
              "name": "Name",
              "type": "string",
              "versions": "0+",
              "about": "Topic name"
            },
            {
              "name": "Id",
              "type": "uuid",
              "versions": "0+",
              "about": "Unique identifier of the topic"
            },
            {
              "name": "Partition",
              "type": "int32",
              "versions": "0+",
              "about": "Partition number"
            }
          ]
        },
        {
          "name": "Id",
          "type": "uuid",
          "versions": "0+",
          "about": "Unique identifier of the remote log segment"
        }
      ]
    },
    {
      "name": "LeaderEpoch",
      "type": "int32",
      "versions": "0+",
      "about": "Leader epoch from which this segment instance is created or updated"
    },
    {
      "name": "EventTimestamp",
      "type": "int64",
      "versions": "0+",
      "about": "Event timestamp of this segment."
    },
    {
      "name": "RemoteLogSegmentState",
      "type": "int8",
      "versions": "0+",
      "about": "State of the remote segment"
    }
  ]
}

{
  "apiKey": 2,
  "type": "data",
  "name": "RemotePartitionDeleteMetadataRecord",
  "validVersions": "0",
  "flexibleVersions": "none",
  "fields": [
    {
      "name": "TopicIdPartition",
      "type": "TopicIdPartitionEntry",
      "versions": "0+",
      "about": "Represents unique topic partition",
      "fields": [
        {
          "name": "Name",
          "type": "string",
          "versions": "0+",
          "about": "Topic name"
        },
        {
          "name": "Id",
          "type": "uuid",
          "versions": "0+",
          "about": "Unique identifier of the topic"
        },
        {
          "name": "Partition",
          "type": "int32",
          "versions": "0+",
          "about": "Partition number"
        }
      ]
    },
    {
      "name": "Epoch",
      "type": "int32",
      "versions": "0+",
      "about": "Epoch (controller or leader) from which this event is created. DELETE_PARTITION_MARKED is sent by the controller. DELETE_PARTITION_STARTED and DELETE_PARTITION_FINISHED are sent by remote log metadata topic partition leader."
    },
    {
      "name": "EventTimestamp",
      "type": "int64",
      "versions": "0+",
      "about": "Event timestamp of this segment."
    },
    {
      "name": "RemotePartitionDeleteState",
      "type": "int8",
      "versions": "0+",
      "about": "Deletion state of the remote partition"
    }
  ]
}

package org.apache.kafka.server.log.remote.storage;

...

/**
 * It indicates the deletion state of the remote topic partition. This will be based on the action executed on this
 * partition by the remote log service implementation.
 */
public enum RemotePartitionDeleteState {

    /**
     * This is used when a topic/partition is determined to be deleted by controller.
     * This partition is marked for delete by controller. That means, all its remote log segments are eligible for
     * deletion so that remote partition removers can start deleting them.
     */
    DELETE_PARTITION_MARKED((byte) 0),

    /**
     * This state indicates that the partition deletion is started but not yet finished.
     */
    DELETE_PARTITION_STARTED((byte) 1),

    /**
     * This state indicates that the partition is deleted successfully.
     */
    DELETE_PARTITION_FINISHED((byte) 2);

...

}

package org.apache.kafka.server.log.remote.storage;

...

/**
 * It indicates the state of the remote log segment or partition. This will be based on the action executed on this
 * segment or partition by the remote log service implementation.
 * <p>
 * todo: check whether the state validations to be checked or not, add next possible states for each state.
 */
public enum RemoteLogSegmentState {

    /**
     * This state indicates that the segment copying to remote storage is started but not yet finished.
     */
    COPY_SEGMENT_STARTED((byte) 0),

    /**
     * This state indicates that the segment copying to remote storage is finished.
     */
    COPY_SEGMENT_FINISHED((byte) 1),

    /**
     * This state indicates that the segment deletion is started but not yet finished.
     */
    DELETE_SEGMENT_STARTED((byte) 2),

    /**
     * This state indicates that the segment is deleted successfully.
     */
    DELETE_SEGMENT_FINISHED((byte) 3),

...

} |

...

| remote.log.metadata.topic.replication.factor | Replication factor of the topic Default: 3 |

| remote.log.metadata.topic.num.partitions | No of partitions of the topic Default: 50 |

| remote.log.metadata.topic.retention.ms | Retention of the topic in milliseconds. Default: -1, that means unlimited. Users can configure this value based on their use cases. To avoid any data loss, this value should be more than the maximum retention period of any topic enabled with tiered storage in the cluster. |

| remote.log.metadata.manager.listener.name | Listener name to be used to connect to the local broker by the RemoteLogMetadataManager implementation on the broker. This is a mandatory config while using the default RLMM implementation, which is `org.apache.kafka.server.log.remote.metadata.storage.TopicBasedRemoteLogMetadataManager`. The respective endpoint address is passed with the "bootstrap.servers" property while invoking RemoteLogMetadataManager#configure(Map<String, ?> props). This is used by Kafka clients created in the RemoteLogMetadataManager implementation. |

| remote.log.metadata.* | Default RLMM implementation creates producer and consumer instances. Common client properties can be configured with the `remote.log.metadata.common.client.` prefix. Users can also pass properties specific to producer/consumer with the `remote.log.metadata.producer.` and `remote.log.metadata.consumer.` prefixes. These will override properties with the `remote.log.metadata.common.client.` prefix. Any other properties should be prefixed with the config: "remote.log.metadata.manager.impl.prefix", default value is "rlmm.config.". These configs will be passed to RemoteLogMetadataManager#configure(Map<String, ?> props). For example: "rlmm.config.remote.log.metadata.producer.batch.size=100" will set the producer batch.size to 100. |

| remote.partition.remover.task.interval.ms | The interval at which remote partition remover runs to delete the remote storage of the partitions marked for deletion. Default value: 3600000 (1 hr) |
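The prefix convention for RLMM properties in the table above can be sketched as follows. This is an illustrative sketch only: it assumes that the configured prefix (default "rlmm.config.") is stripped before the remaining key is handed to `configure`, and `RlmmPrefixSketch` and `withPrefixStripped` are hypothetical names. The broker's actual handling may differ.

```java
import java.util.HashMap;
import java.util.Map;

final class RlmmPrefixSketch {
    /** Selects the entries that start with the prefix and removes the prefix from their keys. */
    static Map<String, Object> withPrefixStripped(Map<String, ?> brokerProps, String prefix) {
        Map<String, Object> stripped = new HashMap<>();
        for (Map.Entry<String, ?> entry : brokerProps.entrySet()) {
            if (entry.getKey().startsWith(prefix)) {
                stripped.put(entry.getKey().substring(prefix.length()), entry.getValue());
            }
        }
        return stripped;
    }

    public static void main(String[] args) {
        Map<String, Object> brokerProps = new HashMap<>();
        brokerProps.put("rlmm.config.remote.log.metadata.producer.batch.size", "100");
        brokerProps.put("log.retention.ms", "604800000"); // unrelated broker property, filtered out
        System.out.println(withPrefixStripped(brokerProps, "rlmm.config."));
    }
}
```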

Committed offsets file format

...

| Code Block | ||||

|---|---|---|---|---|

| ||||

<magic><topic-name><topic-id><metadata-topic-offset><sequence-of-serialized-entries>

magic: unsigned var int, version of this file format.
topic-name: string, topic name.
topic-id: uuid, uuid of topic
metadata-topic-offset: var long, offset of the remote log metadata topic partition up to which this topic partition's remote log metadata is fetched.
serialized-entries: sequence of serialized entries defined as below, more types can be added later if needed.

Serialization of entry is done as mentioned below. This is very similar to the message format mentioned earlier for storing into the metadata topic.

length : unsigned var int, length of this entry which is sum of sizes of type, version, and data.
type : unsigned var int, represents the value type. This value is 'apikey' as mentioned in the schema.
version : unsigned var int, the 'version' number of the type as mentioned in the schema.
data : record payload in kafka protocol message format, the schema is given below.

Both type and version are added before the data is serialized into record value. Schema can be evolved by adding a new version with the respective changes. A new type can also be supported by adding the respective type and its version.

{
  "apiKey": 0,
  "type": "data",
  "name": "RemoteLogSegmentMetadataRecordStored",
  "validVersions": "0",
  "flexibleVersions": "none",
  "fields": [
    {
      "name": "SegmentId",
      "type": "uuid",
      "versions": "0+",
      "about": "Unique identifier of the log segment"
    },
    {
      "name": "StartOffset",
      "type": "int64",
      "versions": "0+",
      "about": "Start offset of the segment."
    },
    {
      "name": "EndOffset",
      "type": "int64",
      "versions": "0+",
      "about": "End offset of the segment."
    },
    {
      "name": "LeaderEpoch",
      "type": "int32",
      "versions": "0+",
      "about": "Leader epoch from which this segment instance is created or updated"
    },
    {
      "name": "MaxTimestamp",
      "type": "int64",
      "versions": "0+",
      "about": "Maximum timestamp with in this segment."
    },
    {
      "name": "EventTimestamp",
      "type": "int64",
      "versions": "0+",
      "about": "Event timestamp of this segment."
    },
    {
      "name": "SegmentLeaderEpochs",
      "type": "[]SegmentLeaderEpochEntry",
      "versions": "0+",
      "about": "Leader epoch cache.",
      "fields": [
        {
          "name": "LeaderEpoch",
          "type": "int32",
          "versions": "0+",
          "about": "Leader epoch"
        },
        {
          "name": "Offset",
          "type": "int64",
          "versions": "0+",
          "about": "Start offset for the leader epoch"
        }
      ]
    },
    {
      "name": "SegmentSizeInBytes",
      "type": "int32",
      "versions": "0+",
      "about": "Segment size in bytes"
    },
    {
      "name": "RemoteLogSegmentState",
      "type": "int8",
      "versions": "0+",
      "about": "State of the remote log segment"
    }
  ]
}

{
  "apiKey": 1,
  "type": "data",
  "name": "DeletePartitionStateRecord",
  "validVersions": "0",
  "flexibleVersions": "none",
  "fields": [
    {
      "name": "Epoch",
      "type": "int32",
      "versions": "0+",
      "about": "Epoch (controller or leader) from which this event is created. DELETE_PARTITION_MARKED is sent by the controller. DELETE_PARTITION_STARTED and DELETE_PARTITION_FINISHED are sent by remote log metadata topic partition leader."
    },
    {
      "name": "EventTimestamp",
      "type": "int64",
      "versions": "0+",
      "about": "Event timestamp of this segment."
    },
    {
      "name": "RemotePartitionDeleteState",
      "type": "int8",
      "versions": "0+",
      "about": "Deletion state of the remote partition"
    }
  ]
} |

...

Message Formatter for the internal topic

//todo

...

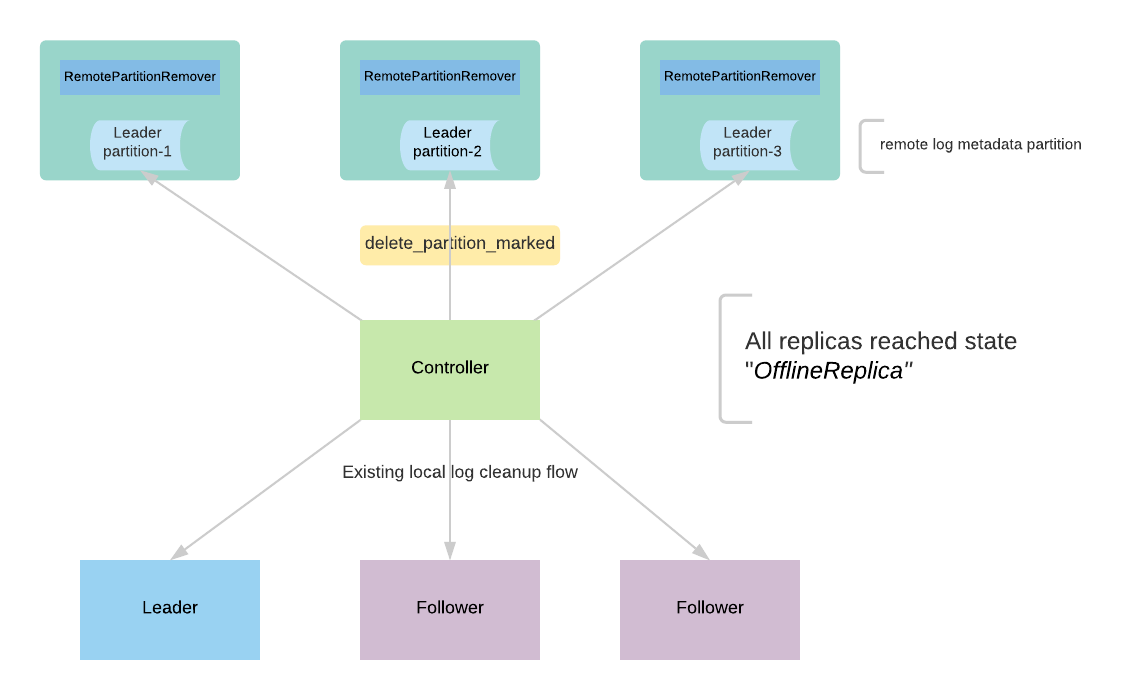

The controller receives a delete request for a topic. It goes through the existing protocol of deletion and makes all the replicas offline to stop taking any fetch requests. After all the replicas reach the offline state, the controller publishes an event to the RemoteLogMetadataManager (RLMM) by marking the topic as deleted. With KIP-516, topics are represented with uuid, and topics can be deleted asynchronously. This allows the remote logs to be garbage collected later by publishing the deletion marker into the remote log metadata topic.

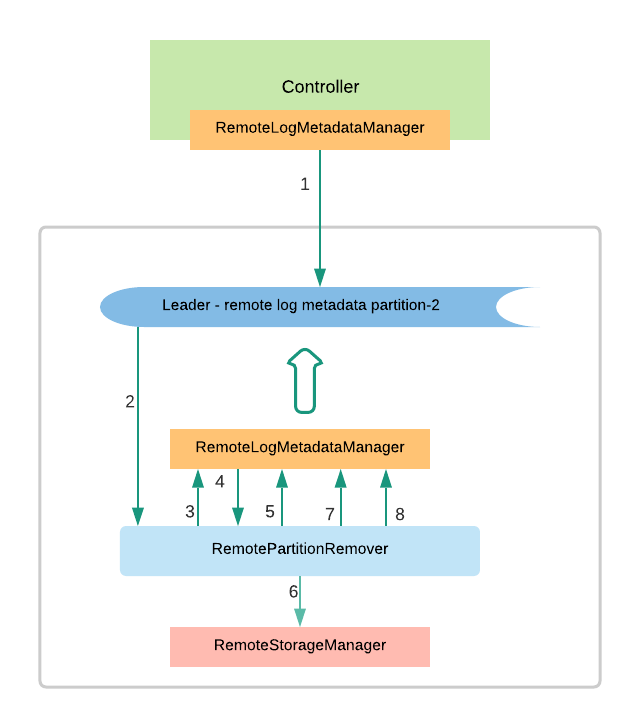

A RemotePartitionRemover instance is created on the leader for each of the remote log segment metadata topic partitions. It consumes messages from those partitions and filters the delete partition events which need to be processed. It also maintains a committed offset for this instance to handle leader failovers to a different replica so that it can start processing the messages where it left off.

RemotePartitionRemover(RPM) processes the request with the following flow as mentioned in the below diagram.

- The controller publishes a delete_partition_marked event to say that the partition is marked for deletion. There can be multiple events published when the controller restarts or fails over, and this event will be deduplicated by RPM.

- RPM receives the delete_partition_marked event and processes it if it has not been processed earlier.

- RPM publishes a delete_partition_started event that indicates the partition deletion has been started.

- RPM gets all the remote log segments for the partition using RLMM, and each of these remote log segments is deleted with the next steps. RLMM subscribes to the local remote log metadata partitions, and it will have the segment metadata of all the user topic partitions associated with that remote log metadata partition.

- Publish delete_segment_started event with the segment id.

- RPM deletes the segment using RSM

- Publish delete_segment_finished event with segment id once it is successful.

- Publish delete_partition_finished once all the segments have been deleted successfully.
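The event sequence above can be sketched as a small in-memory simulation. This is only a sketch under stated assumptions: events are modeled as plain strings, the actual segment deletion through RSM is reduced to a comment, and `PartitionRemovalSketch` is a hypothetical class rather than the RemotePartitionRemover implementation.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

final class PartitionRemovalSketch {
    // Partitions whose delete_partition_marked event was already handled.
    private final Set<String> processedPartitions = new HashSet<>();

    /** Handles a delete_partition_marked event and returns the events published in response. */
    List<String> onDeletePartitionMarked(String partition, List<String> remoteSegmentIds) {
        List<String> published = new ArrayList<>();
        if (!processedPartitions.add(partition)) {
            return published; // duplicate marker, for example after a controller failover
        }
        published.add("delete_partition_started");
        for (String segmentId : remoteSegmentIds) {
            published.add("delete_segment_started:" + segmentId);
            // A real remover would delete the segment through the RSM at this point.
            published.add("delete_segment_finished:" + segmentId);
        }
        published.add("delete_partition_finished");
        return published;
    }

    public static void main(String[] args) {
        PartitionRemovalSketch remover = new PartitionRemovalSketch();
        System.out.println(remover.onDeletePartitionMarked("topicA-0", List.of("uuid-1", "uuid-2")));
        // The second marker for the same partition is deduplicated and publishes nothing.
        System.out.println(remover.onDeletePartitionMarked("topicA-0", List.of("uuid-1", "uuid-2")));
    }
}
```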

Protocol Changes

ListOffsets

Currently, it supports the listing of offsets based on the earliest timestamp and the latest timestamp of the complete log. There is no change in the protocol, but the new versions will also support listing the earliest offset based on the local log only, rather than on the complete log including the remote log. This protocol will be updated with the changes from KIP-516, but no other changes are required, as mentioned earlier. Request and response versions will be bumped to version 7.

Public Interfaces

Compacted topics will not have remote storage support.

Configs

...

remote.storage.system.enable - Whether to enable tiered storage functionality in a broker or not. Valid values are `true` or `false` and the default value is false. This property gives backward compatibility. When it is true, the broker starts all the services required for tiered storage.

remote.log.storage.manager.class.name - This is mandatory if the remote.storage.system.enable is set as true.

remote.log.metadata.manager.class.name(optional) - This is an optional property. If this is not configured, Kafka uses an inbuilt metadata manager backed by an internal topic.

...

(These configs are dependent on remote storage manager implementation)

remote.log.storage.*

...

(These configs are dependent on remote log metadata manager implementation)

remote.log.metadata.*

...

remote.log.index.file.cache.total.size.mb

The total size of the space allocated to store index files fetched from remote storage in the local storage.

Default value: 1024

remote.log.manager.thread.pool.size

Remote log thread pool size, which is used in scheduling tasks to copy segments, and clean up remote log segments.

Default value: 10

remote.log.manager.task.interval.ms

The interval at which the remote log manager runs the scheduled tasks like copy segments, and clean up remote log segments.

Default value: 30,000

remote.log.manager.task.retry.backoff.ms

The amount of time in milliseconds to wait before attempting to retry a failed remote storage request.

Default value: 500

remote.log.manager.task.retry.backoff.max.ms

The maximum amount of time in milliseconds to wait before attempting to retry a failed remote storage request.

Default value: 30,000

remote.log.manager.task.retry.jitter

Random jitter amount applied to the `remote.log.manager.task.retry.backoff.ms` for computing the resultant backoff interval. This will avoid reconnection storms.

Default value: 0.2

remote.log.reader.threads

Remote log reader thread pool size, which is used in scheduling tasks to fetch data from remote storage.

Default value: 5

remote.log.reader.max.pending.tasks

Maximum remote log reader thread pool task queue size. If the task queue is full, broker will stop reading remote log segments.

Default value: 100

...

Users can set the desired config for the remote.log.storage.enable property for a topic; the default value is false. To enable tiered storage for a topic, set remote.log.storage.enable to true.

Below retention configs are similar to the log retention. This configuration is used to determine how long the log segments are to be retained in the local storage. Existing log.retention.* are retention configs for the topic partition which includes both local and remote storage.

local.log.retention.ms

The number of milliseconds to keep the local log segment before it gets deleted. If not set, the value in `log.retention.ms` is used. The effective value should always be less than or equal to the log.retention.ms value.

local.log.retention.bytes

The maximum size of local log segments that can grow for a partition before it deletes the old segments. If not set, the value in `log.retention.bytes` is used. The effective value should always be less than or equal to log.retention.bytes value.
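The fallback and upper-bound rule shared by both local retention settings can be sketched as below. This is a minimal sketch, not the broker's validation code: `LocalRetentionSketch` is a hypothetical class, and treating a negative total retention as "unlimited" is an assumption made for the illustration.

```java
import java.util.OptionalLong;

final class LocalRetentionSketch {
    /**
     * Resolves the effective local retention: the local value if set, otherwise the
     * total (log.retention.*) value, and rejects a local value above the total.
     */
    static long effectiveLocalRetention(OptionalLong localValue, long totalValue) {
        long effective = localValue.orElse(totalValue);
        boolean totalUnlimited = totalValue < 0; // assumption: negative means unlimited
        boolean effectiveUnlimited = effective < 0;
        if (!totalUnlimited && (effectiveUnlimited || effective > totalValue)) {
            throw new IllegalArgumentException(
                    "local retention " + effective + " must not exceed total retention " + totalValue);
        }
        return effective;
    }

    public static void main(String[] args) {
        long sevenDaysMs = 7L * 24 * 60 * 60 * 1000;
        long sixHoursMs = 6L * 60 * 60 * 1000;
        // Local retention unset: falls back to the total retention.
        System.out.println(effectiveLocalRetention(OptionalLong.empty(), sevenDaysMs));
        // Local retention set to a shorter period than the total retention.
        System.out.println(effectiveLocalRetention(OptionalLong.of(sixHoursMs), sevenDaysMs));
    }
}
```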

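`org.apache.kafka.server.log.remote.storage.RemoteLogMetadataFormatter` can be used to format messages received from the remote log metadata topic by the console consumer. Users can pass the properties mentioned in the block below with '--property' while running the console consumer with this message formatter. The block below explains the format, which may change later. This formatter can be helpful for debugging purposes.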

| Code Block | ||||||

|---|---|---|---|---|---|---|

| ||||||

partition:<val><sep>message-offset:<val><sep>type:<RemoteLogSegmentMetadata | RemoteLogSegmentMetadataUpdate | DeletePartitionState><sep>version:<_no_><vs>event-value:<string representation of the event>

val: represents the respective value of the key.

sep: represents the separator, default value is: ","

partition : Remote log metadata topic partition number. This is optional.

Use print.partition property to print it, default is false

message-offset : Offset of this message in remote log metadata topic. This is optional.

Use print.message.offset property to print it, default is false

type: Event value type, which can be one of RemoteLogSegmentMetadata, RemoteLogSegmentMetadataUpdate, DeletePartitionState values.

version: Version number of the event value type. This is optional.

Use print.version property to print it, default is false

Use print.all.event.value.fields to print the string representation of the event which will include all the fields in the data, default property value is false.

Event value can be of any of the types below:

remote-log-segment-id is represented as "{id:<val><sep>topicId:<val><sep>topicName:<val><sep>partition:<val>}" in the event value.

topic-id-partition is represented as "{topicId:<val><sep>topicName:<val><sep>partition:<val>}" in the event value.

For RemoteLogSegmentMetadata

default representation is "{remote-log-segment-id:<val><sep>start-offset:<val><sep>end-offset:<val><sep>leader-epoch:<val><sep>remote-log-segment-state:<COPY_SEGMENT_STARTED | COPY_SEGMENT_FINISHED | DELETE_SEGMENT_STARTED | DELETE_SEGMENT_FINISHED>}"

For RemoteLogSegmentMetadataUpdate

default representation is "{remote-log-segment-id:<val><sep>leader-epoch:<val><sep>remote-log-segment-state:<COPY_SEGMENT_STARTED | COPY_SEGMENT_FINISHED | DELETE_SEGMENT_STARTED | DELETE_SEGMENT_FINISHED>}"

For DeletePartitionState

default representation is "{topic-id-partition:<val><sep>epoch:<val><sep>remote-partition-delete-state:<DELETE_PARTITION_MARKED | DELETE_PARTITION_STARTED | DELETE_PARTITION_FINISHED>}"

|
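As a rough illustration of the line format above, the following sketch assembles one output line from already-extracted values. `MetadataRecordLineFormatter` is a hypothetical name and this is not the formatter shipped with Kafka. The sketch assumes that optional fields are simply omitted when their print property is false (modelled here as `null`), and that the same separator is used before `event-value`.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of the line layout; not the actual Kafka message formatter. */
final class MetadataRecordLineFormatter {
    private MetadataRecordLineFormatter() { }

    /**
     * Builds one line. A null partition, messageOffset, or version means the
     * corresponding print.* property is false, so that field is left out.
     */
    static String format(Integer partition, Long messageOffset, String type,
                         Integer version, String eventValue, String sep) {
        List<String> fields = new ArrayList<>();
        if (partition != null) {
            fields.add("partition:" + partition);
        }
        if (messageOffset != null) {
            fields.add("message-offset:" + messageOffset);
        }
        fields.add("type:" + type);
        if (version != null) {
            fields.add("version:" + version);
        }
        fields.add("event-value:" + eventValue);
        // Assumption: one separator is used between all fields.
        return String.join(sep, fields);
    }
}
```

For example, `format(0, 42L, "RemoteLogSegmentMetadataUpdate", null, "{x}", ",")` yields `partition:0,message-offset:42,type:RemoteLogSegmentMetadataUpdate,event-value:{x}`.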


Topic deletion lifecycle


The controller receives a delete request for a topic. It goes through the existing deletion protocol and takes all the replicas offline so that they stop serving fetch requests. After all the replicas reach the offline state, the controller publishes an event to the RemoteLogMetadataManager (RLMM), marking the topic as deleted by calling RemoteLogMetadataManager.updateRemotePartitionDeleteMetadata with the state RemotePartitionDeleteState#DELETE_PARTITION_MARKED. With KIP-516, topics are represented by a UUID and can be deleted asynchronously. This allows the remote logs to be garbage collected later by publishing the deletion marker into the remote log metadata topic. RLMM is responsible for asynchronously deleting all the remote log segments of a partition after receiving RemotePartitionDeleteState as DELETE_PARTITION_MARKED.

The default RLMM handles remote partition deletion by using RemotePartitionRemover (RPRM).

An RPRM instance is created on each broker that hosts leaders of the remote log segment metadata topic partitions. This task is responsible for removing the remote storage of topics marked for deletion. It consumes messages from those remote log metadata partitions and filters the delete partition events that need to be processed. It collects those partitions and deletes the respective segments using RemoteStorageManager. This is done at regular intervals of remote.partition.remover.task.interval.ms (default value of 1hr). It commits the processed offsets of the metadata partitions once the deletions are executed successfully. This also helps with leader failover to a different replica, which can resume processing messages from where the previous leader left off.

RemotePartitionRemover(RPRM) processes the request with the following flow as mentioned in the below diagram.

- The controller publishes a DELETE_PARTITION_MARKED event to say that the partition is marked for deletion. Multiple events can be published when the controller restarts or fails over; RPRM deduplicates these events.

- RPRM receives the DELETE_PARTITION_MARKED event and processes it if it has not already been processed.

- RPRM publishes a DELETE_PARTITION_STARTED event, which indicates that the partition deletion has started.

- RPRM gets all the remote log segments for the partition using RLMM, and each of these remote log segments is deleted with the next steps. RLMM subscribes to the local remote log metadata partitions, so it has the segment metadata of all the user topic partitions associated with that remote log metadata partition.

- Publish DELETE_SEGMENT_STARTED event with the segment id.

- RPRM deletes the segment using RSM

- Publish DELETE_SEGMENT_FINISHED event with segment id once it is successful.

- Publish DELETE_PARTITION_FINISHED once all the segments have been deleted successfully.
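The event sequence in the steps above can be sketched with a small in-memory model. Everything here is hypothetical and simplified: `PartitionRemoverSketch` is not the actual RPRM, events are plain strings collected in a list rather than records in the remote log metadata topic, and deduplication uses an in-memory set instead of committed offsets.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.function.Consumer;

/** Hypothetical in-memory model of the partition deletion flow; not the actual RPRM. */
final class PartitionRemoverSketch {
    private final Set<String> finishedPartitions = new HashSet<>();
    private final List<String> published = new ArrayList<>();

    /**
     * Handles one DELETE_PARTITION_MARKED event.
     *
     * @param partition     identifier of the partition marked for deletion
     * @param segmentIds    remote log segment ids of the partition (obtained from RLMM in the real flow)
     * @param deleteSegment stand-in for the segment deletion done through RSM
     * @return false if the event is a duplicate of an already finished deletion
     */
    boolean onDeletePartitionMarked(String partition, List<String> segmentIds,
                                    Consumer<String> deleteSegment) {
        // Duplicate events (controller restart or failover) are ignored.
        if (finishedPartitions.contains(partition)) {
            return false;
        }
        published.add("DELETE_PARTITION_STARTED:" + partition);
        for (String segmentId : segmentIds) {
            published.add("DELETE_SEGMENT_STARTED:" + segmentId);
            deleteSegment.accept(segmentId);
            published.add("DELETE_SEGMENT_FINISHED:" + segmentId);
        }
        published.add("DELETE_PARTITION_FINISHED:" + partition);
        // Marked as done only after every segment is deleted, so a failure can be retried.
        finishedPartitions.add(partition);
        return true;
    }

    List<String> publishedEvents() {
        return published;
    }
}
```

If `deleteSegment` throws, the partition is not recorded as finished, so a later duplicate of the marker event triggers another attempt. This relies on segment deletion being idempotent.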

Protocol Changes

ListOffsets

Currently, it supports listing offsets based on the earliest and latest timestamps of the complete log. There is no change in the protocol, but the new versions will also support listing the earliest offset based on the local log, not only on the complete log including the remote log. This protocol will be updated with the changes from KIP-516, but no further changes are required, as mentioned earlier. Request and response versions will be bumped to version 7.

Fetch

We are bumping the fetch protocol version to handle the new error code; there are no changes in the request and response schemas. When a follower tries to fetch records for an offset that is no longer available in the leader's local storage, the leader returns a new error `OFFSET_MOVED_TO_TIERED_STORAGE`. This is explained in detail here.

OFFSET_MOVED_TO_TIERED_STORAGE - when the requested offset is not available in local storage but it is moved to tiered storage.

Public Interfaces

Compacted topics will not have remote storage support.

Configs

| System-Wide | remote.log.storage.system.enable - Whether to enable tier storage functionality in a broker or not. Valid values are `true` or `false` and the default value is false. This property gives backward compatibility. When it is true broker starts all the services required for tiered storage. remote.log.storage.manager.class.name - This is mandatory if the remote.log.storage.system.enable is set as true. remote.log.metadata.manager.class.name(optional) - This is an optional property. If this is not configured, Kafka uses an inbuilt metadata manager backed by an internal topic. |

| RemoteStorageManager | (These configs are dependent on remote storage manager implementation) remote.log.storage.* |

| RemoteLogMetadataManager | (These configs are dependent on remote log metadata manager implementation) remote.log.metadata.* |

| Remote log manager related configuration. | remote.log.index.file.cache.total.size.mb remote.log.manager.thread.pool.size remote.log.manager.task.interval.ms Remote log manager tasks are retried with the exponential backoff algorithm mentioned here. remote.log.manager.task.retry.backoff.ms remote.log.manager.task.retry.backoff.max.ms remote.log.manager.task.retry.jitter remote.log.reader.threads remote.log.reader.max.pending.tasks |

| Per Topic Configuration | Users can set the remote.storage.enable property for a topic; the default value is false. To enable tiered storage for a topic, set remote.storage.enable to true. This config cannot be disabled once it is enabled. We will provide this feature in future versions. The retention configs below are similar to the log retention configs. They determine how long log segments are retained in local storage. The existing retention.* configs apply to the topic partition as a whole, covering both local and remote storage. local.retention.ms local.retention.bytes |
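The table above names three retry settings for remote log manager tasks: an initial backoff, a maximum backoff, and a jitter. A generic exponential backoff using such settings might look like the sketch below. It is not a reproduction of the algorithm the table links to: the class name, the doubling multiplier, and the way jitter is applied are all assumptions made for illustration. The random value is passed in so the behaviour is deterministic and easy to test.

```java
/** Hypothetical sketch of exponential backoff with jitter; not Kafka's implementation. */
final class RetryBackoffSketch {
    private RetryBackoffSketch() { }

    /**
     * @param attempt   zero-based retry attempt
     * @param initialMs initial backoff (in the spirit of remote.log.manager.task.retry.backoff.ms)
     * @param maxMs     upper bound (in the spirit of remote.log.manager.task.retry.backoff.max.ms)
     * @param jitter    fraction in [0, 1) by which the delay may vary (retry.jitter)
     * @param random01  a random number in [0, 1), supplied by the caller
     * @return the delay in milliseconds before the next retry
     */
    static long backoffMs(int attempt, long initialMs, long maxMs, double jitter, double random01) {
        // Assumed multiplier of 2; the exponent is capped to keep the value finite and small.
        double base = initialMs * Math.pow(2.0, Math.min(attempt, 30));
        // Scale by a factor in [1 - jitter, 1 + jitter).
        double factor = 1.0 + jitter * (2.0 * random01 - 1.0);
        return Math.min(maxMs, (long) (base * factor));
    }
}
```

A caller would typically pass `ThreadLocalRandom.current().nextDouble()` as `random01`. With `random01 = 0.5` the jitter factor is exactly 1, so `backoffMs(3, 500, 30000, 0.2, 0.5)` gives 4000 and larger attempts are capped at 30000.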

Remote Storage Manager

`RemoteStorageManager` is an interface to provide the lifecycle of remote log segments and indexes. More details about how we arrived at this interface are discussed in the document. We will provide a simple implementation of RSM to get a better understanding of the APIs. HDFS and S3 implementations are planned to be hosted in external repos and will not be part of the Apache Kafka repo. This is in line with the approach taken for Kafka connectors.

Copying and deleting APIs are expected to be idempotent, so plugin implementations can be retried safely: a retried copy should overwrite any partially copied content, and a delete should not fail when the content is already deleted.
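The idempotency expectation for copy and delete can be illustrated with a toy in-memory store. This is a hypothetical sketch and not an implementation of `RemoteStorageManager`: segments are keyed by a plain string id and the payload is a byte array.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical in-memory store illustrating idempotent copy and delete. */
final class InMemorySegmentStoreSketch {
    private final Map<String, byte[]> segments = new ConcurrentHashMap<>();

    /** Copies the data; a retry for the same id overwrites whatever was written before. */
    void copy(String segmentId, byte[] data) {
        segments.put(segmentId, data.clone());
    }

    /** Deletes the data; deleting an id that is already gone is not an error. */
    void delete(String segmentId) {
        segments.remove(segmentId);
    }

    boolean contains(String segmentId) {
        return segments.containsKey(segmentId);
    }

    int size() {
        return segments.size();
    }
}
```

Because both operations converge to the same end state regardless of how many times they run, a caller can retry after a timeout or a broker failover without first checking what the previous attempt achieved.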

| Code Block | ||||

|---|---|---|---|---|

| ||||

package org.apache.kafka.server.log.remote.storage;

...

/**

* RemoteStorageManager provides the lifecycle of remote log segments that includes copy, fetch, and delete from remote

* storage.

* <p>

* Each upload or copy of a segment is initiated with {@link RemoteLogSegmentMetadata} containing {@link RemoteLogSegmentId}

* which is universally unique even for the same topic partition and offsets.

* <p>

* RemoteLogSegmentMetadata is stored in {@link RemoteLogMetadataManager} before and after copy/delete operations on

* RemoteStorageManager with the respective {@link RemoteLogSegmentState}. {@link RemoteLogMetadataManager} is

* responsible for storing and fetching metadata about the remote log segments in a strongly consistent manner.

* This allows RemoteStorageManager to store segments even in eventually consistent manner as the metadata is already

* stored in a consistent store.

* <p>

* All these APIs are still evolving.

*/

@InterfaceStability.Unstable

public interface RemoteStorageManager extends Configurable, Closeable {

/**

* Type of the index file.

*/

enum IndexType {

/**

* Represents offset index.

*/

Offset,

/**

* Represents timestamp index.

*/

Timestamp,

        /**
         * Represents producer snapshot index.
         */
        ProducerSnapshot,

        /**
         * Represents transaction index.
         */
        Transaction,

        /**
         * Represents leader epoch index.
         */
        LeaderEpoch,
    }

    /**
     * Copies the given {@link LogSegmentData} provided for the given {@code remoteLogSegmentMetadata}. This includes
     * log segment and its auxiliary indexes like offset index, time index, transaction index, leader epoch index, and
     * producer snapshot index.
     * <p>
     * Invoker of this API should always send a unique id as part of {@link RemoteLogSegmentMetadata#remoteLogSegmentId()}
     * even when it retries to invoke this method for the same log segment data.
     * <p>
     * This operation is expected to be idempotent. If a copy operation is retried and there is existing content already
     * written, it should be overwritten, and it should not throw {@link RemoteStorageException}.
     *
     * @param remoteLogSegmentMetadata metadata about the remote log segment.
     * @param logSegmentData           data to be copied to tiered storage.
     * @throws RemoteStorageException if there are any errors in storing the data of the segment.
     */
    void copyLogSegmentData(RemoteLogSegmentMetadata remoteLogSegmentMetadata,
                            LogSegmentData logSegmentData)
            throws RemoteStorageException;

    /**
     * Returns the remote log segment data file/object as InputStream for the given {@link RemoteLogSegmentMetadata}
     * starting from the given startPosition. The stream will end at the end of the remote log segment data file/object.
     *
     * @param remoteLogSegmentMetadata metadata about the remote log segment.
     * @param startPosition            start position of log segment to be read, inclusive.
     * @return input stream of the requested log segment data.
     * @throws RemoteStorageException          if there are any errors while fetching the desired segment.
     * @throws RemoteResourceNotFoundException the requested log segment is not found in the remote storage.
     */
    InputStream fetchLogSegment(RemoteLogSegmentMetadata remoteLogSegmentMetadata,
                                int startPosition) throws RemoteStorageException;

    /**
     * Returns the remote log segment data file/object as InputStream for the given {@link RemoteLogSegmentMetadata}
     * starting from the given startPosition. The stream will end at the smaller of endPosition and the end of the
     * remote log segment data file/object.
     *
     * @param remoteLogSegmentMetadata metadata about the remote log segment.
     * @param startPosition            start position of log segment to be read, inclusive.
     * @param endPosition              end position of log segment to be read, inclusive.
     * @return input stream of the requested log segment data.
     * @throws RemoteStorageException          if there are any errors while fetching the desired segment.
     * @throws RemoteResourceNotFoundException the requested log segment is not found in the remote storage.
     */
    InputStream fetchLogSegment(RemoteLogSegmentMetadata remoteLogSegmentMetadata,
                                int startPosition,
                                int endPosition) throws RemoteStorageException;

    /**
     * Returns the index for the respective log segment of {@link RemoteLogSegmentMetadata}.
     * <p>
     * If the index is not present (e.g. the transaction index may not exist because segments created prior to
     * version 2.8.0 will not have a transaction index associated with them),
     * throws {@link RemoteResourceNotFoundException}.
     *
     * @param remoteLogSegmentMetadata metadata about the remote log segment.
     * @param indexType                type of the index to be fetched for the segment.
     * @return input stream of the requested index.
     * @throws RemoteStorageException          if there are any errors while fetching the index.
     * @throws RemoteResourceNotFoundException the requested index is not found in the remote storage.
     * Callers of this function are encouraged to re-create the indexes from the segment
     * as the suggested way of handling this error.
     */
    InputStream fetchIndex(RemoteLogSegmentMetadata remoteLogSegmentMetadata,
                           IndexType indexType) throws RemoteStorageException;

    /**
     * Deletes the resources associated with the given {@code remoteLogSegmentMetadata}. Deletion is considered as
     * successful if this call returns successfully without any errors. It will throw {@link RemoteStorageException} if
     * there are any errors in deleting the file.
     * <p>
     * This operation is expected to be idempotent. If resources are not found, it is not expected to
     * throw {@link RemoteResourceNotFoundException} as it may be already removed from a previous attempt.
     *
     * @param remoteLogSegmentMetadata metadata about the remote log segment to be deleted.
     * @throws RemoteStorageException if there are any storage related errors occurred.
     */
    void deleteLogSegmentData(RemoteLogSegmentMetadata remoteLogSegmentMetadata) throws RemoteStorageException;
}

package org.apache.kafka.common;
...
public class TopicIdPartition {
    private final UUID topicId;
    private final TopicPartition topicPartition;

    public TopicIdPartition(UUID topicId, TopicPartition topicPartition) {
        Objects.requireNonNull(topicId, "topicId can not be null");
        Objects.requireNonNull(topicPartition, "topicPartition can not be null");
        this.topicId = topicId;
        this.topicPartition = topicPartition;
    }

    public UUID topicId() {
        return topicId;
    }

    public TopicPartition topicPartition() {
        return topicPartition;
    }
    ...
}

package org.apache.kafka.server.log.remote.storage;
...
/**
 * This represents a universally unique identifier associated to a topic partition's log segment. This will be
 * regenerated for every attempt of copying a specific log segment in
 * {@link RemoteStorageManager#copyLogSegmentData(RemoteLogSegmentMetadata, LogSegmentData)}.
 */
public class RemoteLogSegmentId implements Comparable<RemoteLogSegmentId>, Serializable {
    private static final long serialVersionUID = 1L;

    private final TopicIdPartition topicIdPartition;
    private final UUID id;

    public RemoteLogSegmentId(TopicIdPartition topicIdPartition, UUID id) {
        this.topicIdPartition = requireNonNull(topicIdPartition);
        this.id = requireNonNull(id);
    }

    /**
     * Returns TopicIdPartition of this remote log segment.
     */
    public TopicIdPartition topicIdPartition() {
        return topicIdPartition;
    }

    /**
     * Returns Universally Unique Id of this remote log segment.
     */
    public UUID id() {
        return id;
    }
    ...
}

package org.apache.kafka.server.log.remote.storage;
...
/**
 * It describes the metadata about the log segment in the remote storage.
 */
public class RemoteLogSegmentMetadata implements Serializable {

    private static final long serialVersionUID = 1L;

    /**
     * Universally unique remote log segment id.
     */
    private final RemoteLogSegmentId remoteLogSegmentId;

    /**
     * Start offset of this segment.
     */
    private final long startOffset;

    /**
     * End offset of this segment.
     */
    private final long endOffset;

    /**
     * Leader epoch of the broker.
     */
    private final int leaderEpoch;

    /**
     * Maximum timestamp in the segment.
     */
    private final long maxTimestamp;

    /**
     * Epoch time at which the respective {@link #state} is set.
     */
    private final long eventTimestamp;

    /**
     * Leader epoch vs offset for messages within this segment.
     */
    private final Map<Integer, Long> segmentLeaderEpochs;

    /**
     * Size of the segment in bytes.
     */
    private final int segmentSizeInBytes;

    /**
     * It indicates the state in which the action is executed on this segment.
     */
    private final RemoteLogSegmentState state;

    /**
     * @param remoteLogSegmentId  Universally unique remote log segment id.
     * @param startOffset         Start offset of this segment.
     * @param endOffset           End offset of this segment.
     * @param maxTimestamp        Maximum timestamp in this segment.
     * @param leaderEpoch         Leader epoch of the broker.
     * @param eventTimestamp      Epoch time at which the remote log segment is copied to the remote tier storage.
     * @param segmentSizeInBytes  Size of this segment in bytes.
     * @param state               State of the respective segment of remoteLogSegmentId.
     * @param segmentLeaderEpochs leader epochs that occurred within this segment.
     */
    public RemoteLogSegmentMetadata(RemoteLogSegmentId remoteLogSegmentId, long startOffset, long endOffset,
                                    long maxTimestamp, int leaderEpoch, long eventTimestamp,
                                    int segmentSizeInBytes, RemoteLogSegmentState state,
                                    Map<Integer, Long> segmentLeaderEpochs) {
        this.remoteLogSegmentId = remoteLogSegmentId;
        this.startOffset = startOffset;
        this.endOffset = endOffset;
        this.leaderEpoch = leaderEpoch;
        this.maxTimestamp = maxTimestamp;
        this.eventTimestamp = eventTimestamp;
        this.segmentLeaderEpochs = segmentLeaderEpochs;
        this.state = state;
        this.segmentSizeInBytes = segmentSizeInBytes;
    }

    /**
     * @return unique id of this segment.
     */
    public RemoteLogSegmentId remoteLogSegmentId() {
        return remoteLogSegmentId;
    }

    /**
     * @return Start offset of this segment (inclusive).
     */
    public long startOffset() {
        return startOffset;
    }

    /**
     * @return End offset of this segment (inclusive).
     */
    public long endOffset() {
        return endOffset;
    }

    /**
     * @return Leader epoch of the broker from where this event occurred.
     */
    public int leaderEpoch() {
        return leaderEpoch;
    }

    /**
     * @return Epoch time at which this event occurred.
     */
    public long eventTimestamp() {
        return eventTimestamp;
    }

    /**
     * @return Size of this segment in bytes.
     */
    public int segmentSizeInBytes() {
        return segmentSizeInBytes;
    }

    public RemoteLogSegmentState state() {
        return state;
    }

    public long maxTimestamp() {
        return maxTimestamp;
    }

    public Map<Integer, Long> segmentLeaderEpochs() {
        return segmentLeaderEpochs;
    }
    ...
}

package org.apache.kafka.server.log.remote.storage;
...
public class LogSegmentData {

    private final File logSegment;
    private final File offsetIndex;
    private final File timeIndex;
    private final File txnIndex;
    private final File producerIdSnapshotIndex;
    private final ByteBuffer leaderEpochIndex;

    public LogSegmentData(File logSegment, File offsetIndex, File timeIndex, File txnIndex,
                          File producerIdSnapshotIndex, ByteBuffer leaderEpochIndex) {
        this.logSegment = logSegment;
        this.offsetIndex = offsetIndex;
        this.timeIndex = timeIndex;
        this.txnIndex = txnIndex;
        this.producerIdSnapshotIndex = producerIdSnapshotIndex;
        this.leaderEpochIndex = leaderEpochIndex;
    }

    public File logSegment() {
        return logSegment;
    }

    public File offsetIndex() {
        return offsetIndex;
    }

    public File timeIndex() {
        return timeIndex;
    }

    public File txnIndex() {
        return txnIndex;
    }

    public File producerIdSnapshotIndex() {
        return producerIdSnapshotIndex;
    }

    public ByteBuffer leaderEpochIndex() {
        return leaderEpochIndex;
    }
    ...
}

|

RemoteLogMetadataManager

`RemoteLogMetadataManager` is an interface to provide the lifecycle of metadata about remote log segments with strongly consistent semantics. There is a default implementation that uses an internal topic. Users can plug in their own implementation if they intend to use another system to store remote log segment metadata.

| Code Block | ||||

|---|---|---|---|---|

| ||||

package org.apache.kafka.common.log.remote.storage;
...
/**
 * This interface provides storing and fetching remote log segment metadata with strongly consistent semantics.
 * <p>
 * This class can be plugged in to Kafka cluster by adding the implementation class as
 * <code>remote.log.metadata.manager.class.name</code> property value. There is an inbuilt implementation backed by
 * topic storage in the local cluster. This is used as the default implementation if
 * remote.log.metadata.manager.class.name is not configured.
 * </p>
 * <p>
 * <code>remote.log.metadata.manager.class.path</code> property is about the class path of the RemoteLogMetadataManager
 * implementation. If specified, the RemoteLogMetadataManager implementation and its dependent libraries will be loaded
 * by a dedicated classloader which searches this class path before the Kafka broker class path. The syntax of this
 * parameter is the same as the standard Java class path string.
 * </p>
 * <p>
 * <code>remote.log.metadata.manager.listener.name</code> property is about listener name of the local broker to which
 * it should get connected if needed by RemoteLogMetadataManager implementation. When this is configured all other
 * required properties can be passed as properties with prefix of 'remote.log.metadata.manager.listener.'
 * </p>
 * "cluster.id", "broker.id" and all the properties prefixed with "remote.log.metadata." are passed when
 * {@link #configure(Map)} is invoked on this instance.
 * <p>
 * All these APIs are still evolving.
 * <p>
 */
@InterfaceStability.Unstable
public interface RemoteLogMetadataManager extends Configurable, Closeable {

    /**
     * Stores RemoteLogSegmentMetadata with the containing RemoteLogSegmentId into RemoteLogMetadataManager.
     * <p>
     * RemoteLogSegmentMetadata is identified by RemoteLogSegmentId.
     *
     * @param remoteLogSegmentMetadata metadata about the remote log segment to be stored.
     * @throws RemoteStorageException if there are any storage related errors occurred.
     */
    void putRemoteLogSegmentData(RemoteLogSegmentMetadata remoteLogSegmentMetadata) throws RemoteStorageException;

    /**
     * Explain detail what all states this can be applied with.
     *
     * @param remoteLogSegmentMetadataUpdate
     * @throws RemoteStorageException
     */
    void updateRemoteLogSegmentMetadata(RemoteLogSegmentMetadataUpdate remoteLogSegmentMetadataUpdate) throws RemoteStorageException;

    /**
     * Fetches RemoteLogSegmentMetadata if it exists for the given topic partition containing offset and leader-epoch
     * for the offset, else returns {@link Optional#empty()}.
     *
     * @param topicIdPartition topic partition
     * @param offset           offset
     * @param epochForOffset   leader epoch for the given offset
     * @return the requested remote log segment metadata if it exists.
     * @throws RemoteStorageException if there are any storage related errors occurred.
     */
    Optional<RemoteLogSegmentMetadata> remoteLogSegmentMetadata(TopicIdPartition topicIdPartition, long offset, int epochForOffset)
            throws RemoteStorageException;

    /**
     * Returns highest log offset of topic partition for the given leader epoch in remote storage. This is used by
     * remote log management subsystem to know up to which offset the segments have been copied to remote storage for
     * a given leader epoch.
     *
     * @param topicIdPartition topic partition
     * @param leaderEpoch      leader epoch
     * @return the requested highest log offset if exists.
     * @throws RemoteStorageException if there are any storage related errors occurred.
     */
    Optional<Long> highestLogOffset(TopicIdPartition topicIdPartition, int leaderEpoch) throws RemoteStorageException;

    /**
     * Update the delete partition state of a topic partition in metadata storage. Controller invokes this method with
     * DeletePartitionUpdate having state as {@link RemoteLogState#DELETE_PARTITION_MARKED}. So, remote partition removers
     * can act on this event to clean the respective remote log segments of the partition.
     *
     * In case of default RLMM implementation, remote partition remover processes RemoteLogState#DELETE_PARTITION_MARKED
     * - sends an event with state as RemoteLogState#DELETE_PARTITION_STARTED
     * - getting all the remote log segments and deletes them.
    ...

|

RemoteLogMetadataManager

`RemoteLogMetadataManager` is an interface that manages the lifecycle of metadata about remote log segments with strongly consistent semantics. There is a default implementation that uses an internal topic. Users can plug in their own implementation if they intend to use another system to store remote log segment metadata.
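To make the shape of such a metadata store concrete, the following is a minimal in-memory sketch. It is not the interface proposed in this KIP and not the default topic-based implementation. The class `InMemoryRemoteLogMetadataSketch`, its `SegmentMetadata` type, the `String` partition key, and the method names `put` and `find` are hypothetical simplifications; it also assumes each segment belongs to a single leader epoch. It only illustrates two lookups a metadata manager needs to serve: finding the segment that contains a given offset for a leader epoch, and finding the highest offset copied for a leader epoch.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;
import java.util.TreeMap;

/** Hypothetical, simplified stand-in for a remote log metadata store (illustration only). */
public class InMemoryRemoteLogMetadataSketch {

    /** Simplified segment metadata; start and end offsets are inclusive. */
    public static final class SegmentMetadata {
        public final String segmentId;
        public final long startOffset;
        public final long endOffset;
        public final int leaderEpoch; // assumption: one leader epoch per segment

        public SegmentMetadata(String segmentId, long startOffset, long endOffset, int leaderEpoch) {
            this.segmentId = segmentId;
            this.startOffset = startOffset;
            this.endOffset = endOffset;
            this.leaderEpoch = leaderEpoch;
        }
    }

    // partition -> leader epoch -> start offset -> metadata
    private final Map<String, Map<Integer, TreeMap<Long, SegmentMetadata>>> store = new HashMap<>();

    /** Adds or overwrites the metadata of one segment. */
    public synchronized void put(String topicPartition, SegmentMetadata metadata) {
        store.computeIfAbsent(topicPartition, k -> new HashMap<>())
             .computeIfAbsent(metadata.leaderEpoch, k -> new TreeMap<>())
             .put(metadata.startOffset, metadata);
    }

    /** Returns the segment containing the offset for the given leader epoch, if any. */
    public synchronized Optional<SegmentMetadata> find(String topicPartition, long offset, int epochForOffset) {
        TreeMap<Long, SegmentMetadata> byStart = segmentsFor(topicPartition, epochForOffset);
        if (byStart == null) {
            return Optional.empty();
        }
        // The candidate is the segment with the greatest start offset <= offset.
        Map.Entry<Long, SegmentMetadata> candidate = byStart.floorEntry(offset);
        if (candidate == null || offset > candidate.getValue().endOffset) {
            return Optional.empty();
        }
        return Optional.of(candidate.getValue());
    }

    /** Returns the highest end offset stored for the given leader epoch, if any. */
    public synchronized Optional<Long> highestLogOffset(String topicPartition, int leaderEpoch) {
        TreeMap<Long, SegmentMetadata> byStart = segmentsFor(topicPartition, leaderEpoch);
        if (byStart == null || byStart.isEmpty()) {
            return Optional.empty();
        }
        long highest = Long.MIN_VALUE;
        for (SegmentMetadata metadata : byStart.values()) {
            highest = Math.max(highest, metadata.endOffset);
        }
        return Optional.of(highest);
    }

    private TreeMap<Long, SegmentMetadata> segmentsFor(String topicPartition, int leaderEpoch) {
        Map<Integer, TreeMap<Long, SegmentMetadata>> byEpoch = store.get(topicPartition);
        return byEpoch == null ? null : byEpoch.get(leaderEpoch);
    }
}
```

The `synchronized` methods make a completed `put` visible to every later read within one JVM, which is only a single-process analogue of the strongly consistent semantics described above. A real implementation has to provide that guarantee across brokers.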

| Code Block | ||||

|---|---|---|---|---|

| ||||