...

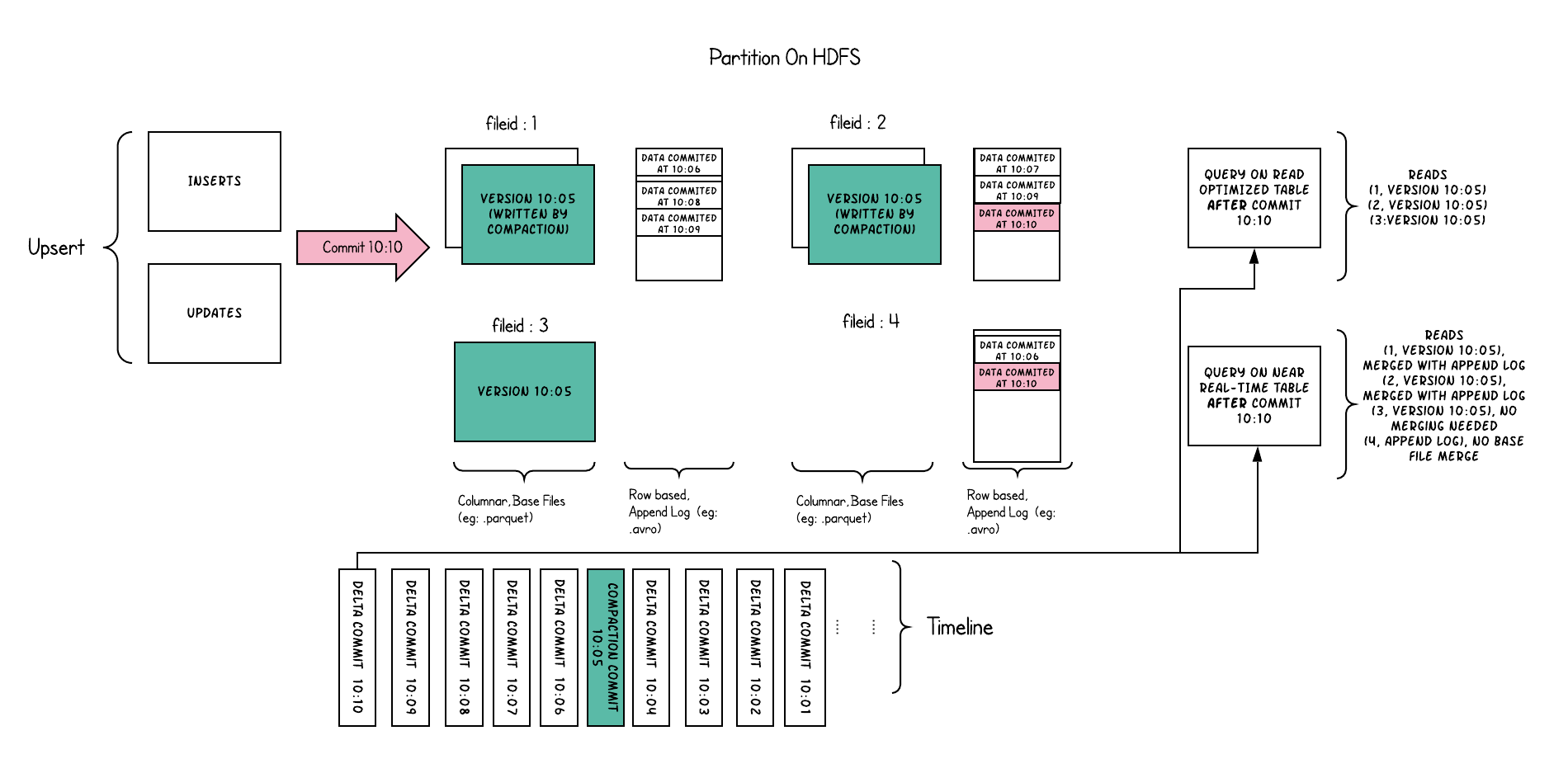

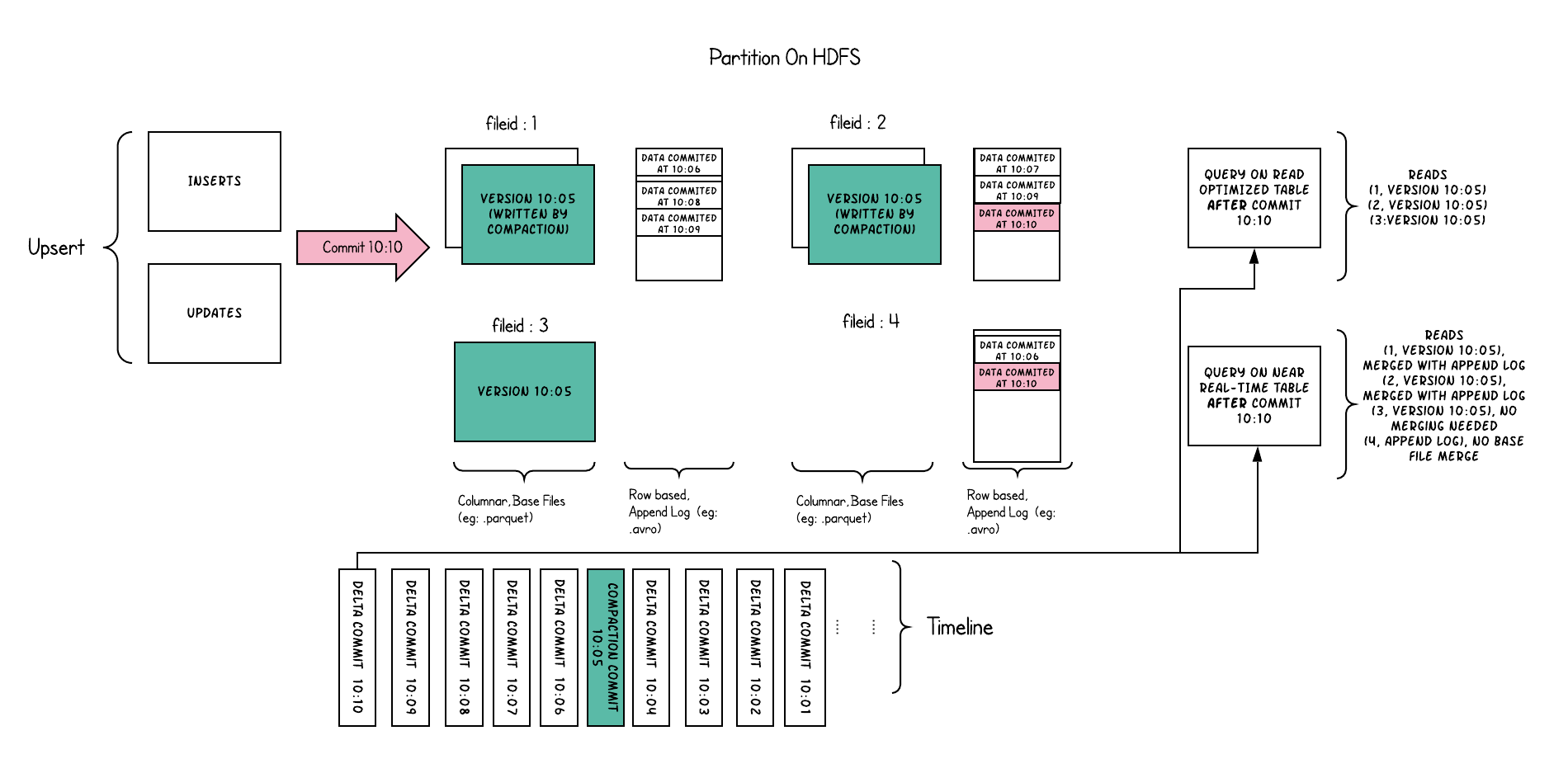

Hudi organizes a table into a folder structure under a def~table-basepath on DFS. If the table is partitioned by some columns, then there are additional def~table-partitions under the base path, which are folders containing data files for that partition, very similar to Hive tables. Each partition is uniquely identified by its def~partitionpath, which is relative to the basepath. Within each partition, files are organized into def~file-groups, uniquely identified by a def~file-id. Each file group contains several def~file-slices, where each slice contains a def~base-file (e.g: parquet) produced at a certain commit/compaction def~instant-time, along with set of def~log-files that contain inserts/updates to the base file since the base file was last written. Hudi adopts a MVCC design, where compaction action merges logs and base files to produce new file slices and cleaning action gets rid of unused/older file slices to reclaim space on DFS.

Index

...

Image AddedFig : Shows four file groups 1,2,3,4 with base and log files, with few file slices each

Image AddedFig : Shows four file groups 1,2,3,4 with base and log files, with few file slices each

Index

| Excerpt Include |

|---|

| def~index |

|---|

| def~index |

|---|

| nopanel | true |

|---|

|

...

| Excerpt Include |

|---|

| def~table-type |

|---|

| def~table-type |

|---|

| nopanel | true |

|---|

|

Copy On Write Table

def~copy-on-write (COW)

| Excerpt Include |

|---|

| def~copy-on-write (COW) |

|---|

| def~copy-on-write (COW) |

|---|

| nopanel | true |

|---|

|

Merge On Read Table

def~merge-on-read (MOR)

| Excerpt Include |

|---|

| def~merge-on-read (MOR) |

|---|

| def~merge-on-read (MOR) |

|---|

| nopanel | true |

|---|

|

Writing

Write Operations

...

| Excerpt Include |

|---|

| def~write-operation |

|---|

| def~write-operation |

|---|

| nopanel | true |

|---|

|

Compaction

| Excerpt Include |

|---|

| def~compaction |

|---|

| def~compaction |

|---|

| nopanel | true |

|---|

|

Cleaning

| Excerpt Include |

|---|

| def~cleaning |

|---|

| def~cleaning |

|---|

| nopanel | true |

|---|

|

Optimized DFS Access

Compaction

<WIP>

Cleaning

<WIP>

...

Hudi also performs several key storage management functions on the data stored in a Hudi dataset def~table. A key aspect of storing data on DFS is managing file sizes and counts and reclaiming storage space. For e.g HDFS is infamous for its handling of small files, which exerts memory/RPC pressure on the Name Node and can potentially destabilize the entire cluster. In general, query engines provide much better performance on adequately sized columnar files, since they can effectively amortize cost of obtaining column statistics etc. Even on some cloud data stores, there is often cost to listing directories with large number of small files.

Here are some ways to , Hudi writing efficiently manage manages the storage of your Hudi datasetsdata.

- The [ small file handling feature](configurations.html#compactionSmallFileSize) in feature in Hudi, profiles incoming workload and distributes inserts to existing file groups def~file-group instead of creating new file groups, which can lead to small files.Cleaner can be [configured](configurations.html#retainCommits) to clean up older file slices, more or less aggressively depending on maximum time for queries to run & lookback needed for incremental pull

- Employing a cache of the def~timeline, in the writer such that as long as the spark cluster is not spun up everytime, subsequent def~write-operations never list DFS directly to obtain list of def~file-slices in a given def~table-partition

- User can also tune the size of the [base/parquet file](configurations.html#limitFileSize), [log files](configurations.html#logFileMaxSize) & expected [compression ratio](configurations.html#parquetCompressionRatio) def~base-file as a fraction of def~log-files & expected compression ratio, such that sufficient number of inserts are grouped into the same file group, resulting in well sized base files ultimately.

- Intelligently tuning the [ bulk insert parallelism](configurations.html#withBulkInsertParallelism), can again in nicely sized initial file groups. It is in fact critical to get this right, since the file groups once created cannot be deleted, but simply expanded as explained before.

- For workloads with heavy updates, the [merge-on-read storage](concepts.html#merge-on-read-storage) provides a nice mechanism for ingesting quickly into smaller files and then later merging them into larger base files via compaction.

Querying

<WIP>

Snapshot Queries

<WIP>

Incremental Queries

<WIP>

Read Optimized Queries

<WIP>

Hive Integration

Querying

| Excerpt Include |

|---|

| def~query-type |

|---|

| def~query-type |

|---|

| nopanel | true |

|---|

|

Snapshot Queries

| Excerpt Include |

|---|

| def~snapshot-query |

|---|

| def~snapshot-query |

|---|

| nopanel | true |

|---|

|

Incremental Queries

| Excerpt Include |

|---|

| def~incremental-query |

|---|

| def~incremental-query |

|---|

| nopanel | true |

|---|

|

Read Optimized Queries

| Excerpt Include |

|---|

| def~read-optimized-query |

|---|

| def~read-optimized-query |

|---|

| nopanel | true |

|---|

|

<wip>