...

KIP-500: Replace ZooKeeper with a Self-Managed Metadata Quorum (Accepted)

Status

Current state: Under DiscussionAccepted

Discussion thread: TBD here

JIRA:

| Jira | ||||||

|---|---|---|---|---|---|---|

|

...

Note that this protocol assumes something like the Kafka v2 log message format. It is not compatible with older formats because records do not include the leader epoch information that is needed for log reconciliation. We have intentionally avoided any make no assumption about the representation of the physical log and its semantics. This format and minimal assumptions about it's logical structure. This makes it usable both for internal metadata replication and (eventually) partition data replication.

...

- Specification of the replication protocol and its semantics

- Specification of the log structure and message schemas that will be used to maintain quorum state

- Tooling support /metrics to manage the quorum

...

- view quorum health

The following aspects are specified in follow-up proposals:

- Log compaction: see KIP-630

- Controller use of the quorum and message specification: see KIP-631

- Quorum reassignment: see KIP-642

At a high level, this proposal treats the metadata log as a topic with a single partition. The benefit of this is that many of the existing APIs can be reused. For example, it allows users to use a consumer to read the contents of the metadata log for debugging purposes. We are also trying to pave the way for normal partition replication through Raft as well as eventually supporting metadata sharding with multiple partitions. In our prototype implementation, we have assumed the name __cluster_metadata for this topic, but this is not a formal part of this proposal.

...

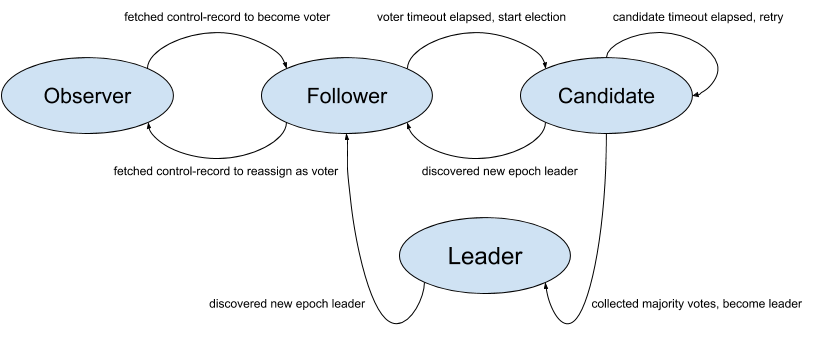

State Machine

Configurations

bootstrapquorum.servers: Defines the set of servers to contact to get quorum information. This does not have to address quorum members directly. For example, it could be a VIP.quorum.voters:Defines the ids of the expected voters. This is only required when bootstrapping the cluster for the first time. As long as the cluster (hence the quorum) has started then new brokers would rely on DiscoverBrokers (described below) to discover the current voters of the quorum.voters: This is a connection map which contains the IDs of the voters and their respective endpoint. We use the following format for each voter in the list{broker-id}@{broker-host):{broker-port}. For example, `quorum.voters=1@kafka-1:9092, 2@kafka-2:9092, 3@kafka-3:9092`.quorum.fetch.timeout.ms:Maximum time without a successful fetch from the current leader before a new election is started.quorum.election.timeout.ms:Maximum time without collected a majority of votes during the candidate state before a new election is retried.quorum.election.backoff.max.ms:Maximum exponential backoff time (based on the number if retries) after an election timeout, before a new election is triggered.quorum.request.timeout.ms:Maximum time before a pending request is considered failed and the connection is dropped.quorum.retry.backoff.ms:Initial delay between request retries. This config and the one below is used for retriable request errors or lost connectivity and are different from the election.backoff configs above.quorum.retry.backoff.max.ms: Max delay between requests. Backoff will increase exponentially beginning fromquorum.retry.backoff.ms(the same as in KIP-580).broker.id: The existing broker id config shall be used as the voter id in the Raft quorum.

...

Records are uniquely defined by their offset in the log and the epoch of the leader that appended the record. The key and value schemas will be defined by the controller in a separate KIP; here we treat them as arbitrary byte arrays. However, we do require the ability to append "control records" to the log which are reserved only for use within the Raft quorum (e.g. this enables quorum reassignment).

Kafka's current The v2 message format version supports everything we need, so we will assume thathas all the assumed/required properties already.

Quorum State

We use a separate file to store the current state of the quorum. This is both for convenience and correctness. It helps us to initialize the quorum state after a restart, but we also need it in order to know which broker we have voted for in a given election. The Raft protocol does not allow voters to change their votes, so we have to preserve this state across restarts. Below is the schema for this quorum-state file.

| Code Block |

|---|

{

"type": "data",

"name": "QuorumStateMessage",

"validVersions": "0",

"flexibleVersions": "0+",

"fields": [

{"name": "ClusterIdLeaderId", "type": "stringint32", "versions": "0+"},

{"name": "LeaderIdLeaderEpoch", "type": "int32", "versions": "0+"},

{"name": "LeaderEpochVotedId", "type": "int32", "versions": "0+"},

{"name": "VotedIdAppliedOffset", "type": "int32", "versions": "0+"},

{"name": "AppliedOffset", "type": "int64int64", "versions": "0+"},

{"name": "CurrentVoters", "type": "[]Voter", "versions": "0+", "fields": [

{"name": "VoterId", "type": "int32", "versions": "0+"}

]},

{"name": "TargetVoters", "type": "[]Voter", "versions": "0+", "nullableVersions": "0+", "fields": [

{"name": "VoterId", "type": "int32", "versions": "0+"}

]}

]

} |

Below we define the purpose of these fields:

ClusterIdLeaderId: the clusterId, which is persisted in the log and used to ensure that we do not mistakenly interact with the wrong cluster.LeaderId: this is the last this is the last known leader of the quorum. A value of -1 indicates that there is no leader.LeaderEpoch: this is the last known leader epoch. This is initialized to 0 when the quorum is bootstrapped and should never be negative.VotedId: indicates the id of the broker that this replica voted for in the current epoch. A value of -1 indicates that the replica has not (or cannot) vote.AppliedOffset: Reflects the maximum offset that has been applied to this quorum state. This is used for log recovery. The broker must scan from this point on initialization to detect updates to this file.CurrentVoters: the latest known voters for this quorum.TargetVoters: the latest known target voters if the quorum is being reassigned.

The use of this file will be described in more detail below as we describe the protocol.

Note one key difference of this internal topic compared with other topics is that we should always enforce fsync upon appending to local log to guarantee Raft algorithm correctness, i.e. we are dropping group flushing for this topic. In practice, we could still optimize the fsync latency in the following way: 1) the client requests to the leader are expected to have multiple entries, 2) the fetch response from the leader can contain multiple entries, and 3) the leader can actually defer fsync until it knows "quorum.size majority - 1" has get to a certain entry offset. We will discuss a bit more about these in the following sectionsleave this potential optimization as a future work out of the scope of this KIP design.

Other log-related metadata such as log start offset, recovery point, HWM, etc are still stored in the existing checkpoint file just like any other Kafka topic partitions.

Additionally, we make use of the current meta.properties file, which caches the value from broker.id in the configuration as well as the discovered clusterId. As is the case today, the configured broker.id does not match the cached value, then the broker will refuse to start. Similarly, the cached clusterId is included when interacting with the quorum APIs. If we connect to a cluster with a different clusterId, then the broker will receive a fatal error and shutdown.

...

The key functionalities of any consensus protocol are leader election and data replication. The protocol for these two functionalities consists of 5 four core RPCs:

- Vote: Sent by a voter to initiate an election.

- BeginQuorumEpoch: Used by a new leader to inform the voters of its status.

- EndQuorumEpoch: Used by a leader to gracefully step down and allow a new election.

- Fetch: Sent by voters and observers to the leader in order to replicate the log.

- DiscoverBrokers: Used to discover or view current quorum state when bootstrapping a broker.

There are also two administrative APIs:

We are also adding one new API to view the health/status of quorum replication:

- DescribeQuorum:

- AlterPartitionReassignments: Administrative API to change the members of the quorum.

- DescribeQuorum: Administrative API to list the replication state (i.e. lag) of the voters. More of the details can also be found in KIP-642: Dynamic quorum reassignment#DescribeQuorum.

Before getting into the details of these APIs, there are a few common attributes worth mentioning upfront:

- The core requests have a field for the clusterId. This is validated when a request is received to ensure that misconfigured brokers will not cause any harm to the cluster.

- All requests save

Votehave a field for the leader epoch. Voters and leaders are always expected to ensure that the request epoch is consistent with its own and return an error if it is not. - We piggyback current leader and epoch information on all responses. This reduces latency to discover leader changes.

- Although this KIP only expects a single-raft quorum implementation initially, we see it is beneficial to keep the door open for multi-raft architecture in the long term. This leaves the door open for use cases such as sharded controller or general quorum based topic replication.

...

- INVALID_CLUSTER_ID: The request either included indicates a clusterId which does not match the one expected by the leader or failed to include a clusterId when one was expectedvalue cached in

meta.properties. - FENCED_LEADER_EPOCH: The leader epoch in the request is smaller than the latest known to the recipient of the request.

- UNKNOWN_LEADER_EPOCH: The leader epoch in the request is larger than expected. Note that this is an unexpected error. Unlike normal Kafka log replication, it cannot happen that the follower receives the newer epoch before the leader.

- OFFSET_OUT_OF_RANGE: Used in the

FetchQuorumRecordsFetchAPI to indicate that the follower has fetched from an invalid offset and should truncate to the offset/epoch indicated in the response. - NOT_LEADER_FOR_PARTITION: Used in

DescribeQuorumandAlterPartitionReassignmentsto indicate that the recipient of the request is not the current leader. - INVALID_QUORUM_STATE: This error code is reserved for cases when a request conflicts with the local known state. For example, if two separate nodes try to become leader in the same epoch, then it indicates an illegal state change.

- INCONSISTENT_VOTER_SET: Used when the request contains inconsistent membership.

...

- If it fails to receive a

FetchQuorumRecordsResponseFetchResponsefrom the current leader before expiration ofquorum.fetch.timeout.ms - If it receives a

EndQuorumEpochrequest from the current leader - If it fails to receive a majority of votes before expiration of

quorum.election.timeout.msafter declaring itself a candidate.

...

New elections are always delayed by a random time which is bounded by quorum.election.backoff.max.ms. This is part of the Raft protocol and is meant to prevent gridlocked elections. For example, with a quorum size of three, if only two voters are online, then we have to prevent repeated elections in which each voter declares itself a candidate and refuses to vote for the other.

Request Schema

Leader Progress Timeout

In the traditional push-based model, when a leader is disconnected from the quorum due to network partition, it will start a new election to learn the active quorum or form a new one immediately. In the pull-based model, however, say a new leader has been elected with a new epoch and everyone has learned about it except the old leader (e.g. that leader was not in the voters anymore and hence not receiving the BeginQuorumEpoch as well), then that old leader would not be notified by anyone about the new leader / epoch and become a pure "zombie leader", as there is no regular heartbeats being pushed from leader to the follower. This could lead to stale information being served to the observers and clients inside the cluster.

To resolve this issue, we will piggy-back on the "quorum.fetch.timeout.ms" config, such that if the leader did not receive Fetch requests from a majority of the quorum for that amount of time, it would begin a new election and start sending VoteRequest to voter nodes in the cluster to understand the latest quorum. If it couldn't connect to any known voter, the old leader shall keep starting new elections and bump the epoch. And if the returned response includes a newer epoch leader, this zombie leader would step down and becomes a follower. Note that the node will remain a candidate until it finds that it has been supplanted by another voter, or win the election eventually.

As we know from the Raft literature, this approach could generate disruptive voters when network partitions happen on the leader. The partitioned leader will keep increasing its epoch, and when it eventually reconnects to the quorum, it could win the election with a very large epoch number, thus reducing the quorum availability due to extra restoration time. Considering this scenario is rare, we would like to address it in a follow-up KIP.

Request Schema

| Code Block |

|---|

{

"apiKey": N,

"type": "request",

"name": "VoteRequest",

"validVersions": "0",

"flexibleVersions": "0+",

"fields": [

|

| Code Block |

{ "apiKey": N, "type": "request", "name": "VoteRequest", "validVersions": "0", "flexibleVersion": "0+", "fields": [ {"name": "ClusterId", "type": "string", "versions": "0+"}, { "name": "Topics", "type": "[]VoteTopicRequest", "versions": "0+", "fields": [ { "name": "TopicNameClusterId", "type": "string", "versions": "0+", "entityTypenullableVersions": "topicName0+", "about"default": "The topic name." null"}, { "name": "PartitionsTopics", "type": "[]VotePartitionRequestTopicData", "versions": "0+", "fields": [ { "name": "PartitionIndexTopicName", "type": "int32string", "versions": "0+", "entityType": "topicName", "about": "The partitiontopic indexname." }, {{ "name": "CandidateEpochPartitions", "type": "int32[]PartitionData", "versions": "0+", "fields": [ { ""name": "PartitionIndex", "type": "int32", "versions": "0+", "about": "The partition index." }, { "name": "CandidateEpoch", "type": "int32", "versions": "0+", "about": "The bumped epoch of the candidate sending the request"}, { {"name": "CandidateId", "type": "int32", "versions": "0+", "about": "The ID of the voter sending the request"}, {{ "name": "LastOffsetEpoch", "type": "int32", "versions": "0+", "about": "The epoch of the last record written to the metadata log"}, { {"name": "LastOffset", "type": "int64", "versions": "0+", "about": "The offset of the last record written to the metadata log"} ] } }] } ] } |

As noted above, the request follows the convention of other batched partition APIs, such as Fetch and Produce. Initially we expect that only a single topic partition will be included, but this leaves the door open for "multi-raft" support.

...

| Code Block |

|---|

{

"apiKey": N,

"type": "response",

"name": "VoteResponse",

"validVersions": "0",

"flexibleVersions": "0+",

"fields": [

{ "name": "ErrorCode", "type": "int16", "versions": "0+",

"about": "The top level error code."},

{ "name": "Topics", "type": "[]VoteTopicResponseTopicData",

"versions": "0+", "fields": [

{ "name": "TopicName", "type": "string", "versions": "0+", "entityType": "topicName",

"about": "The topic name." },

{ "name": "Partitions", "type": "[]VotePartitionResponsePartitionData",

"versions": "0+", "fields": [

{ "name": "PartitionIndex", "type": "int32", "versions": "0+",

"about": "The partition index." },

{ {"name": "ErrorCode", "type": "int16", "versions": "0+"},

{{ "name": "LeaderId", "type": "int32", "versions": "0+",

"about": "The ID of the current leader or -1 if the leader is unknown."},

{{ "name": "LeaderEpoch", "type": "int32", "versions": "0+",

"about": "The latest known leader epoch"},

{{ "name": "VoteGranted", "type": "bool", "versions": "0+",

"about": "True if the vote was granted and false otherwise"}

]

}

}]

}

]

} |

Vote Request Handling

Note we need to respect the extra condition on leadership: the candidate’s log is at least as up-to-date as any other log in the majority who vote for it. Raft determines which of two logs is more "up-to-date" by comparing the offset and epoch of the last entries in the logs. If the logs' last entry have different epochs, then the log with the later epoch is more up-to-date. If the logs end with the same epoch, then whichever log is longer is more up-to-date. The Vote request includes information about the candidate’s log, and the voter denies its vote if its own log is more up-to-date than that of the candidate.

...

When a voter handles a Vote request:

- Check whether the clusterId in the request matches the cached local value (if present). If not, then reject the vote and return INVALID_CLUSTER_ID.

- First it checks whether an epoch larger than the candidate epoch from the request is known. If so, the vote First it checks whether an epoch larger than the candidate epoch from the request is known. If so, the vote is rejected.

- Next it checks if it has voted for that candidate epoch already. If it has, then only grant the vote if the candidate id matches the id that was already voted. Otherwise, the vote is rejected.

- If the candidate epoch is larger than the currently known epoch:

- Check whether

CandidateIdis one of the expected voters. If not, then reject the vote. The candidate in this case may have been part of an incomplete voter reassignment, so the voter should accept the epoch bump and itself begin a new election. - Check that the candidate's log is at least as up-to-date as it (see above for the comparison rules). If yes, then grant that vote by first updating the quorum-state file, and then returning the response with

voteGrantedto yes; otherwise rejects that request with the response.

- Check whether

...

Note that only voters receive the BeginQuorumEpoch request: observers will discover the new leader through either the DiscoverBrokers or FetchQuorumRecords APIs. For example, the old leader the Fetch API. If a non-leader receives a Fetch request, it would return an error code in FetchQuorumRecords response the response indicating that it is no longer the leader and it will also encode the current known leader id / epoch as well, then the observers can start fetching from the new leader. In case the old leader does not know who's the new leader, observers can still fallback to DiscoverBrokers request to discover the new leaderUpon initialization, an observer will send a Fetch request to any of the voters at random until the new leader is found.

Request Schema

| Code Block |

|---|

{

"apiKey": N,

"type": "request",

"name": "BeginQuorumEpochRequest",

"validVersions": "0",

"fields": [

{ {"name": "ClusterId", "type": "string", "versions": "0+", "nullableVersions": "0+", "default": "null"},

{ "name": "Topics", "type": "[]BeginQuorumTopicRequestTopicData",

"versions": "0+", "fields": [

{ "name": "TopicName", "type": "string", "versions": "0+", "entityType": "topicName",

"about": "The topic name." },

{ "name": "Partitions", "type": "[]BeginQuorumPartitionRequestPartitionData",

""versions": "0+", "fields": [

{ "name": "PartitionIndex", "type": "int32", "versions": "0+",

"about": "The partition index." },

{ {"name": "LeaderId", "type": "int32", "versions": "0+",

"about": "The ID of the newly elected leader"},

{ {"name": "LeaderEpoch", "type": "int32", "versions": "0+",

"about": "The epoch of the newly elected leader"}

]

}

]}

]

} |

Response Schema

| Code Block |

|---|

{

"apiKey": N,

"type": "response",

"name": "BeginQuorumEpochResponse",

"validVersions": "0",

"fields": [

{ {"name": "ErrorCode", "type": "int16", "versions": "0+"},

"about": "The top level error code."},

{ "name": "Topics", "type": "[]BeginQuorumTopicResponseTopicData",

"versions": "0+", "fields": [

{ "name": "TopicName", "type": "string", "versions": "0+", "entityType": "topicName",

"about": "The topic name." },

{ "name": "Partitions", "type": "[]BeginQuorumPartitionResponsePartitionData",

"versions": "0+", "fields": [

{ {"name": "ErrorCodePartitionIndex", "type": "int16int32", "versions": "0+"},

"about": "The partition index." },

{ "name": "LeaderIdErrorCode", "type": "int32int16", "versions": "0+"},

"{ "name": "LeaderId", "type": "int32", "versions": "0+",

"about": "The ID of the current leader or -1 if the leader is unknown."},

{ {"name": "LeaderEpoch", "type": "int32", "versions": "0+",

"about": "The latest known leader epoch"}

]

}

]}

]

} |

BeginQuorumEpoch Request Handling

A voter will accept a BeginQuorumEpoch if its leader epoch is greater than or equal to the current known epoch so long as it doesn't conflict with previous knowledge. For example, if the leaderId for epoch 5 was known to be A, then a voter will reject a BeginQuorumEpoch request from a separate voter B from the same epoch. If the epoch is less than the known epoch, the request is rejected.

As described in the section on Cluster Bootstrapping, a broker will fail if it receives a BeginQuorumEpoch with a clusterId which is inconsistent from the cached value.

As soon as a broker accepts a BeginQuorumEpoch request, it will transition to a follower state and begin sending FetchQuorumRecords Fetch requests to the new leader.

...

If the response contains no errors, then the leader will record the follower in memory as having endorsed the election. The leader will continue sending BeginQuorumEpoch to each known voter until it has received its endorsement. This ensures that a voter that is partitioned from the network will be able to discover the leader quickly after the partition is restored. An endorsement from an existing voter may also be inferred through a received FetchQuorumRecords Fetch request with the new leader's epoch even if the BeginQuorumEpoch request was never received.

If the error code indicates that voter's known leader epoch is larger (i.e. if the error is FENCED_LEADER_EPOCH), then the voter will update quorum-state and become a follower of that leader and begin sending FetchQuorumRecords Fetch requests.

EndQuorumEpoch

The EndQuorumEpoch API is used by a leader to gracefully step down so that an election can be held immediately without waiting for the election timeout. The primary use case for this is to enable graceful shutdown. If the shutting down voter is either an active current leader or a candidate if there is an election in progress, then this request will be sent. It is also used when the leader needs to be removed from the quorum following an AlterPartitionReassignments request.

The EndQuorumEpochRequest will be sent to all voters in the quorum. Inside each request, leader will define the list of preferred successors sorted by each voter's current replicated offset in descending order. Based on the priority of the preferred successors, each voter will choose the corresponding delayed election time so that the most up-to-date voter has a higher chance to be elected. If the node's priority is highest, it will become candidate immediately instead of waiting for next polland not wait for the election timeout. For a successor with priority N > 0, the next election timeout will be computed as:

...

| Code Block |

|---|

{

"apiKey": N,

"type": "request",

"name": "EndQuorumEpochRequest",

"validVersions": "0",

"fields": [

{ "name": "ClusterId", "type": "string", "versions": "0+", "nullableVersions": "0+", "default": "null"},

{ "name": "Topics", "type": "[]EndQuorumTopicRequestTopicData",

"versions": "0+", "fields": [

{ "name": "TopicName", "type": "string", "versions": "0+", "entityType": "topicName",

"about": "The topic name." },

{ "name": "Partitions", "type": "[]EndQuorumPartitionRequestPartitionData",

"versions": "0+", "fields": [

{ "{ "name": "PartitionIndex", "type": "int32", "versions": "0+",

"about": "The partition index." },

{ {"name": "ReplicaId", "type": "int32", "versions": "0+",

"about": "The ID of the replica sending this request"},

{ {"name": "LeaderId", "type": "int32", "versions": "0+",

"about": "The current leader ID or -1 if there is a vote in progress"},

{ {"name": "LeaderEpoch", "type": "int32", "versions": "0+",

"about": "The current epoch"},

{ "name": "PreferredSuccessors", "type": "[]int32", "versions": "0+",

"about": "A sorted list of preferred successors to start the election"}

]

}

]}

]

} |

Note that LeaderId and ReplicaId will be the same if the leader has been voted. If the replica is a candidate in a current election, then LeaderId will be -1.

...

| Code Block |

|---|

{

"apiKey": N,

"type": "response",

"name": "EndQuorumEpochResponse",

"validVersions": "0",

"fields": [

{ {"name": "ErrorCode", "type": "int16", "versions": "0+"},

"about": "The top level error code."},

{ "name": "Topics", "type": "[]EndQuorumTopicResponseTopicData",

"versions": "0+", "fields": [

{ "name": "TopicName", "type": "string", "versions": "0+", "entityType": "topicName",

"about": "The topic name." },

{ "name": "Partitions", "type": "[]EndQuorumPartitionResponsePartitionData",

"versions": "0+", "fields": [

{ {"name": "ErrorCodePartitionIndex", "type": "int16int32", "versions": "0+"},

{"nameabout": "LeaderId", "type": "int32", "The partition index." },

{ "name": "ErrorCode", "type": "int16", "versions": "0+"},

{ "name": "LeaderId", "type": "int32", "versions": "0+",

"about": "The ID of the current leader or -1 if the leader is unknown."},

{ {"name": "LeaderEpoch", "type": "int32", "versions": "0+",

"about": "The latest known leader epoch"}

]

}

} ]}

]

} |

EndQuorumEpoch Request Handling

...

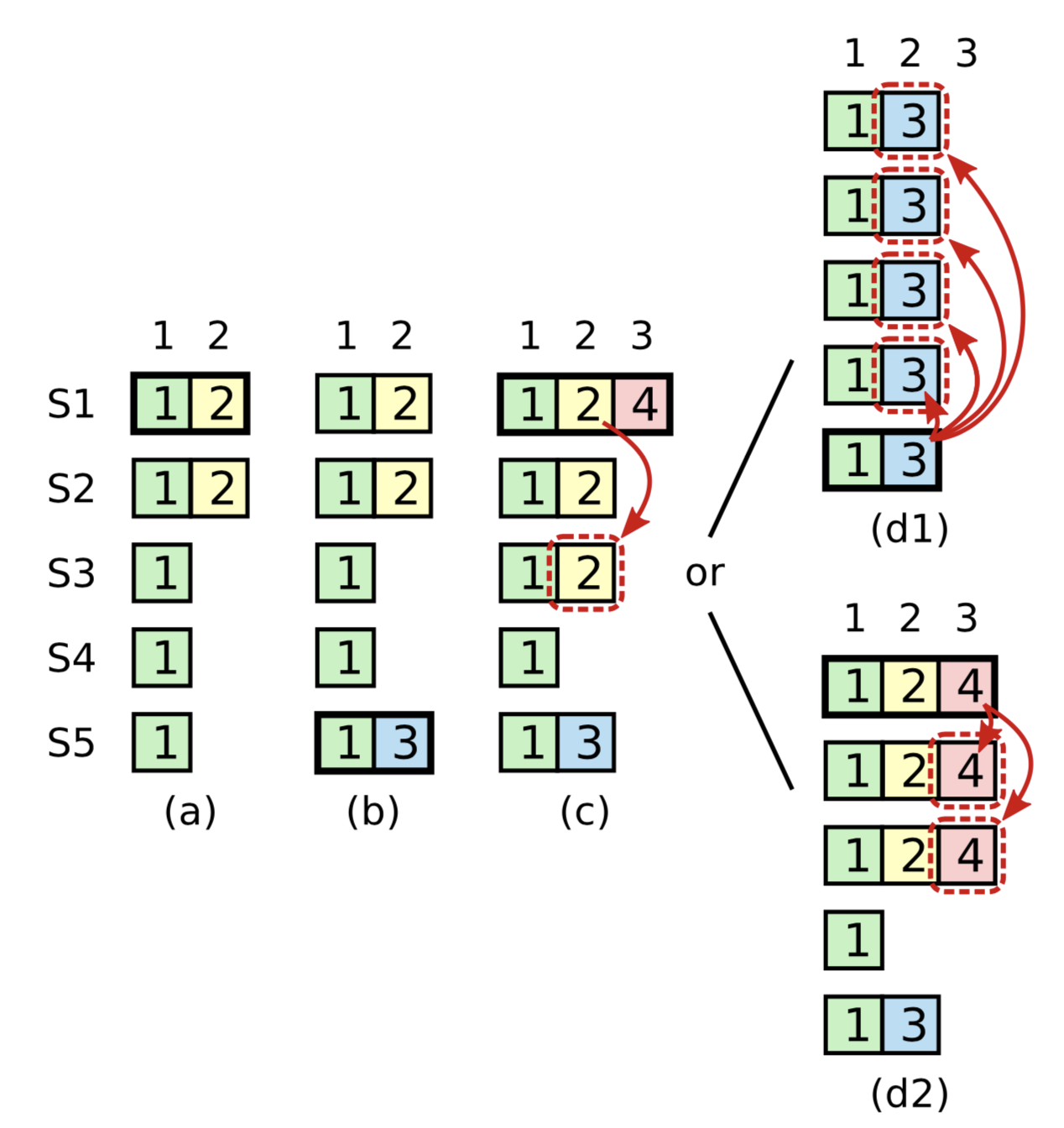

Extra condition on commitment: The Raft protocol requires the leader to only commit entries from any previous epoch if the same leader has already successfully replicated an entry from the current epoch. Kafka's ISR replication protocol suffers from a similar problem and handles it by not advancing the high watermark until the leader is able to write a message with its own epoch. The diagram below taken from the Raft dissertation illustrates the scenario:

The problem concerns the conditions for commitment and leader election. In this diagram, S1 is the initial leader and writes "2," but fails to commit it to all replicas. The leadership moves to S5 which writes "3," but also fails to commit it. Leadership then returns to S1 which proceeds to attempt to commit "2." Although "2" is successfully written to a majority of the nodes, the risk is that S5 could still become leader, which would lead to the truncation of "2" even though it is present on a majority of nodes.

...

Current Leader Discovery: This proposal also extends the Fetch response to include a separate field for the receiver of the request to indicate the current leader. This is intended to improve the latency to find the current leader since otherwise a replica would need additional round trips. When a broker is restarted, it will rely on the Fetch protocol to find the current leader.

We believe that using the same mechanism would be helpful for normal partitions as well since consumers have to use the Metadata API to find the current leader after received a NOT_LEADER_FOR_PARTITION error. However, this is outside the scope of this proposal.

...

| Code Block |

|---|

{

"apiKey": 1,

"type": "request",

"name": "FetchRequest",

"validVersions": "0-12",

"flexibleVersions": "12+",

"fields": [

{ "name": "ReplicaId", "type": "int32// ---------- Start new field ----------

{ "name": "ClusterId", "type": "string", "versions" "12+", "nullableVersions": "12+", "default": "0null", "taggedVersions": "12+", "tag": 0,

"about": "The brokerclusterId IDif ofknown. theThis follower,is ofused -1to ifvalidate thismetadata requestfetches isprior fromto abroker consumerregistration." },

// ---------- End new field ----------

{ "name": "MaxWaitTimeMsReplicaId", "type": "int32", "versions": "0+",

"about": "The maximumbroker timeID inof milliseconds to the follower, of -1 if this request is from a consumer." },

{ "name": "MaxWaitTimeMs", "type": "int32", "versions": "0+",

"about": "The maximum time in milliseconds to wait for the response." },

{ "name": "MinBytes", "type": "int32", "versions": "0+",

"about": "The minimum bytes to accumulate in the response." },

{ "name": "MaxBytes", "type": "int32", "versions": "3+", "default": "0x7fffffff", "ignorable": true,

"about": "The maximum bytes to fetch. See KIP-74 for cases where this limit may not be honored." },

{ "name": "IsolationLevel", "type": "int8", "versions": "4+", "default": "0", "ignorable": false,

"about": "This setting controls the visibility of transactional records. Using READ_UNCOMMITTED (isolation_level = 0) makes all records visible. With READ_COMMITTED (isolation_level = 1), non-transactional and COMMITTED transactional records are visible. To be more concrete, READ_COMMITTED returns all data from offsets smaller than the current LSO (last stable offset), and enables the inclusion of the list of aborted transactions in the result, which allows consumers to discard ABORTED transactional records" },

{ "name": "SessionId", "type": "int32", "versions": "7+", "default": "0", "ignorable": false,

"about": "The fetch session ID." },

{ "name": "SessionEpoch", "type": "int32", "versions": "7+", "default": "-1", "ignorable": false,

"about": "The fetch session epoch, which is used for ordering requests in a session" },

{ "name": "Topics", "type": "[]FetchableTopic", "versions": "0+",

"about": "The topics to fetch.", "fields": [

{ "name": "Name", "type": "string", "versions": "0+", "entityType": "topicName",

"about": "The name of the topic to fetch." },

{ "name": "FetchPartitions", "type": "[]FetchPartition", "versions": "0+",

"about": "The partitions to fetch.", "fields": [

{ "name": "PartitionIndex", "type": "int32", "versions": "0+",

"about": "The partition index." },

{ "name": "CurrentLeaderEpoch", "type": "int32", "versions": "9+", "default": "-1", "ignorable": true,

"about": "The current leader epoch of the partition." },

{ "name": "FetchOffset", "type": "int64", "versions": "0+",

"about": "The message offset." },

// ---------- Start new field ----------

{ "name": "FetchEpochLastFetchedEpoch", "type": "int32", "versions": "12+", "default": "-1", "taggedVersions": "12+", "tag": 0,

"about": "The epoch of the last replicated record"},

// ---------- End new field ----------

{ "name": "LogStartOffset", "type": "int64", "versions": "5+", "default": "-1", "ignorable": false,

"about": "The earliest available offset of the follower replica. The field is only used when the request is sent by the follower."},

{ "name": "MaxBytes", "type": "int32", "versions": "0+",

"about": "The maximum bytes to fetch from this partition. See KIP-74 for cases where this limit may not be honored." }

]}

]},

{ "name": "Forgotten", "type": "[]ForgottenTopic", "versions": "7+", "ignorable": false,

"about": "In an incremental fetch request, the partitions to remove.", "fields": [

{ "name": "Name", "type": "string", "versions": "7+", "entityType": "topicName",

"about": "The partition name." },

{ "name": "ForgottenPartitionIndexes", "type": "[]int32", "versions": "7+",

"about": "The partitions indexes to forget." }

]},

{ "name": "RackId", "type": "string", "versions": "11+", "default": "", "ignorable": true,

"about": "Rack ID of the consumer making this request"}

]

} |

...

| Code Block |

|---|

{

"apiKey": 1,

"type": "response",

"name": "FetchResponse",

"validVersions": "0-12",

"flexibleVersions": "12+",

"fields": [

{ "name": "ThrottleTimeMs", "type": "int32", "versions": "1+", "ignorable": true,

"about": "The duration in milliseconds for which the request was throttled due to a quota violation, or zero if the request did not violate any quota." },

{ "name": "ErrorCode", "type": "int16", "versions": "7+", "ignorable": false,

"about": "The top level response error code." },

{ "name": "SessionId", "type": "int32", "versions": "7+", "default": "0", "ignorable": false,

"about": "The fetch session ID, or 0 if this is not part of a fetch session." },

{ "name": "Topics", "type": "[]FetchableTopicResponse", "versions": "0+",

"about": "The response topics.", "fields": [

{ "name": "Name", "type": "string", "versions": "0+", "entityType": "topicName",

"about": "The topic name." },

{ "name": "Partitions", "type": "[]FetchablePartitionResponse", "versions": "0+",

"about": "The topic partitions.", "fields": [

{ "name": "PartitionIndex", "type": "int32", "versions": "0+",

"about": "The partiitonpartition index." },

{ "name": "ErrorCode", "type": "int16", "versions": "0+",

"about": "The error code, or 0 if there was no fetch error." },

{ "name": "HighWatermark", "type": "int64", "versions": "0+",

"about": "The current high water mark." },

{ "name": "LastStableOffset", "type": "int64", "versions": "4+", "default": "-1", "ignorable": true,

"about": "The last stable offset (or LSO) of the partition. This is the last offset such that the state of all transactional records prior to this offset have been decided (ABORTED or COMMITTED)" },

{ "name": "LogStartOffset", "type": "int64", "versions": "5+", "default": "-1", "ignorable": true,

"about": "The current log start offset." },

// ---------- Start new field ----------

{ "name": "NextOffsetAndEpochDivergingEpoch", "type": "OffsetAndEpochEpochEndOffset",

"versions": "12+", "taggedVersions": "12+", "tag": 0, "fields": [

{ "nameabout": "NextFetchOffset", "type": "int64", "versions": "0+",

"about": "If set, this is the offset that the follower should truncate to"}In case divergence is detected based on the `LastFetchedEpoch` and `FetchOffset` in the request, this field indicates the largest epoch and its end offset such that subsequent records are known to diverge",

"fields": [

{ "name": "NextFetchOffsetEpochEpoch", "type": "int32", "versions": "0+"12+", "default": "-1" },

{ "aboutname": "The epoch of the next offset in case the follower needs to truncate"}, EndOffset", "type": "int64", "versions": "12+", "default": "-1" }

]},

{ "name": "CurrentLeader", "type": "LeaderIdAndEpoch",

"versions": "12+", "taggedVersions": "12+", "tag": 1, fields": [

{ "name": "LeaderId", "type": "int32", "versions": "0+",

"about": "The ID of the current leader or -1 if the leader is unknown."},

{ "name": "LeaderEpoch", "type": "int32", "versions": "0+",

"about": "The latest known leader epoch"}

]},

// ---------- End new field ----------

{ "name": "Aborted", "type": "[]AbortedTransaction", "versions": "4+", "nullableVersions": "4+", "ignorable": false,

"about": "The aborted transactions.", "fields": [

{ "name": "ProducerId", "type": "int64", "versions": "4+", "entityType": "producerId",

"about": "The producer id associated with the aborted transaction." },

{ "name": "FirstOffset", "type": "int64", "versions": "4+",

"about": "The first offset in the aborted transaction." }

]},

{ "name": "PreferredReadReplica", "type": "int32", "versions": "11+", "ignorable": true,

"about": "The preferred read replica for the consumer to use on its next fetch request"},

{ "name": "Records", "type": "bytes", "versions": "0+", "nullableVersions": "0+",

"about": "The record data." }

]}

]}

]

} |

...

When a leader receives a FetchRequest, it must check the following:

- Check that the clusterId if not null matches the cached value in

meta.properties. - First First ensure that the leader epoch from the request is the same as the locally cached value. If not, reject this request with either the FENCED_LEADER_EPOCH or UNKNOWN_LEADER_EPOCH error.

- If the leader epoch is smaller, then eventually this leader's BeginQuorumEpoch would reach the voter and that voter would update the epoch.

- If the leader epoch is larger, then eventually the receiver would learn about the new epoch anyways. Actually this case should not happen since, unlike the normal partition replication protocol, leaders are always the first to discover that they have been elected.

- Check that the epoch on the

FetchOffset's FetchEpochareLastFetchedEpochis consistent with the leader's log. Specifically we check thatFetchOffsetis less than or equal to the end offset ofFetchEpoch. If not, return OUT_OF_RANGE and encode the nextFetchOffsetas the last offset of the . The leader assumes that theLastFetchedEpochis the epoch of the offset prior toFetchOffset. FetchOffsetis expected to be in the range of offsets with an epoch ofLastFetchedEpochor it is the start offset of the next epoch in the log. If not, return a empty response (no records included in the response) and set theDivergingEpochto the largest epoch which is less than or equal to the fetcher's epochLastFetchedEpoch. This is a heuristic of truncating an optimization to truncation to let the voter follower truncate as much as possible to get to the starting - divergence point with fewer FetchQuorumRecords Fetch round-trips: if . For example, If the fetcher's last epoch is X which does not match the epoch of that the offset prior to the fetching offset, then it means that all the records of with epoch X on that voter the follower may have diverged and hence could be truncated, then returning the next offset of largest epoch Y (< X) is reasonable. - If the request is from a voter not an observer, the leader can possibly advance the high-watermark. As stated above, we only advance the high-watermark if the current leader has replicated at least one entry to majority of quorum to its current epoch. Otherwise, the high watermark is set to the maximum offset which has been replicated to a majority of the voters.

The check in step 2 is similar to the logic that followers use today in Kafka through the OffsetsForLeaderEpoch API. In order to make this check efficient, Kafka maintains a leader-epoch-checkpoint file on disk, the contents of which is cached in memory. After every epoch change is observed, we write the epoch and its start offset to this file and update the cache. This allows us to efficiently check whether the leader's log has diverged from the follower and where the point of divergence is. Note that it may take multiple rounds of FetchQuorumRecords Fetch in order to find the first offset that diverges in the worst case.

...

- If the response contains FENCED_LEADER_EPOCH error code, check the

leaderIdfrom the response. If it is defined, then update thequorum-statefile and become a "follower" (may or may not have voting power) of that leader. Otherwise, try to learn about the new leader viaDiscoverBrokersrequests; in retry to theFetchrequest again against one of the remaining voters at random; in the mean time it may receive BeginQuorumEpoch request which would also update the new epoch / leader as well. - If the response contains OUT_OF_RANGE error code, truncate its local log to the encoded nextFetchOffset, and then resend the

FetchRecordrequesthas the fieldDivergingEpochset, then truncate from the log all of the offsets greater than or equal toDivergingEpoch.EndOffsetand truncate any offset with an epoch greater thanDivergingEpoch.EndOffset.

Note that followers will have to check any records received for the presence of control records. Specifically a follower/observer must check for voter assignment messages which could change its role.

Discussion:

...

Pull v.s. Push Model

In the original Raft paper, the push model is used for replicating states, where leaders actively send requests to followers and count quorum from returned acknowledgements to decide if an entry is committed.

In our implementation, we used a pull model for this purpose. More concretely:

- Consistency check: In the push model this is done at the replica's side, whereas in the pull model it has to be done at the leader. The voters / observers send the fetch request associated with its current log end offset. The leader will check its entry for offset, if matches the epoch the leader respond with a batch of entries (see below); if does not match, the broker responds to let the follower truncate all of its current epoch to the next entry of the leader’s previous epoch's end index (e.g. if the follower’s term is X, then return the next offset of term Y’s ending offset where Y is the largest term on leader that is < X). And upon receiving this response the voter / observer has to truncate its local log to that offset.

- Extra condition on commitment: In the push model the leader has to make sure it does not commit entries that are not from its current epoch, in the pull model the leader still has to obey this by making sure that the batch it sends in the fetch response contains at least one entry associated with its current epoch.

Based on the recursive deduction from the original paper, we can still conclude that the leader / replica log would never diverge in the middle of the log while the log end index / term still agrees.

Comparing this implementation with the original push based approach:

- On the Pro-side: with the pull model it is more natural to bootstrap a newly

There's one caveat of the pull-based model: say a new leader has been elected with a new epoch and everyone has learned about it except the old leader (e.g. that leader was not in the voters anymore and hence not receiving the BeginQuorumEpoch as well), then that old leader would not be notified by anyone about the new leader / epoch and become a pure "zombie leader".

To resolve this issue, we will piggy-back on the "quorum.fetch.timeout.ms" config, such that if the leader did not receive FetchQuorumRecords requests from a majority of the quorum for that amount of time, it would start sending Metadata request to random nodes in the cluster to understand the latest quorum. If it couldn't connect to any known voter, the old leader shall reset the connection information and send out DiscoverBrokers. And if the returned response includes a newer epoch leader, this zombie leader would step down and becomes an observer; and if it realized that it is still within the current quorum's voter list, it would start fetching from that leader. Note that the node will remain a leader until it finds that it has been supplanted by another voter.

Discussion: Pull v.s. Push Model

In the original Raft paper, the push model is used for replicating states, where leaders actively send requests to followers and count quorum from returned acknowledgements to decide if an entry is committed.

In our implementation, we used a pull model for this purpose. More concretely:

- Consistency check: In the push model this is done at the replica's side, whereas in the pull model it has to be done at the leader. The voters / observers send the fetch request associated with its current log end offset. The leader will check its entry for offset, if matches the epoch the leader respond with a batch of entries (see below); if does not match, the broker responds to let the follower truncate all of its current epoch to the next entry of the leader’s previous epoch's end index (e.g. if the follower’s term is X, then return the next offset of term Y’s ending offset where Y is the largest term on leader that is < X). And upon receiving this response the voter / observer has to truncate its local log to that offset.

- Extra condition on commitment: In the push model the leader has to make sure it does not commit entries that are not from its current epoch, in the pull model the leader still has to obey this by making sure that the batch it sends in the fetch response contains at least one entry associated with its current epoch.

Based on the recursive deduction from the original paper, we can still conclude that the leader / replica log would never diverge in the middle of the log while the log end index / term still agrees.

Comparing this implementation with the original push based approach:

- On the Pro-side: with the pull model it is more natural to bootstrap a newly added member with empty logs because we do not need to let the leader retry sending request with decrementing nextIndex, instead the follower just send fetch request with zero starting offset. Also the leader could simply reject fetch requests from old-configuration’s members who are no longer part of the group in the new configuration, and upon receiving the rejection the replica knows it should shutdown now — i.e. we automatically resolve the “disruptive servers” issue.

- On the Con-side: zombie leader step-down is more cumbersome, as with the push model, the leader can step down when it cannot successfully send heartbeat requests within a follower timeout, whereas with the pull model, the zombie leader does not have that communication pattern, and one alternative approach is that after it cannot commit any entries for some time, it should try to step down. Also, the pull model could introduce extra latency (in the worst case, a single fetch interval) to determine if an entry is committed, which is a problem especially when we want to update multiple metadata entries at a given time — this is pretty common in Kafka's use case — and hence we need to consider supporting batch writes efficiently in our implementation.

On Performance: There are also tradeoffs from a performance perspective between these two models. In order to commit a record, it must be replicated to a majority of nodes. This includes both propagating the record data from the leader to the follower and propagating the successful write of that record from the follower back to the leader. The push model potentially has an edge in latency because it allows for pipelining. The leader can continue sending new records to followers while it is awaiting the committing of previous records. In the proposal here, we do not pipeline because the leader relies on getting the next offset from the FetchQuorumRecords Fetch request. However, this is not a fundamental limitation. If the leader keeps track of the last sent offset, then we could instead let the FetchQuorumRecords Fetch request be pipelined so that it indicates the last acked offset and allows the leader to choose the next offset to send. Basically rather than letting the leader keep sending append requests to the follower as new data arrives, the follower would instead keep sending fetch requests as long as the acked offset is changing. Our experience with Kafka replication suggests that this is unlikely to be necessary, so we prefer the simplicity of the current approach, which also allows us to reuse more of the existing log layer in Kafka. However, we will evaluate the performance characteristics and make improvements as necessary. Note that although we are specifying the initial versions of the protocols in this KIP, there will almost certainly be additional revisions before this reaches production.

...

DescribeQuorum

The DiscoverBrokers API DescribeQuorum API is primarily used by new brokers to discover the connection of the current set of live brokers. When a broker first starts up, for example, it will send DiscoverBrokers requests to the bootstrap.servers. Once it discovers the current membership and its own state, then will it resume participation in elections. the crucial role of discovering connection information for existing nodes using a gossip approach. Brokers include their own connection information in the request and collect the connection information of the voters in the cluster from the response. Additionally, if one of the voters becomes unreachable at some point, then DiscoverBrokers can be used to find the new connection information (similar to how metadata refreshes are used in the client after a disconnect).the admin client to show the status of the quorum. This API must be sent to the leader, which is the only node that would have lag information for all of the voters.

Request Schema

| Code Block |

|---|

{

"apiKey": N,

"type": "request",

"name": "DiscoverBrokersRequestDescribeQuorumRequest",

"validVersions": "0",

"flexibleVersions": "0+",

"fields": [

{ { "name": "ReplicaIdTopics", "type": "int32[]TopicData",

"versions": "0+"},

{ "fields": [

{ "name": "BootTimestampTopicName", "type": "int64string", "versions": "0+"},

{ ", "entityType": "topicName",

"about": "The topic name." },

{ "name": "HostPartitions", "type": "string[]PartitionData",

"versions": "0+"},

{ "fields": [

{ "name": "PortPartitionIndex", "type": "int32", "versions": "0+"}

]

} |

The BootTimestamp field is defined when a broker starts up. We use this as a heuristic for finding the latest connection information for a given replica.

Response Schema

,

"about": "The partition index." }

]

}]

}

]

} |

Response Schema

| Code Block |

|---|

{

"apiKey": N,

"type": "response",

"name": "DiscoverBrokersResponseDescribeQuorumResponse",

"validVersions": "0",

"flexibleVersions": "0+",

"fields": [

{ { "name": "ErrorCode", "type": "int16", "versions": "0+"},

"about": "The top level {error code."},

{ "name": "ClusterIdTopics", "type": "string[]TopicData",

"versions": "0+"},

{ "fields": [

{ "name": "BrokersTopicName", "type": "[]Brokerstring", "versions": "0+",

"about "entityType": "The current voters "topicName",

"fieldsabout": [

{ "The topic name." },

{ "name": "VoterIdPartitions", "type": "int32[]PartitionData",

"versions": "0+"},

{ "fields": [

{ "name": "BootTimestampPartitionIndex", "type": "int64int32", "versions": "0+"},

"about": "The { partition index." },

{ "name": "HostErrorCode", "type": "stringint16", "versions": "0+"},

{ { "name": "PortLeaderId", "type": "int32", "versions": "0+"}

]

}

]

} |

Note that only the host and port information are provided. We assume that the security protocol will be configured with security.inter.broker.protocol. Additionally, the listener that is advertised through this API is the same one specified by inter.broker.listener.name.

DiscoverBrokers Request Handling

When a broker receives a DiscoverBrokers request, it will do the following:

- If the

ReplicaIdfrom the request is greater than 0 and matches one of the existing voters (or target voters), update local connection information for that node if either that node is not present in the cache or if theBootTimestampfrom the request is larger than the cached value. - Respond with the latest known leader and epoch along with the connection information of all known voters.

Note on AdminClient Usage: This API is also used by the admin client in order to describe/alter quorum state. In this case, we will use a sentinel -1 for ReplicaId. The values for the other fields in the request will not be inspected by the broker if the ReplicaId is less than 0, so we can choose reasonable sentinels for them as well (e.g. Host can be left as an empty string).

DiscoverBrokers Response Handling

After receiving a DiscoverBrokers response, the node will update its local cache of connection information with the values from the response. As when handling requests, the node will only update cached connection information corresponding to the largest observed BootTimestamp.

Note on Connection Discovery with Gossip: The reason this API is specified as a gossip protocol is that the cluster must be able to propagate connection information even when there are insufficient voters available to establish a quorum. There must be some base layer which is able to spread enough initial metadata so that voters can connect to each other and conduct elections. An alternative way to do this would be to require connection information always be defined statically in configuration, however this makes operations cumbersome in modern cloud environments where hosts change frequently. We have opted instead to embrace dynamic discovery.

Quorum Metadata

To discover the current membership, the broker will rely on Metadata RPC resume participation in elections. There is no actual change to the RPC for now, as we just need to find the single partition leader after the connection information discovery.

Quorum Bootstrapping

This section discusses the process for establishing the quorum when the cluster is first initialized and afterwards when nodes are restarted.

When first initialized, we rely on the quorum.voters configuration to define the expected voters. Brokers will only rely on this when starting up if there is no quorum state written to the log and if the broker fails to discover an existing quorum through the DiscoverBrokers API. We suggest that a --bootstrap flag could be passed through kafka-server-start.sh when the cluster is first started to add some additional safety. In that case, we would only use the config when the flag is specified and otherwise expect to discover the quorum dynamically.

Assuming the broker fails to discover an existing quorum, it will then check its broker.id to see if it is expected to be one of the initial voters. If so, then it will immediately include itself in DiscoverBrokers responses. Until the quorum is established, brokers will send DiscoverBrokers to the nodes in bootstrap.servers and other discovered voters in order to find the other expected members. Once enough brokers are known, the brokers will begin a vote to elect the first leader.

Note that the DiscoverBrokers API is intended to be a gossip API. Quorum members use this to advertise their connection information on bootstrapping and to find new quorum members. All brokers use the latest quorum state in response to DiscoverBrokers requests that they receive. The latest voter state is determined by looking at 1) the leader epoch, and 2) boot timestamps. Brokers only accept monotonically increasing updates to be cached locally. This approach is going to replace the current ZooKeeper ephemeral nodes based approach for broker (de-)registration procedure, where broker registration will de done by a new broker gossiping its host information via DiscoverBrokersRequest. As for broker de-registration, it will be done separately for voters and observers: for voters, we would first change the quorum to degrade the shutting down voters to observers of the quorum; and for observers, they can directly shuts down themselves after they've let the controller to move its hosted partitions to other hosts.

Bootstrapping Procedure

To summarize, this is the procedure that brokers will follow on initialization:

- Upon starting up, brokers always try to bootstrap its knowledge of the quorum by first reading the

quorum-statefile and then scanning forward fromAppliedOffsetto the end of the log to see if there are any changes to the quorum state. For newly started brokers, the log / file would all be empty so no previous knowledge can be restored. - If after step 1), there's some known quorum state along with a leader / epoch already, the broker would:

- Promote itself from observer to voter if it finds out that it's a voter for the epoch.

- Start sending

FetchQuorumRecordsrequest to the current leader it knows (it may not be the latest epoch's leader actually).

- Otherwise, it will try to learn the quorum state by sending

DiscoverBrokersto any other brokers inside the cluster viaboostrap.serversas the second option of quorum state discovery.- As long as a broker does not know all the current quorum voter's connections, it should continue periodically ask other brokers via

DiscoverBrokers.

- As long as a broker does not know all the current quorum voter's connections, it should continue periodically ask other brokers via

- Send out MetadataRequest to the discovered brokers to find the current metadata partition leader.

- As long as a broker does not know the current quorum (including the leader and the voters), it should continue periodically ask other brokersvia

Metadata.

- As long as a broker does not know the current quorum (including the leader and the voters), it should continue periodically ask other brokersvia

- If even step 3) 4) cannot find any quorum information – e.g. when there's no other brokers in the cluster, or there's a network partition preventing this broker to talk to others in the cluster – fallback to the third option of quorum state discover by checking if it is among the brokers listed in

quorum.voters.- If so, then it will promote to voter state and add its own connection information to the cached quorum state and return that in the

DiscoverBrokersresponses it answers to other brokers; otherwise stays in observer state. - In either case, it continues to try to send

DiscoverBrokersto all other brokers in the cluster viaboostrap.servers.

- If so, then it will promote to voter state and add its own connection information to the cached quorum state and return that in the

- For any voter, after it has learned a majority number of voters in the expected quorum from

DiscoverBrokersresponses, it will begin a vote.

When a leader has been elected for the first time in a cluster (i.e. if the leader's log is empty), the first thing it will do is append a VoterAssignmentMessage (described in more detail below) which will contain quorum.voters as the initial CurrentVoters. Once this message has been persisted in the log, then we no longer need `quorum.voters` and users can safely roll the cluster without this config.

ClusterId generation: Note that the first leader is also responsible for providing the ClusterId field which is part of the VoterAssignmentMessage. If the cluster is being migrated from Zookeeper, then we expect to reuse the existing clusterId. If the cluster is starting for the first time, then a new one will be generated -- in practice it is the user's responsibility to guarantee the newly generated clusterId is unique. Once this message has been committed to the log, the leader and all future leaders will strictly validate that this value matches the ClusterId provided in requests when receiving Vote, BeginEpoch, EndEpoch, and FetchQuorumRecords requests.

The leader will also append a LeaderChangeMessage as described in the VoteResponse handling above. This is not needed for correctness. It is just useful for debugging and to ensure that the high watermark always has an opportunity to advance after an election.

Bootstrapping Example

With this procedure in mind, a convenient way to initialize a cluster might be the following.

- Start with a single node configured with

broker.id=0andquorum.voters=0. - Start the node and verify quorum status. This would also be a good opportunity to make dynamic config changes or initialize security configurations.

- Add additional nodes to the cluster and verify that they can connect to the quorum.

- Finally use

AlterPartitionReassignmentsto grow the quorum to the intended size.

Quorum Reassignment

The protocol for changing the active voters is well-described in the Raft literature. The high-level idea is to use a new control record type to write quorum changes to the log. Once a quorum change has been written and committed to the log, then the quorum change can take effect.

This will be a new control record type with Type=2 in the key schema. Below we describe the message value schema used for the quorum change messages written to the log.

| Code Block |

|---|

{

"type": "message",

"name": "VoterAssignmentMessage",

"validVersions": "0",

"flexibleVersions": "0+",

"fields": [

{"name": "ClusterId", "type": "string", "versions": "0+"}

{"name": "CurrentVoters", "type": "[]Voter", "versions": "0+", "fields": [

{"name": "VoterId", "type": "int32", "versions": "0+"}

]},

{"name": "TargetVoters", "type": "[]Voter", "versions": "0+", "nullableVersions": "0+", "fields": [

{"name": "VoterId", "type": "int32", "versions": "0+"}

]}

]

} |

DescribeQuorum

The DescribeQuorum API is used by the admin client to show the status of the quorum. This includes showing the progress of a quorum reassignment and viewing the lag of followers and observers.

Unlike the DiscoverBrokers request, this API must be sent to the leader, which is the only node that would have lag information for all of the voters.

Request Schema

| Code Block |

|---|

{

"apiKey": 56,

"type": "request",

"name": "DescribeQuorumRequest",

"validVersions": "0",

"flexibleVersions": "0+",

"fields": [

{ "name": "Topics", "type": "[]DescribeQuorumTopicRequest",

"versions": "0+", "fields": [

{ "name": "TopicName", "type": "string", "versions": "0+", "entityType": "topicName",

"about": "The topic name." },

{ "name": "Partitions", "type": "[]DescribeQuorumPartitionRequest",

"versions": "0+", "fields": [

{ "name": "PartitionIndex", "type": "int32", "versions": "0+",

"about": "The partition index." }

]

}]

}

]

} |

Response Schema

| Code Block |

|---|

{

"apiKey": 56,

"type": "response",

"name": "DescribeQuorumResponse",

"validVersions": "0",

"flexibleVersions": "0+",

"fields": [

{ "name": "Topics", "type": "[]DescribeQuorumTopicResponse",

"versions": "0+", "fields": [

{ "name": "TopicName", "type": "string", "versions": "0+", "entityType": "topicName",

"about": "The topic name." },

{ "name": "Partitions", "type": "[]DescribeQuorumPartitionResponse",

"versions": "0+", "fields": [

{ "name": "PartitionIndex", "type": "int32", "versions": "0+",

"about": "The partition index." },

{"name": "ErrorCode", "type": "int16", "versions": "0+"},

{"name": "LeaderId", "type": "int32", "versions": "0+",

"about": "The ID of the current leader or -1 if the leader is unknown."},

{"name": "LeaderEpoch", "type": "int32", "versions": "0+",

"about": "The latest known leader epoch"},

{"name": "HighWatermark", "type": "int64", "versions": "0+"},

{"name": "CurrentVoters", "type": "[]ReplicaState", "versions": "0+" },

{"name": "TargetVoters", "type": "[]ReplicaState", "versions": "0+" },

{"name": "Observers", "type": "[]ReplicaState", "versions": "0+" }

]}

]}],

"commonStructs": [

{"name": "ReplicaState", "versions": "0+", "fields": [

{ "name": "ReplicaId", "type": "int32", "versions": "0+"},

{ "name": "LogEndOffset", "type": "int64", "versions": "0+",

"about": "The last known log end offset of the follower or -1 if it is unknown"},

{ "name": "LastCaughtUpTimeMs", "type": "int64", "versions": "0+",

"about": "The last time the replica was caught up to the high watermark"}

]}

]

} |

DescribeQuorum Request Handling

This request is always sent to the leader node. We expect AdminClient to use DiscoverBrokers and Metadata in order to discover the current leader. Upon receiving the request, a node will do the following:

- First check whether the node is the leader. If not, then return an error to let the client retry with

DiscoverBrokers. If the current leader is known to the receiving node, then include theLeaderIdandLeaderEpochin the response. - Build the response using current assignment information and cached state about replication progress.

DescribeQuorum Response Handling

On handling the response, the admin client would do the following:

- If the response indicates that the intended node is not the current leader, then check the response to see if the

LeaderIdhas been set. If so, then attempt to retry the request with the new leader. - If the current leader is not defined in the response (which could be the case if there is an election in progress), then backoff and retry with

DiscoverBrokers and Metadata. - Otherwise the response can be returned to the application, or the request eventually times out.

AlterPartitionReassignments

The AlterPartitionReassignments API was introduced in KIP-455 and is used by the admin client to change the replicas of a topic partition. We propose to reuse it for Raft voter reassignment as well.

The effect of an AlterPartitionReassignments is to change the TargetVoters field in the VoterAssignmentMessage defined above. Once this is done, the leader will begin the process of bringing the new nodes into the quorum and kicking out the nodes which are no longer needed.

Cancellation: If Replicas is set to null in the request, then effectively we will cancel an ongoing reassignment and leave the quorum with the current voters. The more preferable option is to always set the intended TargetVoters. Note that it is always possible to send a new AlterPartitionReassignments request even if the pending reassignment has not finished. So if we are in the middle of a reassignment from (1, 2, 3) to (4, 5, 6), then the user can cancel the reassignment by resubmitting (1, 2, 3) as the TargetVoters.

AlterPartitionReassignments Request Handling

This request must be sent to the leader (which is the controller in KIP-500). Upon receiving the AlterPartitionReassignments request, the node will verify a couple of things:

- If the target node is not the leader of the quorum, return NOT_LEADER_FOR_PARTITION code to the admin client.

- Check whether

TargetVotersin the request matches that from the latest assignment. If so, check whether it has been committed. If it has, then return immediately. Otherwise go to step 4. - If the

TargetVotersin the request does not match the currentTargetVoters, then append a newVoterAssignmentMessageto the log. - Return successfully only when the desired

TargetVotershas been safely committed to the log.

After returning the result of the request, the leader will begin the process to modify the quorum. This is described in more detail below, but basically the leader will begin tracking the fetch state of the target voters. It will then make a sequence of single-movement alterations by appending new VoterAssignmentMessage records to the log.

AlterPartitionReassignments Response Handling

The current response handling of this API in the AdminClient is sufficient for our purpose:

- If the response error indicates that the intended node is not the current leader, use the

MetadataAPI to find the latest leader and retry. - Otherwise return the successful response to the application.

Note that the AdminClient should retry the AlterPartitionReassignments request if it times out before the reassignment had been committed to the log. If the caller does not continue retrying the operation, then there is no guarantee about whether or not the reassignment had been successfully received by the cluster.

Once the reassignment has been accepted by the leader, then a user can monitor the status of the reassignment through the DescribeQuorum API.

Quorum Change Protocol

This protocol allows arbitrary quorum changes through the AlterPartitionReassignments API. Internally, we arrive at the target quorum by making a sequence of single-member changes.

Before getting into the details, we consider an example which summarizes the basic mechanics. Suppose that we start with a quorum consisting of the replicas (1, 2, 3) and we want to change the quorum to (4, 5, 6). When the leader receives the request to alter the quorum, it will write a message to the current quorum indicating the desired change:

| Code Block |

|---|

currentVoters: [1, 2, 3]

targetVoters: [4, 5, 6] |

Once this message has been committed to the quorum, the leader will add 4 to the current quorum by writing a new log entry:

| Code Block |

|---|

currentVoters: [1, 2, 3, 4]

targetVoters: [4, 5, 6] |

A common optimization for this step is to wait until 4 has caught up near the end of the log before adding it. Similarly, we may choose to add another replica first if it has already caught up.

Once the check passes, the leader will finally send out the control record to modify the existing voter list. Once that entry has been committed to the log, the new membership takes effect.

Then we could remove one of the existing replicas (say 3) by writing a new message:

| Code Block |

|---|

currentVoters: [1, 2, 4]

targetVoters: [4, 5, 6] |

Continuing in this way, eventually arrive at the following state:

| Code Block |

|---|

currentVoters: [1, 4, 5, 6]

targetVoters: [4, 5, 6] |

Suppose that replica 1 is the current leader. Before finalizing the reassignment, it will resign its leadership by sending EndQuorumEpoch to the other voters. Once the new leader takes over, it will complete the reassignment by removing replica 1 from the current voters. At this point, the quorum change has completed and we can clear the targetVoters field:

| Code Block |

|---|

currentVoters: [4, 5, 6]

targetVoters: null |

The previous leader will resume as a voter until it knows that it is no longer one of them. The leader will always choose to remove itself last as long as progress can still be made.

To summarize the protocol details:

- Quorum changes are executed through a series of single-member changes.

- The

BeginQuorumEpochAPI is used to notify new voters of their new status. - The

EndQuorumEpochAPI is used by leaders when they need to remove themselves from the quorum.

Reassignment Algorithm

Upon receiving an AlterPartitionReassignments request, the leader will do the following:

- Append an

VoterAssignmentMessageto the log with the current voters asCurrentVotersand theReplicasfrom theAlterPartitionReassignmentsrequest as theTargetVoters. - Leader will compute 3 sets based on

CurrentVotersandTargetVoters:RemovingVoters: voters to be removedRetainedVoters: voters shared between current and targetNewVoters: voters to be added

- Based on comparison between

size(NewVoters)andsize(RemovingVoters),- If

size(NewVoters)>=size(RemovingVoters), pick one ofNewVotersasNVby writing a record withCurrentVoters=CurrentVoters + NV, andTargetVoters=TargetVoters. - else pick one of

RemovingVotersasRV, preferably a non-leader voter, by writing a record withCurrentVoters=CurrentVoters - RV, andTargetVoters=TargetVoters.

- If

- Once the record is committed, the membership change is safe to be applied. Note that followers will begin acting with the new voter information as soon as the log entry has been appended. They do not wait for it to be committed.

- As there is a potential delay for propagating the removal message to the removing voter, we piggy-back on the `FetchQuorumRecords` to inform the voter to downgrade immediately after the new membership gets committed. See the error code NOT_FOLLOWER.

- The leader will continue this process until one of the following scenarios happens:

- If

TargetVoters = CurrentVoters, then the reassignment is done. The leader will append a new entry withTargetVoters=nullto the log. - If the leader is the last remaining node in

RemovingVoters, then it will step down by sendingEndQuorumEpochto the current voters. It will continue as a voter until the next leader removes it from the quorum.

- If

Note that there is one main subtlety with this process. When a follower receives the new quorum state, it immediately begins acting with the new state in mind. Specifically, if the follower becomes a candidate, it will expect votes from a majority of the new voters specified by the reassignment. However, it is possible that the VoterAssignmentMessage gets truncated from the follower's log because a newly elected leader did not have it in its log. In this case, the follower needs to be able to revert to the previous quorum state. To make this simple, voters will only persist quorum state changes in quorum-state after they have been committed. Upon initialization, any uncommitted state changes will be found by scanning forward from the LastOffset indicated in the quorum-state.

In a similar vein, if the VoterAssignmentMessage fails to be copied to all voters before a leader failure, then there could be temporary disagreement about voter membership. Each voter must act on the information they have in their own log when deciding whether to grant votes. It is possible in this case for a voter to receive a request from a non-voter (according to its own information). Voters must reject votes from non-voters, but that does not mean that the non-voter cannot ultimately win the election. Hence when a voter receives a VoteRequest from a non-voter, it must then become a candidate.

Observer Promotion

To ensure no downtime of the cluster switch, newly added nodes should already be acting as an up-to-date observer to avoid unnecessary harm to the cluster availability. There are two approaches to achieve this goal, either from leader side or from observer side:

Leader based approach: leader is responsible for tracking the observer progress by monitoring the new entry replication speed, and promote the observer when one replication round is less than election timeout, which suggests the gap is sufficiently small. This idea adds burden to leader logic complexity and overhead for leadership transfer, but is more centralized progress management.

Observer based approach: a self-nomination approach through observer initiates readIndex call to make sure it is sufficiently catching up with the leader. If we see consecutive rounds of readIndex success within election timeout, the observer will trigger a config change to add itself as a follower on next round. This is a de-centralized design which saves the dependency on elected leader to decide the role, thus easier to reason about.

To be effectively collecting information from one place and have the membership change logic centralized, leader based approach is more favorable. However, tracking the progress of an observer should only happen during reassignment, which is also the reason why we may see incomplete log status from DescribeQuorum API when the cluster is stable.

Reassignment Admin Tools

In order to support the DescribeQuorum API, we will add the following APIs to the AdminClient:

| Code Block | ||||

|---|---|---|---|---|

| ||||

public QuorumInfo describeQuorum(Set<TopicPartition> topicPartitions, DescribeQuorumOptions options); |

The recommended usage is to first use the describeQuorum API to get the existing cluster status, learning whether there is any ongoing reassignment. The QuorumInfo struct is deducted from the DescribeQuorumResponse:

| Code Block | ||||

|---|---|---|---|---|

| ||||

public clase QuorumInfo {

Map<Integer, VoterDescription> currentVoters();

Map<Integer, VoterDescription> targetVoters();

Map<Integer, VoterDescription> observers();

Optional<Integer> leaderId();

int leaderEpoch();

long highWatermark();

public static class VoterDescription {

Optional<Long> logEndOffset();

Optional<Long> lastCaughtUpTimeMs();

}

} |

The epoch and offsets are set as optional because the leader may not actively maintain all the observers' status.

Tooling Support

We will add a new utility called kafka-metadata-quorum.sh to describe and alter quorum state. As usual, this tool will require --bootstrap-server to be provided. We will support the following options:

Describing Current Status

There will be two options available with --describe:

--describe status: a short summary of the quorum status and the other provides detailed information about the status of replication.--describereplication: provides detailed information about the status of replication

Here are a couple examples:

| Code Block |

|---|

> bin/kafka-metadata-quorum.sh --describe

LeaderId: 0

LeaderEpoch: 15

HighWatermark: 234130

MaxFollowerLag: 34

MaxFollowerLagTimeMs: 15

CurrentVoters: [0, 1, 2]

TargetVoters: [0, 3, 4]

> bin/kafka-metadata-quorum.sh --describe replication

ReplicaId LogEndOffset Lag LagTimeMs Status IsReassignTarget

0 234134 0 0 Leader Yes

1 234130 4 10 Follower No

2 234100 34 15 Follower No

3 234124 10 12 Observer Yes

4 234130 4 15 Observer Yes |

Altering Voters

We intend to use the current kafka-reassign-partition.sh tool in order to make assignment changes to the metadata partition.

Metrics

Here’s a list of proposed metrics for this new protocol:

...

NAME

...

TAGS

...

TYPE

...

NOTE

...

CurrentLeader

...

type=raft-manager

...