...

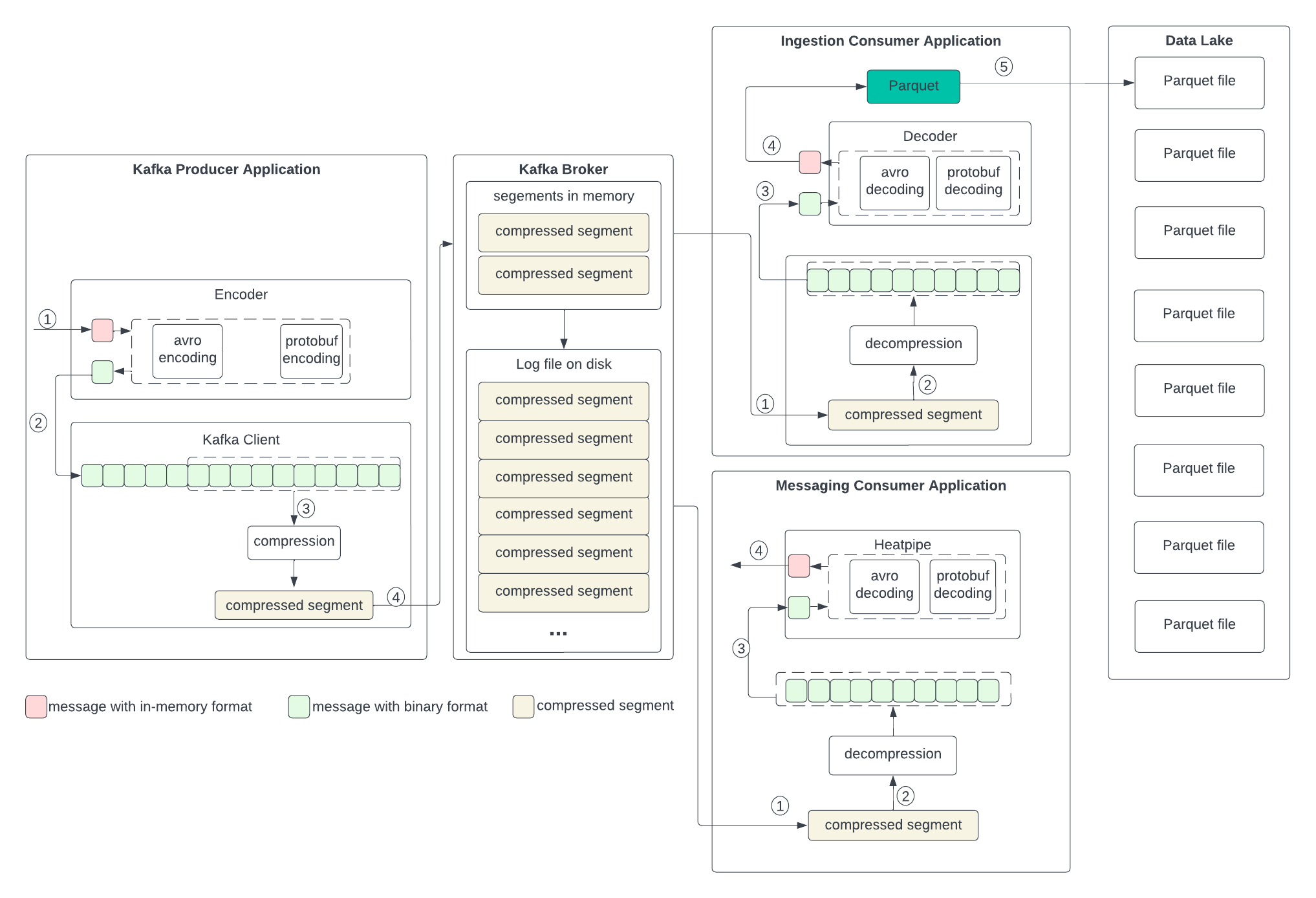

To set the context for the changes discussed in the next section, let’s examine the current data formats in the producer, broker, and consumer, along with the transformation process outlined in the following diagram. We don’t anticipate changes to the broker, so we skip its format.

Producer

The producer writes the in-memory data structures to an encoder to serialize them to binary and then sends them to the Kafka client.
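As a rough illustration of this encode-then-send flow, here is a minimal Python sketch. It is not the actual producer code: `StubKafkaClient`, `encode`, and `produce` are hypothetical names, and JSON merely stands in for whatever binary encoder the producer really uses.

```python
import json


class StubKafkaClient:
    """Hypothetical stand-in for a Kafka client; it only records what is sent."""

    def __init__(self):
        self.sent = []

    def send(self, topic, value):
        # A real client would batch the bytes and ship them to the broker.
        self.sent.append((topic, value))


def encode(record):
    # Assumption: JSON stands in for the producer's binary encoder.
    return json.dumps(record, sort_keys=True).encode("utf-8")


def produce(client, topic, records):
    # Serialize each in-memory record first, then hand the bytes to the client.
    for record in records:
        client.send(topic, encode(record))


client = StubKafkaClient()
records = [{"id": 1, "city": "SF"}, {"id": 2, "city": "NYC"}]
produce(client, "trips", records)
```

The point of the sketch is the ordering: serialization happens in the producer, one record at a time, before the client ever sees the data.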

...

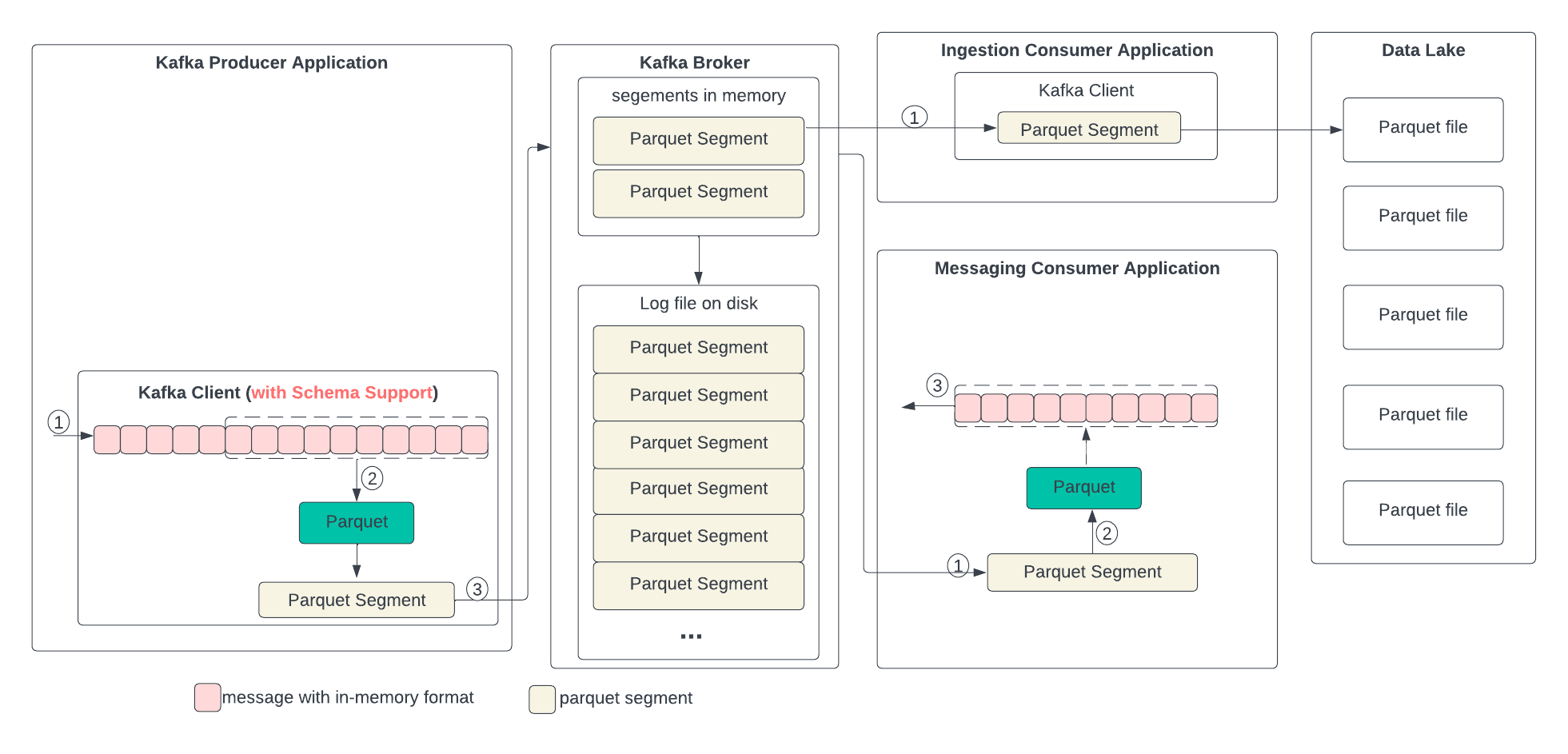

In the following diagram, we describe the proposed data format changes at each stage and the transformation process. In short, we propose replacing compression with Parquet, which combines encoding and compression at the segment level. This simplifies the ingestion consumer: it only needs to write the Parquet segment into the data lake.

Producer

The producer writes the in-memory data structures directly to the Kafka client, and encoding and compression happen together in a single step.
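To make the idea of encoding and compressing a whole segment at once more concrete, here is a toy Python sketch. It does not use Parquet: a column-wise JSON layout compressed with `zlib` stands in for it, and `SegmentEncodingClient` and `decode_segment` are hypothetical names. It also assumes that buffering and encoding live inside the client and that every record in a segment has the same fields.

```python
import json
import zlib


class SegmentEncodingClient:
    """Hypothetical client that buffers raw records and encodes them per segment."""

    def __init__(self):
        self.buffer = []
        self.segments = []

    def send(self, record):
        # The producer hands over the in-memory record; no encoder runs here.
        self.buffer.append(record)

    def flush(self):
        if not self.buffer:
            return
        # Pivot rows into columns so similar values sit next to each other.
        columns = {
            name: [row[name] for row in self.buffer] for name in self.buffer[0]
        }
        # Encode and compress each column in one step for the whole segment.
        segment = {
            name: zlib.compress(json.dumps(values).encode("utf-8"))
            for name, values in columns.items()
        }
        self.segments.append(segment)
        self.buffer = []


def decode_segment(segment):
    # Reverse the toy encoding: decompress each column and parse its values.
    return {name: json.loads(zlib.decompress(blob)) for name, blob in segment.items()}


client = SegmentEncodingClient()
for record in [{"id": 1, "city": "SF"}, {"id": 2, "city": "NYC"}, {"id": 3, "city": "SF"}]:
    client.send(record)
client.flush()
```

Because the segment is already in its final encoded form, a downstream consumer in this sketch would have nothing to transform; it could store `client.segments[0]` as-is.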

...