Status

Current state: Under discussion

Discussion thread:

JIRA:

Please keep the discussion on the mailing list rather than commenting on the wiki (wiki discussions get unwieldy fast).

Motivation

Exactly once semantics (EOS) provides transactional message processing guarantees. Producers can write to multiple partitions atomically so that either all writes succeed or all writes fail. This can be used in the context of stream processing frameworks, such as Kafka Streams, to ensure exactly once processing between topics.

In Kafka EOS, we use the concept of a "transactional Id" in order to preserve exactly once processing guarantees across process failures and restarts. Essentially this allows us to guarantee that for a given transactional Id, there can only be one producer instance that is active and permitted to make progress at any time. Zombie producers are fenced by an epoch which is associated with each transactional Id. We can also guarantee that upon initialization, any transactions which were still in progress are completed before we begin processing. This is the point of the initTransactions() API.

The problem we are trying to solve in this proposal is a semantic mismatch between consumers in a group and transactional producers. In a consumer group, ownership of partitions can transfer between group members through the rebalance protocol. For transactional producers, assignments are assumed to be static. Every transactional id must map to a consistent set of input partitions. To preserve the static partition mapping in a consumer group where assignments are frequently changing, the simplest solution is to create a separate producer for every input partition. This is what Kafka Streams does today.

This architecture does not scale well as the number of input partitions increases. Every producer come with separate memory buffers, a separate thread, separate network connections. This limits the performance of the producer since we cannot effectively use the output of multiple tasks to improve batching. It also causes unneeded load on brokers since there are more concurrent transactions and more redundant metadata management.

Proposed Changes

We argue that the root of the problem is that transaction coordinators have no knowledge of consumer group semantics. They simply do not understand that partitions can be moved between processes. Let's take a look at a sample exactly-once use case, which is quoted from KIP-98:

public class KafkaTransactionsExample {

public static void main(String args[]) {

KafkaConsumer<String, String> consumer = new KafkaConsumer<>(consumerConfig);

KafkaProducer<String, String> producer = new KafkaProducer<>(producerConfig);

producer.initTransactions();

while(true) {

ConsumerRecords<String, String> records = consumer.poll(CONSUMER_POLL_TIMEOUT);

if (!records.isEmpty()) {

producer.beginTransaction();

List<ProducerRecord<String, String>> outputRecords = processRecords(records);

for (ProducerRecord<String, String> outputRecord : outputRecords) {

producer.send(outputRecord);

}

sendOffsetsResult = producer.sendOffsetsToTransaction(getUncommittedOffsets());

producer.endTransaction();

}

}

}

}

Currently transaction coordinator uses the initTransactions API currently in order to fence producers using the same transactional Id and to ensure that previous transactions have been completed. We propose to switch this guarantee on group coordinator instead.

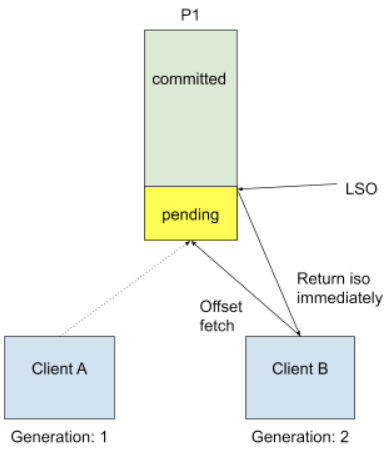

In the above template, we call consumer.poll() to get data, but internally for the very first time we start doing so, we need to know the input topic offset. This is done by a FetchOffset call to group coordinator. With transactional processing, there could be offsets that are "pending", I.E they are part of some ongoing transaction. Upon receiving FetchOffset request, broker will export offset position to the "latest stable offset" (LSO), which is the largest offset that has already been committed. Since we rely on unique transactional.id to revoke stale transaction, we believe any pending transaction will be aborted when producer calls initTransaction again. During normal use case such as Kafka Streams, we will also explicitly close producer to send out a EndTransaction request to make sure we start from clean state.

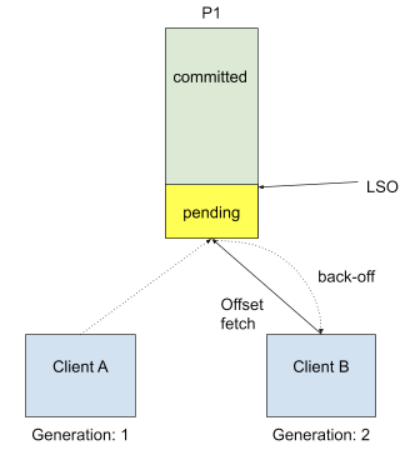

It is no longer safe to do so when we allow topic partitions to move around transactional producers, since transactional coordinator doesn't know about partition assignment and producer won't call initTransaction again during its lifecycle. The proposed solution is to reject FetchOffset request by sending out PendingTransactionException to new client when there is pending transactional offset commits, so that old transaction will eventually expire due to transaction.timeout, and txn coordinator will take care of writing abort markers and failure records, etc. Since it would be an unknown exception for old consumers, we will choose to send a COORDINATOR_LOAD_IN_PROGRESS exception to let it retry. When client receives PendingTransactionException, it will back-off and retry getting input offset until all the pending transaction offsets are cleared. This is a trade-off between availability and correctness, and in this case the worst case for availability is just waiting transaction timeout.

Below is the new approach we introduce here.

Note that the current default transaction.timeout is set to one minute, which is too long for Kafka Streams EOS use cases. It is because the default commit interval was set to 100 ms, and we would first hit exception if we don't actively commit offsets during that tight window. So we suggest to shrink the transaction timeout to be the same default value as session timeout, to reduce the potential performance loss for offset fetch delay.

Public Interfaces

The main addition of this KIP is a new variant of the current initTransactions API which gives us access to the consumer group states, such as member.id and generation.id.

interface Producer {

/**

* This API shall be called for consumer group aware transactional producers.

*/

void initTransactions(Consumer<byte[], byte[]> consumer);

/**

* No longer need to pass in the consumer group id in a case where we already get access to the consumer state.

*/

void sendOffsetsToTransaction(Map<TopicPartition, OffsetAndMetadata> offsets);

}

Here we introduced an intermediate data structure `GroupAssignment` just to make the evolvement easier in case we need to add more identification info during transaction init stage. There are two main differences in the behavior of this API and the pre-existing `initTransactions`:

- The first is that it is safe to call this API multiple times. In fact, it is required to be invoked after every consumer group rebalance or dynamic assignment.

- The second is that it is safe to call after receiving a `ProducerFencedException`. If a producer is fenced, all that is needed is to rejoin the associated consumer group and call this new `initTransactions` API.

The new thread producer API will highly couple with consumer group. We choose to define a new producer config `transactional.group.id` to pass in consumer group id:

public static final String TRANSACTIONAL_GROUP_ID = "transactional.group.id";

And attempt to deprecate sendOffset API which is using consumer group id:

void sendOffsetsToTransaction(Map<TopicPartition, OffsetAndMetadata> offsets); // NEW

We could effectively unset the `transactional.id` config because we no longer use it for revoking ongoing transactions. Instead we would stick to consumer group id when we rely on group membership. To enable this, we need two protocol changes. First we need to update the FindCoordinator API to support lookup of the transaction coordinator using the consumer group Id. Second, we need to extend the InitProducerId API to support consumer group aware initialization.

The schema for FindCoordinator does not need to change, but we need to add a new coordinator type

FindCoordinatorRequest => CoordinatorKey CoordinatorType CoordinatorKey => STRING CoordinatorType => INT8 // 0 -> Consumer group coordinator, 1 -> Transaction coordinator, 2 -> Transaction "group" coordinator

Below we provide the new InitProducerId schema:

InitProducerIdRequest => TransactionalId TransactionTimeoutMs ConsumerGroupId AssignedPartitions TransactionalId => NullableString TransactionTimeoutMs => Int64 TransactionalGroupId => NullableString // NEW InitProducerIdResponse => ThrottleTimeMs ErrorCode ProducerId ProducerEpoch ThrottleTimeMs => Int64 ErrorCode => Int16 ProducerId => Int64 ProducerEpoch => Int16

The new InitProducerId API accepts either a user-configured transactional Id or a consumer group Id and a generation id. When a consumer group is provided, the transaction coordinator will honor consumer group id and allocate a new producer.id every time initTransaction is called.

Fencing zombie

A zombie process may invoke InitProducerId after falling out of the consumer group. In order to distinguish zombie requests, we include the consumer group generation. Once the coordinator observes a generation bump for a group, it will refuse to handle requests from the previous generation. The only thing other group members can do is call InitProducerId themselves. This in fact would be the common case since transactions will usually be completed before a consumer joins a rebalance.

In order to pass the group generationId to `initTransaction`, we need to expose it from the consumer. We propose to add a new function call on consumer to expose the generation info:

public Generation generation();

With this proposal, the transactional id is no longer needed for proper fencing, but the coordinator still needs a way to identify producers individually as they are executing new transactions. There are two options: continue using the transactional id or use the producer id which is generated by the coordinator in InitProducerId. Either way, the main challenge is authorization. We currently use the transaction Id to authorize transactional operations. In this KIP, we could instead utilize the consumer group id for authorization. The producer must still provide a transactional Id if it is working on standalone mode though.

We also need to change the on-disk format for transaction state in order to persist both the consumer group id and the assigned partitions. We propose to use a separate record type in order to store the group assignment. Transaction state records will not change.

Key => GroupId TransactionalId

GroupId => String

TransactionalId => String

Value => GenerationId AssignedPartitions

GenerationId => Int32

AssignedPartitions => [Topic [Partition]]

Topic => String

Partition => Int32

To fence an old producer accessing group, we will return ILLEGAL_GENERATION exception. A new generation id field shall be added to the `TxnOffsetCommitRequest` request:

TxnOffsetCommitRequest => TransactionalId GroupId ProducerId ProducerEpoch Offsets GenerationId TransactionalId => String GroupId => String ProducerId => int64 ProducerEpoch => int16 Offsets => Map<TopicPartition, CommittedOffset> GenerationId => int32 // NEW

To be able to upgrade Kafka Streams application to leverage this new feature, a new config shall be introduced to control the producer upgrade decision:

public static boolean CONSUMER_GROUP_AWARE_TRANSACTION = "consumer.group.aware.transaction"; // default to true

When set to true and exactly-once is turned on, Kafka Streams application will choose to use single producer per thread.

Fencing for upgrades

And to avoid concurrent processing due to upgrade, we also want to introduce an exception to let consumer back off:

PENDING_TRANSACTION(86, "There are pending transactions that need to be cleared before proceeding.", PendingTransactionException::new),

Will discuss in more details in Compatibility section.

Example

Below we provide an example of a simple read-process-write loop with consumer group-aware EOS processing.

String consumerGroupId = "group";

Set<String> topics = buildSubscription();

KafkaConsumer consumer = new KafkaConsumer(buildConsumerConfig(groupId));

KafkaProducer producer = new KafkaProducer(buildProducerConfig(groupId)); // passing in consumer group id as transactional.group.id

producer.initTransactions(new GroupAssignment());

Generation generation = consumer.generation();

consumer.subscribe(topics, new ConsumerRebalanceListener() {

void onPartitionsAssigned(Collection<TopicPartition> partitions) {

generation = consumer.generation();

}

});

while (true) {

// Read some records from the consumer and collect the offsets to commit

ConsumerRecords consumed = consumer.poll(Duration.ofMillis(5000));

Map<TopicPartition, OffsetAndMetadata> consumedOffsets = offsets(consumed);

// Do some processing and build the records we want to produce

List<ProducerRecord> processed = process(consumed);

// Write the records and commit offsets under a single transaction

producer.beginTransaction();

for (ProducerRecord record : processed)

producer.send(record);

try {

if (generation == null) {

throw new IllegalStateException("unexpected consumer state");

}

producer.sendOffsetsToTransaction(consumedOffsets, generation.generationId);

} catch (IllegalGenerationException e) {

throw e; // fail the zombie member if generation doesn't match

}

producer.commitTransaction();

}

The main points are the following:

- Consumer group id becomes a config value on producer.

- Generation.id will be used for group coordinator fencing.

- We no longer need to close the producer after a rebalance.

Compatibility, Deprecation, and Migration Plan

This is a server-client integrated change, and it's required to upgrade the broker first with `inter.broker.protocol.version` to the latest. Any produce request with higher version will automatically get fenced because of no support. If this is the case on a Kafka Streams application, you will be recommended to unset `CONSUMER_GROUP_AWARE_TRANSACTION` config as necessary to just upgrade the client without using new thread producer.

We need to ensure 100% correctness during upgrade. This means no input data should be processed twice, even though we couldn't distinguish the client by transactional id anymore. The solution is to reject consume offset request by sending out PendingTransactionException to new client when there is pending transactional offset commits, so that new client shall start from a clean state instead of relying on transactional id fencing. Since it would be an unknown exception for old consumers, we will choose to send a COORDINATOR_LOAD_IN_PROGRESS exception to let it retry. When client receives PendingTransactionException, it will back-off and retry getting input offset until all the pending transaction offsets are cleared. This is a trade-off between availability and correctness, and in this case the worst case for availability is just waiting transaction timeout for one minute which should be trivial one-time cost during upgrade only.

Rejected Alternatives

- Producer Pooling:

- Producer support multiple transactional ids:

- Tricky rebalance synchronization:

- We could use admin client to fetch the inter.broker.protocol on start to choose which type of producer they want to use. This approach however is harder than we expected, because brokers maybe on the different versions and if we need user to handle the tricky behavior during upgrade, it would actually be unfavorable. So a hard-coded config is a better option we have at hand.

- We have considered to leverage transaction coordinator to remember the assignment info for each transactional producer, however this means we are copying the state data into 2 separate locations and could go out of sync easily. We choose to still use group coordinator to do the generation and partition fencing in this case.