...

The tick size is 1ms and the wheel size is 20. The timeout was set to 200ms. The data size of a request was 100 bytes. For the low timeout rate case, we chose 75th percentile = 60ms and 50th percentile = 20ms. For the high timeout rate case, we chose 75th percentile = 400ms and 50th percentile = 200ms.
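To make these parameters concrete, the following sketch works out how much time each level of a hierarchical timing wheel would cover with a 1ms tick and a wheel size of 20, and which level a 200ms timeout would land in. It assumes every level uses the same wheel size and that the tick of each level equals the full span of the level below it. The class and method names (`WheelLevels`, `spanMs`, `levelFor`) are hypothetical and are not taken from the benchmark code.

```java
final class WheelLevels {
    /** Time span in ms covered by the wheel at a 1-based level: tickMs * wheelSize^level. */
    static long spanMs(long tickMs, int wheelSize, int level) {
        long span = tickMs;
        for (int i = 0; i < level; i++) {
            span *= wheelSize;
        }
        return span;
    }

    /** Lowest 1-based level whose span is strictly larger than the delay. */
    static int levelFor(long delayMs, long tickMs, int wheelSize) {
        int level = 1;
        while (delayMs >= spanMs(tickMs, wheelSize, level)) {
            level++;
        }
        return level;
    }

    public static void main(String[] args) {
        long tickMs = 1;     // tick size from the benchmark setup
        int wheelSize = 20;  // wheel size from the benchmark setup
        for (int level = 1; level <= 3; level++) {
            System.out.println("level " + level + " covers "
                    + spanMs(tickMs, wheelSize, level) + " ms");
        }
        System.out.println("a 200 ms timeout starts at level "
                + levelFor(200, tickMs, wheelSize));
    }
}
```

Under these assumptions the first level covers 20ms and the second covers 400ms, so a 200ms timeout would start in a second-level bucket of 20ms width.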

Requests are actively completed by a separate thread. Requests that are supposed to complete before the timeout are enqueued to another DelayQueue, and a separate thread keeps polling that queue and completing them. The actual completion time is not guaranteed to be accurate.
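The completion mechanism described above could look roughly like the sketch below: each request that should finish before its timeout is wrapped in a `Delayed` element, and a dedicated thread polls a `java.util.concurrent.DelayQueue` and completes whatever has become due. This is only an illustration under assumptions, not the benchmark code. `DelayedCompleter`, `Request`, and `Scheduled` are hypothetical names, and the 100ms poll interval is arbitrary.

```java
import java.util.concurrent.DelayQueue;
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;

final class DelayedCompleter {
    /** Hypothetical request that can be completed at most once. */
    static final class Request {
        private final AtomicBoolean completed = new AtomicBoolean(false);
        boolean complete() { return completed.compareAndSet(false, true); }
        boolean isCompleted() { return completed.get(); }
    }

    /** Queue element that becomes available once its due time has passed. */
    private static final class Scheduled implements Delayed {
        final Request request;
        final long dueNanos;

        Scheduled(Request request, long delayMs) {
            this.request = request;
            this.dueNanos = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(delayMs);
        }

        @Override
        public long getDelay(TimeUnit unit) {
            return unit.convert(dueNanos - System.nanoTime(), TimeUnit.NANOSECONDS);
        }

        @Override
        public int compareTo(Delayed other) {
            return Long.compare(getDelay(TimeUnit.NANOSECONDS),
                                other.getDelay(TimeUnit.NANOSECONDS));
        }
    }

    private final DelayQueue<Scheduled> queue = new DelayQueue<>();
    private final Thread worker = new Thread(this::run, "request-completer");

    void start() {
        worker.setDaemon(true);
        worker.start();
    }

    void stop() {
        worker.interrupt();
    }

    /** Ask for the request to be completed roughly delayMs from now. */
    void schedule(Request request, long delayMs) {
        queue.add(new Scheduled(request, delayMs));
    }

    private void run() {
        try {
            while (!Thread.currentThread().isInterrupted()) {
                // poll returns null if nothing became due within the wait time
                Scheduled due = queue.poll(100, TimeUnit.MILLISECONDS);
                if (due != null) {
                    due.request.complete();
                }
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // stop() was called
        }
    }
}
```

As in the benchmark, the completion time is only approximate: an element becomes available no earlier than its due time, but the worker may pick it up somewhat later.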

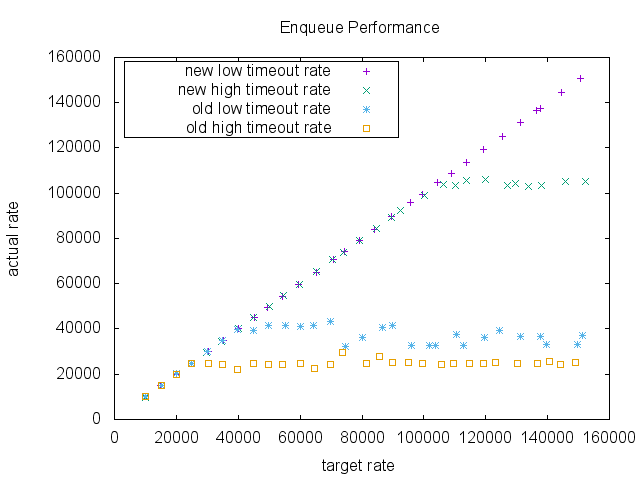

The result shows a dramatic difference in the high enqueue rate region. As the target rate increases, both implementations initially keep up with the requests. However, in the low timeout scenario the old implementation saturated at around 40000 RPS (requests per second), whereas the proposed implementation did not show any significant performance degradation. In the high timeout scenario the old implementation saturated at around 25000 RPS, whereas the proposed implementation saturated at around 105000 RPS in this benchmark.

Another thing worth noting is that the old implementation degraded when timeouts happened more often. This may be because it has to purge the delay queue and watch lists more often. The proposed implementation shows a slight degradation only when the enqueue rate is very high.

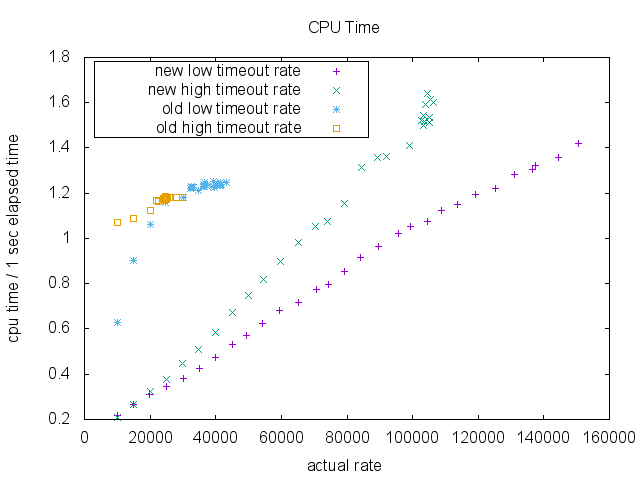

CPU usage is significantly lower in the new implementation.

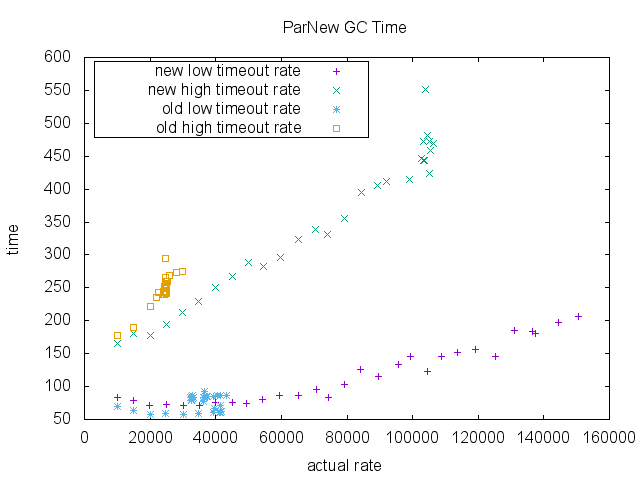

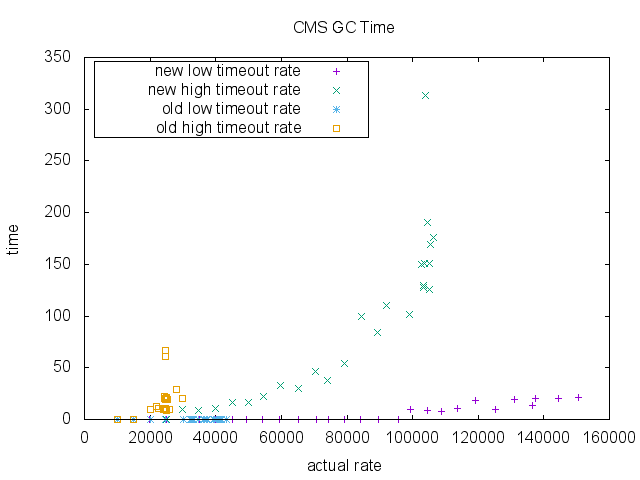

Finally, we measured GC time for ParNew collection and CMS collection. There isn't much difference between the old implementation and the new implementation in the range of enqueue rates that the old implementation can sustain.

Summary

In the new design, we use Hierarchical Timing Wheels for the timeout timer, a WeakReference-based list for watcher lists, and a DelayQueue of timer buckets to advance the clock on demand. The advantage is that a completed request is removed from the timer queue immediately and becomes GCable. When a request is completed, we immediately remove it from its timer bucket at O(1) cost. The strong reference to the request is cleared at this point. All remaining references are weak references from watcher lists, so completed requests become GCable right away. The buckets remain in the delay queue. However, the number of buckets is bounded, and in a healthy system most requests are satisfied before they time out, so many of the buckets become empty before being pulled out of the delay queue. Also, the timer should rarely have the buckets of the lower interval. We expect an improvement in GC behavior and lower CPU usage.
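The O(1) removal mentioned above is typically achieved by keeping each bucket as a doubly-linked list and letting every entry remember its own neighbours. The following is a minimal, single-threaded sketch of that idea, not the proposed implementation itself. `TimerBucket` and `Entry` are hypothetical names, and a real timer would also need synchronization.

```java
final class TimerBucket {
    /** List node that holds the only strong reference from the timer to a request. */
    static final class Entry {
        Object request;      // cleared on removal so the request can be collected
        Entry prev;
        Entry next;
        TimerBucket bucket;  // owning bucket, or null when not in any bucket

        Entry(Object request) {
            this.request = request;
        }
    }

    // Sentinel node: root.next is the first entry, root.prev is the last one.
    private final Entry root = new Entry(null);
    private int size;

    TimerBucket() {
        root.prev = root;
        root.next = root;
    }

    /** Append an entry at the tail of the bucket. */
    void add(Entry e) {
        e.bucket = this;
        e.prev = root.prev;
        e.next = root;
        root.prev.next = e;
        root.prev = e;
        size++;
    }

    /** Unlink an entry in constant time; no traversal of the bucket is needed. */
    boolean remove(Entry e) {
        if (e.bucket != this) {
            return false; // already removed, or owned by another bucket
        }
        e.prev.next = e.next;
        e.next.prev = e.prev;
        e.prev = null;
        e.next = null;
        e.bucket = null;
        e.request = null; // drop the strong reference to the request
        size--;
        return true;
    }

    int size() {
        return size;
    }

    boolean isEmpty() {
        return size == 0;
    }
}
```

Once `remove` has run, the bucket no longer holds the request, so only the weak references from the watcher lists remain. An emptied bucket can stay in the delay queue until its turn comes at little cost.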

...