This document describes how to release Apache Kafka from trunk.

It is a work in progress and should be refined by the Release Manager (RM) as they come across aspects of the release process not yet documented here.

NOTE: For the purpose of illustration, this document assumes that the version being released is 0.10.0.0 and the following development version will become 0.10.1.0.

Prerequisites

- Prepare a release plan in the wiki, notifying the community of the overall plan and goals for the release (for example: Release Plan 0.10.0).

Go over JIRA for the release and make sure that blockers are marked as blockers and non-blockers are non-blockers. This JIRA filter may be handy:

project = KAFKA AND fixVersion = 0.10.0.0 AND resolution = Unresolved AND priority = blocker ORDER BY due ASC, priority DESC, created ASC
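The filter above is tied to one version, so it can help to generate it per release. The following is a minimal Python sketch; `blocker_filter` is a hypothetical helper name and not part of the Kafka release tooling. It only builds the query string shown above.

```python
def blocker_filter(fix_version: str) -> str:
    """Build the JQL query listing unresolved blockers for one fix version."""
    return (
        "project = KAFKA "
        f"AND fixVersion = {fix_version} "
        "AND resolution = Unresolved "
        "AND priority = blocker "
        "ORDER BY due ASC, priority DESC, created ASC"
    )


if __name__ == "__main__":
    # Paste the printed query into the JIRA issue search.
    print(blocker_filter("0.10.0.0"))
```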

- Send an email to dev@kafka.apache.org offering to act as the Release Manager (RM). Look for previous emails with "I'd like to volunteer for the release manager".

It is important that between the time the release plan is voted on and the time the release branch is created, no experimental or potentially destabilizing work is checked into trunk. While it is acceptable to introduce major changes, they must be thoroughly reviewed and have good test coverage to ensure that the release branch does not start off unstable. If necessary, the RM can discuss whether certain issues should be fixed on trunk during this time and, if so, what the gating criteria for accepting them are.

- RM must have gpg keys with the public key publicly available to validate the authenticity of the Apache Kafka release: If you haven't set up a gpg key, set one up using 4096 bit RSA (http://www.apache.org/dev/release-signing.html). Make sure that your public key is uploaded to one of the public servers (http://www.apache.org/dev/release-signing.html#keyserver). Also, add your public key to https://github.com/apache/kafka-site/blob/asf-site/KEYS

- RM's Apache account must have one of the RM's ssh public keys so that the release script can use SFTP to upload artifacts to the RM's account on home.apache.org. Verify by using `sftp <your-apache-id>@home.apache.org`; if you get authentication failures, log in to id.apache.org and add your public ssh key to your Apache account. If you need a new ssh key, generate one with `ssh-keygen -t rsa -b 4096 -C <your-apache-id>@apache.org`, saving the key in `~/.ssh/apache_rsa`; add the key locally with `ssh-add ~/.ssh/apache_rsa`; add the public SSH key (contents of `~/.ssh/apache_rsa.pub`) to your account using id.apache.org; and verify you can connect with sftp (may require up to 10 minutes for account changes to synchronize). See more detailed instructions.

- Make sure docs/documentation.html is referring to the next release and links, and update docs/upgrade.html with upgrade instructions for the next release. For a bugfix release, make sure to at least bump the version number in the "Upgrading to ..." header in docs/upgrade.html. If this is a major or minor release, it's a good idea to make this change now. If you end up doing it after cutting branches, be sure the commit lands on both trunk and your release branch. Note that this must be done before generating any artifacts because these docs are part of the content that gets voted on.

- Install the dependencies for the release scripts:

    easy_install jira==1.0.15

- Ensure you have configured SSH to pick up your key when connecting to apache.org domains. In ~/.ssh/config, add:

    Host *.apache.org
      IdentityFile ~/.ssh/<apache-ssh-key>

You will need to upload your maven credentials and signatory credentials for the release script by editing your `~/.gradle/gradle.properties` with:

    mavenUrl=https://repository.apache.org/service/local/staging/deploy/maven2
    mavenUsername=your-apache-id
    mavenPassword=your-apache-passwd
    signing.keyId=your-gpgkeyId
    signing.password=your-gpg-passphrase
    signing.secretKeyRingFile=/Users/your-id/.gnupg/secring.gpg
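Because a missing property tends to surface only partway through the release script, a quick pre-check can save time. The sketch below is an illustration only: `missing_gradle_keys` is a hypothetical helper, it handles only simple `key=value` lines (not the full Java properties syntax), and it never prints the values.

```python
from pathlib import Path

# Property names taken from the gradle.properties listing above.
REQUIRED_KEYS = (
    "mavenUrl",
    "mavenUsername",
    "mavenPassword",
    "signing.keyId",
    "signing.password",
    "signing.secretKeyRingFile",
)


def missing_gradle_keys(properties_text: str) -> list:
    """Return the required keys that do not appear as `key=value` lines."""
    present = set()
    for line in properties_text.splitlines():
        line = line.strip()
        # Skip blanks, comments, and anything that is not a key=value pair.
        if not line or line.startswith("#") or "=" not in line:
            continue
        present.add(line.split("=", 1)[0].strip())
    return [key for key in REQUIRED_KEYS if key not in present]


if __name__ == "__main__":
    path = Path.home() / ".gradle" / "gradle.properties"
    text = path.read_text() if path.exists() else ""
    print("missing keys:", missing_gradle_keys(text))
```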

If you don't already have a secret key ring under ~/.gnupg (which will be the case with GPG 2.1 and beyond), you will need to manually create it with `gpg --export-secret-keys -o ~/.gnupg/secring.gpg`. Obviously, be careful not to publicly upload your passwords. You should be editing the `gradle.properties` file under your home directory, not the one in Kafka itself.

Make sure your `~/.m2/settings.xml` is configured for pgp signing and uploading to the apache release maven:

    <servers>
      <server>
        <id>apache.releases.https</id>
        <username>your-apache-id</username>
        <password>your-apache-passwd</password>
      </server>
      <server>
        <id>your-gpgkeyId</id>
        <passphrase>your-gpg-passphrase</passphrase>
      </server>
    </servers>
    <profiles>
      <profile>
        <id>gpg-signing</id>
        <properties>
          <gpg.keyname>your-gpgkeyId</gpg.keyname>
          <gpg.passphraseServerId>your-gpgkeyId</gpg.passphraseServerId>
        </properties>
      </profile>
    </profiles>

You may also need to update some gnupg configs:

    echo "allow-loopback-pinentry" >> ~/.gnupg/gpg-agent.conf
    echo "use-agent" >> ~/.gnupg/gpg.conf
    echo "pinentry-mode loopback" >> ~/.gnupg/gpg.conf
    echo RELOADAGENT | gpg-connect-agent

Cut Branches

Skip this section if you are releasing a bug fix version (e.g. 2.2.1).

- Make sure you are working with a clean repo (i.e. identical to upstream - no changes in progress). If needed, clone a fresh copy.

- git checkout trunk

- Check that the current HEAD points to the commit on which you want to base the new release branch. Check out that particular commit if not.

- git branch 0.10.0

- git push apache 0.10.0

- Check that the branch was correctly propagated to Apache using the webui: https://gitbox.apache.org/repos/asf?p=kafka.git

- Modify the version in trunk to bump to the next one "0.10.1.0-SNAPSHOT" in the following files:

docs/js/templateData.js

gradle.properties

kafka-merge-pr.py

streams/quickstart/java/pom.xml

streams/quickstart/java/src/main/resources/archetype-resources/pom.xml

streams/quickstart/pom.xml

tests/kafkatest/__init__.py

tests/kafkatest/version.py

- Commit and push to trunk in apache. This is also needed in the non-trunk branch for a bug-fix release.

- Modify the DEFAULT_FIX_VERSION to 0.10.1.0 in kafka-merge-pr.py in the trunk branch. This is not needed in the non-trunk branch for bug-fix release.

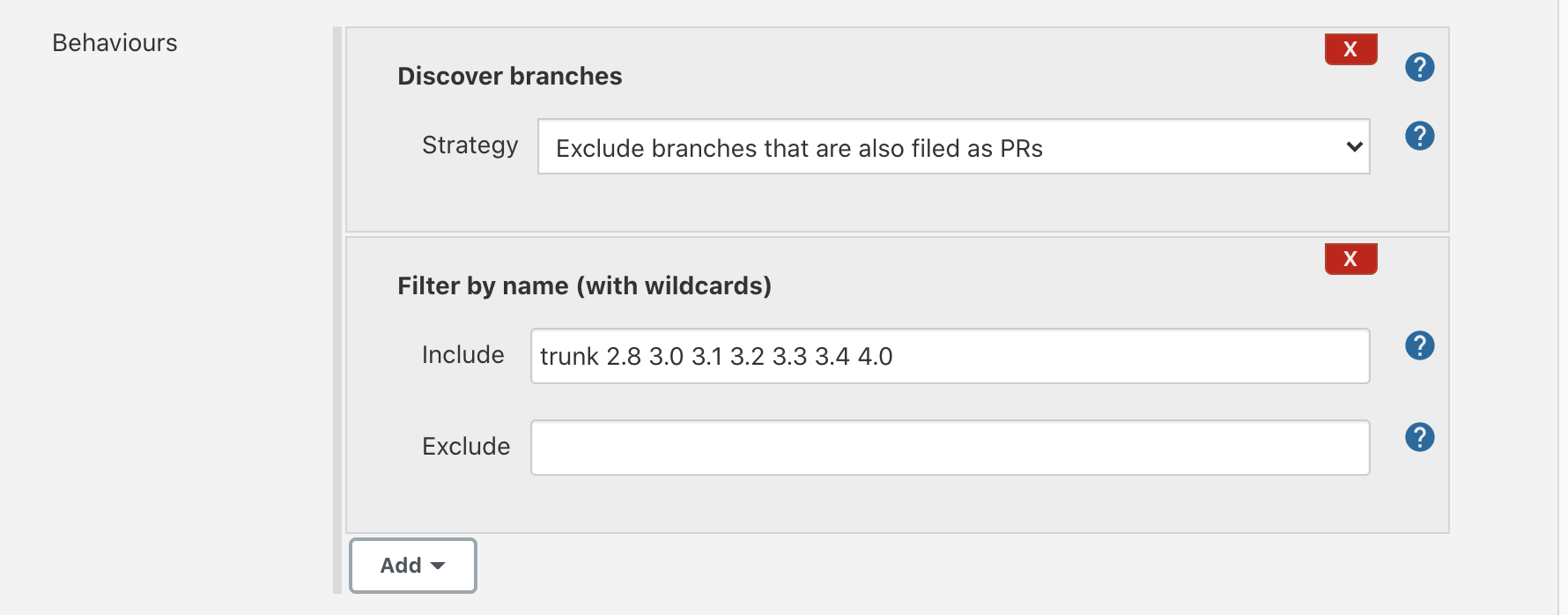

- Update the Jenkins configuration to include the newly created branch (if it's not there already) in the section shown in the following screenshot. If you don't have permission to create apache job, please ask Jun Rao to give you access.

Send email announcing the new branch:

    To: dev@kafka.apache.org
    Subject: New release branch 0.10.0

    Hello Kafka developers and friends,

    As promised, we now have a release branch for 0.10.0 release (with 0.10.0.0 as the version).
    Trunk has been bumped to 0.10.1.0-SNAPSHOT.

    I'll be going over the JIRAs to move every non-blocker from this release to the next release.

    From this point, most changes should go to trunk.
    *Blockers (existing and new that we discover while testing the release) will be double-committed.*
    Please discuss with your reviewer whether your PR should go to trunk or to trunk+release so they can merge accordingly.

    *Please help us test the release!*

    Thanks!

    $RM
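The branch name and the next trunk version used in this section both follow from the version being released. The sketch below shows one way to derive them. The function names are hypothetical, and it assumes the next development version is a minor bump (as in the 0.10.0.0 to 0.10.1.0 example), which will not hold when trunk moves to a new major version.

```python
def release_branch(version: str) -> str:
    """Drop the last version component: 0.10.0.0 -> 0.10.0, 2.8.0 -> 2.8."""
    return ".".join(version.split(".")[:-1])


def next_trunk_version(version: str) -> str:
    """Bump the second-to-last component, reset the last one, add -SNAPSHOT.

    Assumption: the following development version is a minor bump.
    """
    parts = [int(p) for p in version.split(".")]
    parts[-2] += 1
    parts[-1] = 0
    return ".".join(str(p) for p in parts) + "-SNAPSHOT"


if __name__ == "__main__":
    released = "0.10.0.0"
    print("git branch", release_branch(released))
    print("trunk version:", next_trunk_version(released))
```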

Create Release Artifacts

- Run the `release.py` script in the root of the kafka repository and follow the instructions. NOTE: if you are releasing a version prior to 1.0.x, you need to make minor edits to the script so that its version pattern check accepts four-digit versions instead of three-digit ones.

- Until is complete, you will have to manually verify the binary artifact's LICENSE file matches its own packaged dependencies. See that ticket for an example of how to do this.

- If any step fails, make sure you have everything set up as described in the Prerequisites section and are using the correct passphrase for each config.

Website update process

Note: Unlike the Kafka sources (kafka repo), the content of the Apache Kafka website kafka.apache.org is backed by a separate git repository (kafka-site repo). Today, any changes to the content and docs must be kept manually in sync between the two repositories.

We should improve the release script to include these steps. In the meantime, for new releases:

- Verify that documentation.html (in kafka-site repo) is referring to the correct release and links.

- Verify that docs/documentation.html (in kafka repo) is similarly set up correctly.

- git clone https://gitbox.apache.org/repos/asf/kafka-site.git

- git checkout asf-site

- Update the website content including docs:

  - The gradle target `releaseTarGz` generates the Kafka website content including the Kafka documentation (with the exception of a few pages like project-security.html, which are only tracked in the kafka-site repository). This build target also auto-generates the configuration docs of the Kafka broker/producer/consumer/etc. from their respective Java sources. The build output is stored in `./core/build/distributions/kafka_2.13-2.8.0-site-docs.tgz`.

  - Untar the file and rename the `site-docs/` folder to `28/` (or, if the latter already exists, replace its contents). That's because the docs for a release are stored in a separate folder (e.g., `27/` for Kafka v2.7 and `28/` for Kafka v2.8), which ensures the Kafka website includes the documentation for the current and all past Kafka releases.

- Update the javadocs:

  - Create the release Javadocs with the gradle target `aggregatedJavadoc`, with output under `./build/docs/javadoc/`.

  - Copy the `javadoc` folder to `28/` (i.e., the full path is `28/javadoc/`). If this is a bug fix release, do this after the vote has passed to avoid showing an unreleased version number in the published javadocs.

- Commit
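The per-release docs folder name used in the steps above can be derived from the version number. The following is a small sketch of that mapping; `docs_folder` is a hypothetical helper. The rule for versions from 1.0 onward follows the `27/` and `28/` examples, while the rule for pre-1.0 versions is inferred from the single `0100/` example later in this document and should be treated as an assumption.

```python
def docs_folder(version: str) -> str:
    """Map a Kafka version to its kafka-site docs folder name."""
    parts = version.split(".")
    if parts[0] == "0":
        # Pre-1.0 versions have four components; the folder is assumed to
        # concatenate the first three (0.10.0.0 -> 0100).
        return "".join(parts[:3])
    # From 1.0 onward: major and minor concatenated (2.8.0 -> 28).
    return "".join(parts[:2])


if __name__ == "__main__":
    for v in ("2.7.0", "2.8.0", "0.10.0.0"):
        print(v, "->", docs_folder(v) + "/")
```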

Blog Post

- For major releases, consider writing a blog post at https://blogs.apache.org/kafka/. If you don't have a blog account, just log into blogs.apache.org once with your Apache credentials and notify the Kafka PMC chair, who can then invite you to the blog space. Follow the instructions for creating a preview. Send a link to the preview blog post to the dev mailing list for comments before publishing. (See INFRA-20646 for an issue about previews of blog entry drafts.)

It's nice to thank as many people as we can identify. The best I could come up with is this:

    # get a list of all committers, contributors, and reviewers
    git log 2.7..2.8 | grep 'Author\|Reviewer\|Co-authored-by:' | tr ',' '\n' | sed 's/^.*:\s*//' | sed 's/^\s*//' | sed 's/\s*<.*$//' | sort | uniq > /tmp/contributors

    # and then manually clean up the list, removing different versions of people's names and stripping out any fragments of commit messages that made it in
    vim /tmp/contributors

    # then copy the list (don't forget to drop the trailing comma)
    cat /tmp/contributors | tr '\n' ',' | sed 's/,/, /g' | xclip -selection clipboard

- Consider incorporating any suggestions from the dev thread until release is announced

Announce the RC

- Send an email announcing the release candidate.

If you need to roll a new RC

- Go to https://repository.apache.org/#stagingRepositories, find the uploaded artifacts and drop them.

- Go back to the beginning, and don't forget to bump the RC number.
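Bumping the RC number is easy to get wrong by hand. As an illustration, the sketch below increments the suffix of a tag in the `0.10.0.0-rc6` form used elsewhere in this document; `next_rc_tag` is a hypothetical helper, not something `release.py` is known to expose.

```python
import re


def next_rc_tag(tag: str) -> str:
    """Return the tag for the following release candidate."""
    match = re.fullmatch(r"(?P<version>[0-9.]+)-rc(?P<num>[0-9]+)", tag)
    if match is None:
        raise ValueError(f"not an RC tag: {tag!r}")
    return f"{match.group('version')}-rc{int(match.group('num')) + 1}"


if __name__ == "__main__":
    print(next_rc_tag("0.10.0.0-rc6"))
```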

After the vote passes

- Remember: at least 3 days, 3 +1 from PMC members (committers are not enough!) and no -1.
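The reminder above can be written down as a simple check before sending the closing email. This sketch encodes only the three conditions as stated on this page (it is not a restatement of the full ASF voting policy), and `VoteTally` and `vote_passes` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class VoteTally:
    days_open: float         # how long the vote thread has been open
    binding_plus_ones: int   # +1 votes from PMC members only
    minus_ones: int          # -1 votes from anyone


def vote_passes(tally: VoteTally) -> bool:
    """Apply the checklist: at least 3 days, 3 binding +1 votes, no -1."""
    return (
        tally.days_open >= 3
        and tally.binding_plus_ones >= 3
        and tally.minus_ones == 0
    )


if __name__ == "__main__":
    print(vote_passes(VoteTally(days_open=4, binding_plus_ones=3, minus_ones=0)))
```

Committer and community +1 votes are deliberately left out of the tally, since the checklist counts only PMC votes as binding.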

Send a vote closing email:

    To: dev@kafka.apache.org
    Subject: [RESULTS] [VOTE] Release Kafka version 0.10.0.0

    This vote passes with 7 +1 votes (3 bindings) and no 0 or -1 votes.

    +1 votes
    PMC Members:
    * $Name
    * $Name
    * $Name

    Committers:
    * $Name
    * $Name

    Community:
    * $Name
    * $Name

    0 votes
    * No votes

    -1 votes
    * No votes

    Vote thread:
    http://markmail.org/message/faioizetvcils2zo

    I'll continue with the release process and the release announcement will follow in the next few days.

    $RM

- Create a new tag for the release, on the same commit as the voted rc tag and push it:

- Use "git show 0.10.0.0-rc6" to find the commit hash of the tag

- git tag -a 0.10.0.0 <commit hash>

- git push apache 0.10.0.0

- Merge the last version change / rc tag into the release branch and bump the version to 0.10.0.1-SNAPSHOT

- git checkout 0.10.0

- git merge 0.10.0.0-rc6

- Update version on the branch to 0.10.0.1-SNAPSHOT in the following places:

- KAFKA-REPO-DIR/gradle.properties

- KAFKA-REPO-DIR/streams/quickstart/java/pom.xml

- KAFKA-REPO-DIR/streams/quickstart/java/src/main/resources/archetype-resources/pom.xml

- KAFKA-REPO-DIR/streams/quickstart/pom.xml

- KAFKA-REPO-DIR/tests/kafkatest/__init__.py (note: this version name can't follow the -SNAPSHOT convention due to python version naming restrictions, instead update it to 0.10.0.1.dev0)

- git commit -a

- git push apache 0.10.0
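The two version strings needed for the bump above differ only in their suffix. A minimal sketch, assuming the released version is purely numeric and dot-separated; `next_bugfix_versions` is a hypothetical helper name.

```python
from typing import Tuple


def next_bugfix_versions(released: str) -> Tuple[str, str]:
    """Return (Gradle/Maven snapshot version, Python dev version).

    The Python form is the one used in tests/kafkatest/__init__.py, which
    cannot follow the -SNAPSHOT convention.
    """
    parts = [int(p) for p in released.split(".")]
    parts[-1] += 1
    base = ".".join(str(p) for p in parts)
    return f"{base}-SNAPSHOT", f"{base}.dev0"


if __name__ == "__main__":
    snapshot, py_dev = next_bugfix_versions("0.10.0.0")
    print("gradle.properties and pom.xml files:", snapshot)
    print("tests/kafkatest/__init__.py:", py_dev)
```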

- Mark the version as released in Kafka JIRA (from JIRA administration panel, select versions and scroll mouse towards the end of the line for the particular version. From the dropdown list, select release and set the date).

- Upload all artifacts, release notes, and docs to https://dist.apache.org/repos/dist/release/kafka (an SVN repo, using Apache committer id/passwd):

- Only PMC members can upload to the `release` directory, so if the RM is not in the PMC, they can upload the files to https://dist.apache.org/repos/dist/dev/kafka instead and ask a PMC member to move them to the release directory

- svn co https://dist.apache.org/repos/dist/release/kafka kafka-release

- create a new directory for the release (for example kafka-release/0.10.0.0)

- copy the release artifacts from the latest RC (the ones that were in your people.apache.org directory) to the new release directory

- Add the directory to SVN: svn add 0.10.0.0

- Make sure the KEYS file in the svn repo includes the committer who signed the release. The KEYS must be in https://www.apache.org/dist/kafka/KEYS and not just in http://kafka.apache.org/KEYS.

- svn commit -m "Release 0.10.0.0"

- Go to https://repository.apache.org/#stagingRepositories, find the uploaded artifacts and release this (this will push to maven central)

- Wait for about a day for the artifacts to show up in apache mirror and maven central.

- In trunk update the following files with the current release number. This is needed for a feature as well as a bug-fix release

- KAFKA-REPO-DIR/gradle/dependencies.gradle

- KAFKA-REPO-DIR/tests/docker/Dockerfile

- KAFKA-REPO-DIR/tests/kafkatest/version.py

- KAFKA-REPO-DIR/vagrant/base.sh

- Upload the new release and kafka-streams-x.x.x-test.jar to the S3 bucket "kafka-packages". For example:

- aws s3 cp kafka_2.12-2.7.0.tgz s3://kafka-packages

- aws s3 cp kafka_2.13-2.7.0.tgz s3://kafka-packages

- aws s3 cp kafka-streams-2.7.0-test.jar s3://kafka-packages

- Update these commands to use the current release version.

- If you don't have permission to run these commands, ask on the dev mailing list for someone with access to run them for you.
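Since the upload commands above must be rewritten for each release, they can be generated from the version number. The sketch below only builds the command strings and does not call AWS; `s3_upload_commands` is a hypothetical helper, and the default Scala versions are simply the ones from the 2.7.0 example.

```python
from typing import List, Sequence


def s3_upload_commands(
    version: str,
    scala_versions: Sequence[str] = ("2.12", "2.13"),
    bucket: str = "kafka-packages",
) -> List[str]:
    """Build the `aws s3 cp` commands for one release."""
    files = [f"kafka_{scala}-{version}.tgz" for scala in scala_versions]
    files.append(f"kafka-streams-{version}-test.jar")
    return [f"aws s3 cp {name} s3://{bucket}" for name in files]


if __name__ == "__main__":
    for command in s3_upload_commands("2.7.0"):
        print(command)
```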

- Update the website:

- git clone https://gitbox.apache.org/repos/asf/kafka-site.git

- git checkout asf-site

- If it's a feature release:

- Update files (e.g. documentation.html, protocol.html, quickstart.html, intro.html) to include the link for the new version (e.g. 0100/documentation.html). The full list of files can be found by `grep "should always link the the latest"` and exclude those per-release files (e.g. ./10/documentation/streams/upgrade-guide.html)

- Verify that related html files (excluding per-release files) have been updated to use the new release version by doing `grep -A1 "should always link the the latest" . -Irn` and checking that the new feature version is used.

- Update files (e.g. documentation.html, streams/quickstart.html) from the previous release (e.g. if the current release is 2.8, update files in the /27 folder) to change `'<!--//#include virtual="'...` to `'<!--#include virtual="...`. You can find the files by running `grep -R '<!--//#include virtual="' *` from the directory of the previous feature release. This kafka-site PR is an example of the changes that need to be made.

- Update downloads.html to include the new download links from mirrors and change last release to use archive. Also add a paragraph with a brief feature introduction for the release.

- Update index.html "Latest News" box

- git commit -am ".."

- git push origin asf-site

- Send out an announcement email. You will need to use your apache email address to send out the email (otherwise, it won't be delivered to announce@apache.org).

- Run `./release.py release-email` script in the root of the kafka repository and follow the instructions to generate the announcement email template for the release to the mailing list.

(or) follow below instruction to generate the email content.

Include a paragraph in the announcement email like: "According to git shortlog <number_of_contributors> people contributed to this release: <contributors>" where:

- number_of_contributors is determined via `git shortlog -sn --no-merges <previous_release_tag>..<current_release_tag> | wc -l` (eg `git shortlog -sn --no-merges 0.8.2.2..0.9.0.0 | wc -l`)

- contributors is determined via: `git shortlog -sn --no-merges <previous_release_tag>..<current_release_tag> | cut -f2 | tr '\n' ',' | sed -e 's/,/, /g'` (eg `git shortlog -sn --no-merges 0.8.2.2..0.9.0.0 | cut -f2 | sort --ignore-case | tr '\n' ',' | sed -e 's/,/, /g'`)
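The count and the list can also be produced in one step from the same `git shortlog -sn --no-merges` output, which avoids the trailing comma left by the `tr`/`sed` pipeline. This is a sketch under the assumption that each shortlog line has the form `<count><TAB><name>`; `contributors_paragraph` is a hypothetical helper and the names in the usage example are placeholders.

```python
def contributors_paragraph(shortlog_output: str) -> str:
    """Build the announcement sentence from `git shortlog -sn` output."""
    names = [
        line.split("\t", 1)[1].strip()
        for line in shortlog_output.splitlines()
        if "\t" in line
    ]
    # Case-insensitive ordering, like `sort --ignore-case`.
    names.sort(key=str.casefold)
    return (
        f"According to git shortlog {len(names)} people contributed "
        f"to this release: {', '.join(names)}"
    )


if __name__ == "__main__":
    sample = "    12\tJane Doe\n     3\talice smith\n     1\tBob Ray\n"
    print(contributors_paragraph(sample))
```

To feed it real data, pipe the shortlog output into a file and pass its contents as the argument.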

- Log into people.apache.org with your apache id.

- cat mail.txt|mail -s "[ANNOUNCE] ..." announce@apache.org, users@kafka.apache.org, dev@kafka.apache.org, kafka-clients@googlegroups.com

note: you need to be subscribed to `kafka-clients@googlegroups.com` with your apache email address; otherwise the email bounces back

Use the previously generated email template using `release.py` script (or) Use the below email template (maybe update Scala versions accordingly):

    The Apache Kafka community is pleased to announce the release for Apache Kafka <release-number>.

    This is a bug fix release and it includes fixes and improvements from <#> JIRAs, including a few critical bugs.

    All of the changes in this release can be found in the release notes:
    https://www.apache.org/dist/kafka/<release-number>/RELEASE_NOTES.html

    You can download the source and binary release (Scala 2.11 and Scala 2.12) from:
    https://kafka.apache.org/downloads#<release-number>

    ---------------------------------------------------------------------------------------------------

    Apache Kafka is a distributed streaming platform with four core APIs:

    ** The Producer API allows an application to publish a stream of records to one or more Kafka topics.

    ** The Consumer API allows an application to subscribe to one or more topics and process the stream of records produced to them.

    ** The Streams API allows an application to act as a stream processor, consuming an input stream from one or more topics and producing an output stream to one or more output topics, effectively transforming the input streams to output streams.

    ** The Connector API allows building and running reusable producers or consumers that connect Kafka topics to existing applications or data systems. For example, a connector to a relational database might capture every change to a table.

    With these APIs, Kafka can be used for two broad classes of application:

    ** Building real-time streaming data pipelines that reliably get data between systems or applications.

    ** Building real-time streaming applications that transform or react to the streams of data.

    Apache Kafka is in use at large and small companies worldwide, including Capital One, Goldman Sachs, ING, LinkedIn, Netflix, Pinterest, Rabobank, Target, The New York Times, Uber, Yelp, and Zalando, among others.

    A big thank you for the following <#> contributors to this release!

    <list-of-contributors>

    We welcome your help and feedback. For more information on how to report problems, and to get involved, visit the project website at https://kafka.apache.org/

    Thank you!

    Regards,

    $RM


- The PMC member who committed the release artifacts to the SVN dist repository should add the release data to https://reporter.apache.org/addrelease.html?kafka (they will get a notification asking them to do this after the svn commit).

- The PMC member should double check whether older releases should be archived (cf. http://www.apache.org/legal/release-policy.html#when-to-archive). This includes updating `downloads.html`.

- Cf. and

- File a JIRA for updating compatibility/upgrade system tests to test the newly released version. Example PRs:

- Broker and clients: https://github.com/apache/kafka/pull/6794

- Streams: https://github.com/apache/kafka/pull/6597/files

- For major releases, publish the blog post previously shared with the dev list.